Table of Contents

1 Requirements and helper functions

1.1 Requirements

1.2 Mathematical notations for stationary problems

1.3 Generating stationary data

1.4 Mathematical notations for piecewise stationary problems

1.5 Generating fake piecewise stationary data

2 Python implementations of some statistical tests

2.1 Monitored

2.2 CUSUM

2.3 PHT

2.4 Gaussian GLR

2.5 Bernoulli GLR

2.6 Sub-Gaussian GLR

2.7 List of all Python algorithms

3 Comparing the different implementations

3.1 Generating some toy data

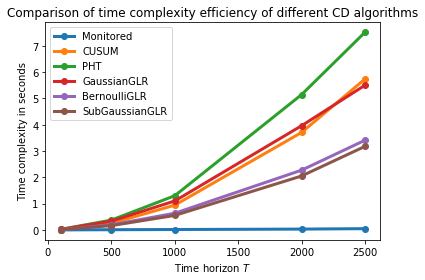

3.2 Checking time efficiency

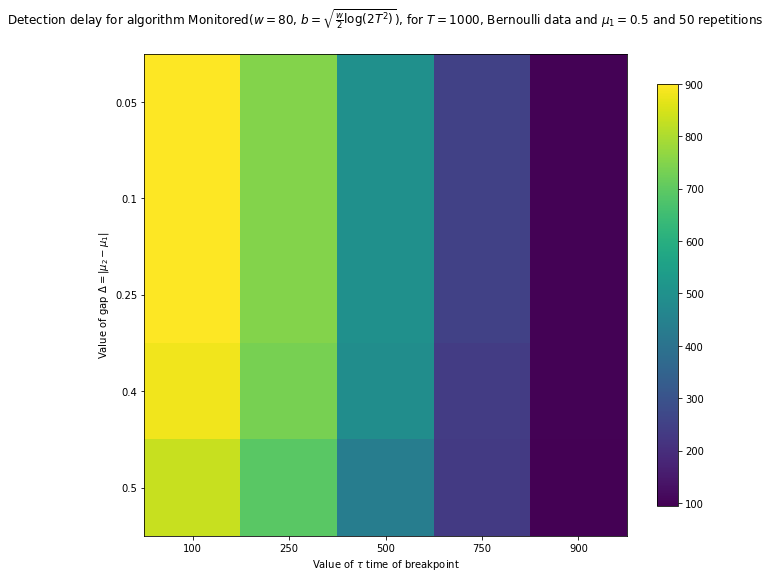

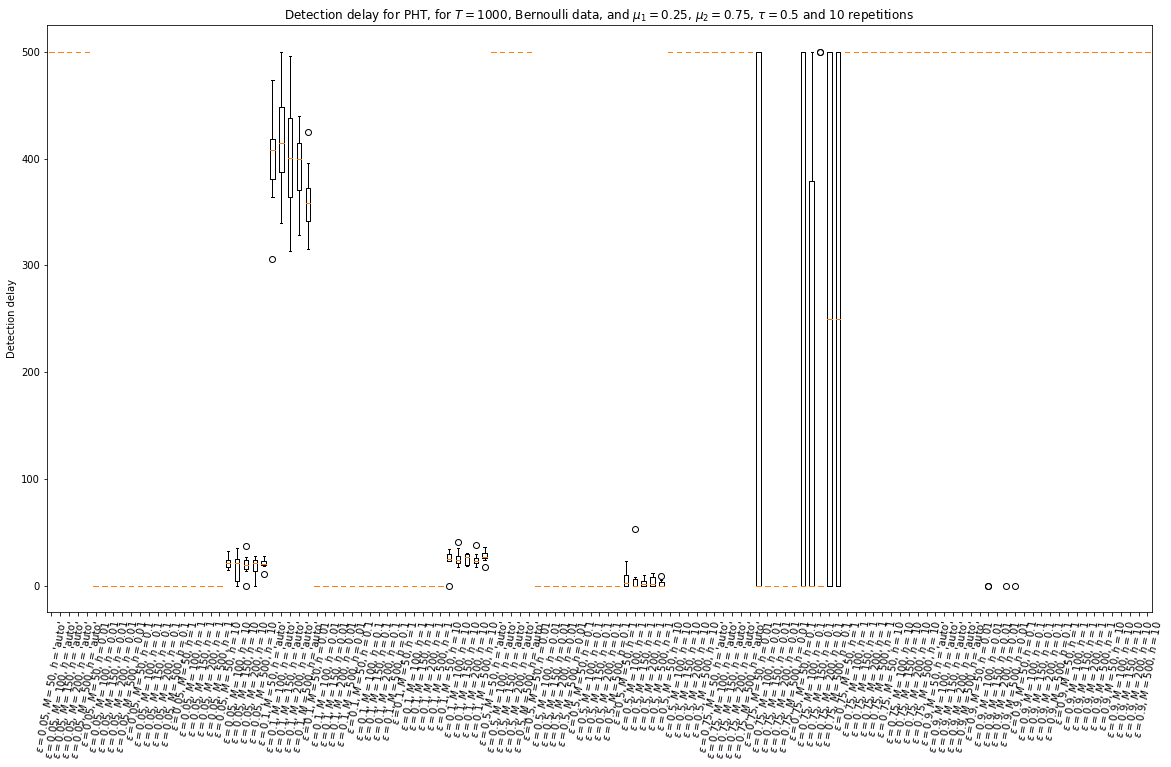

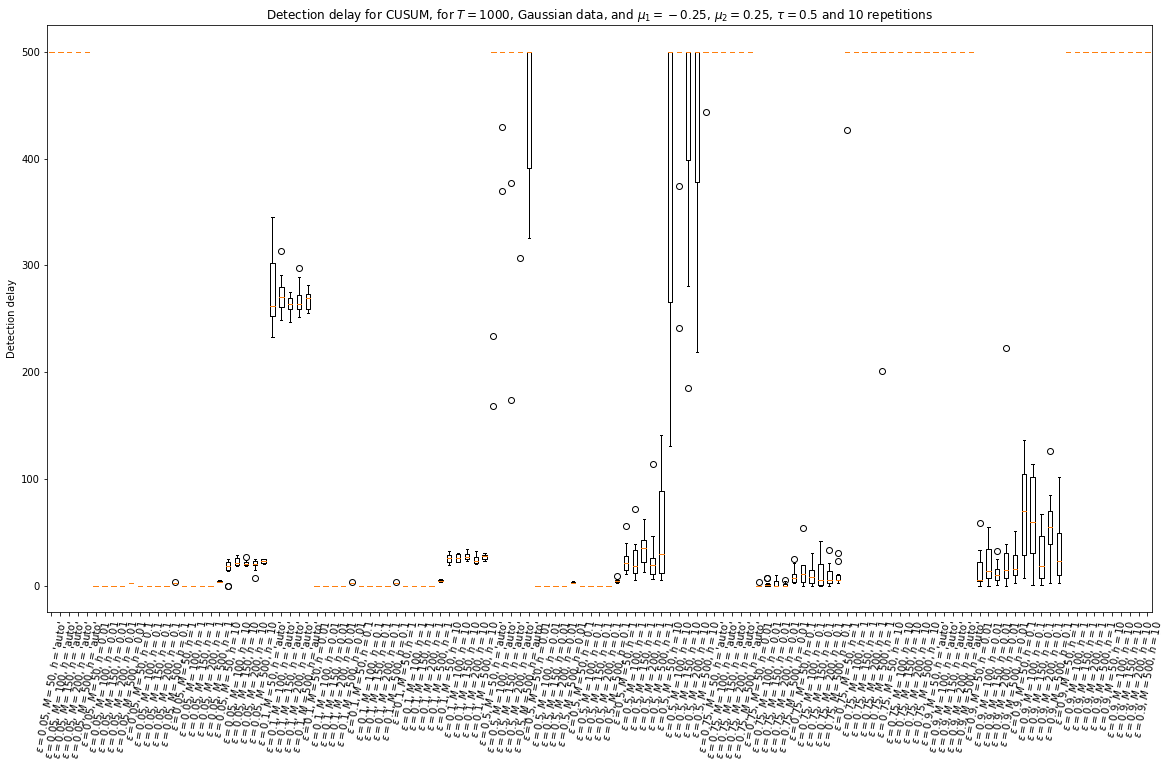

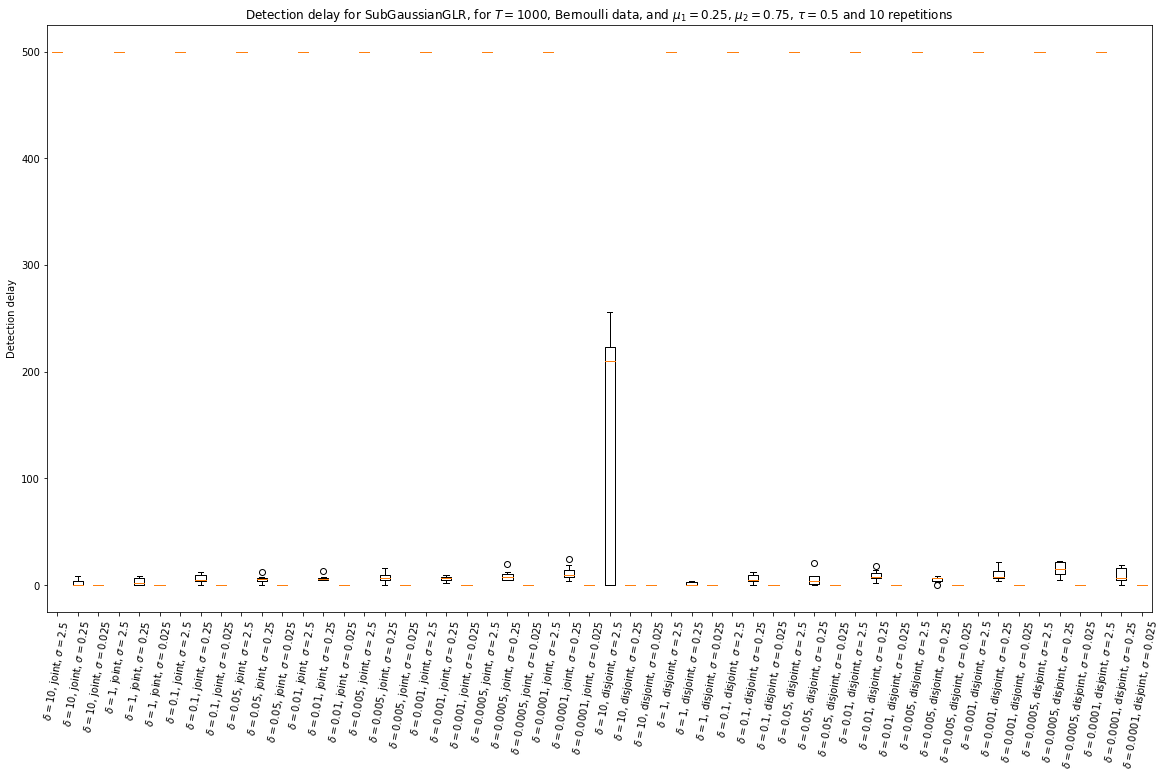

3.3 Checking detection delay

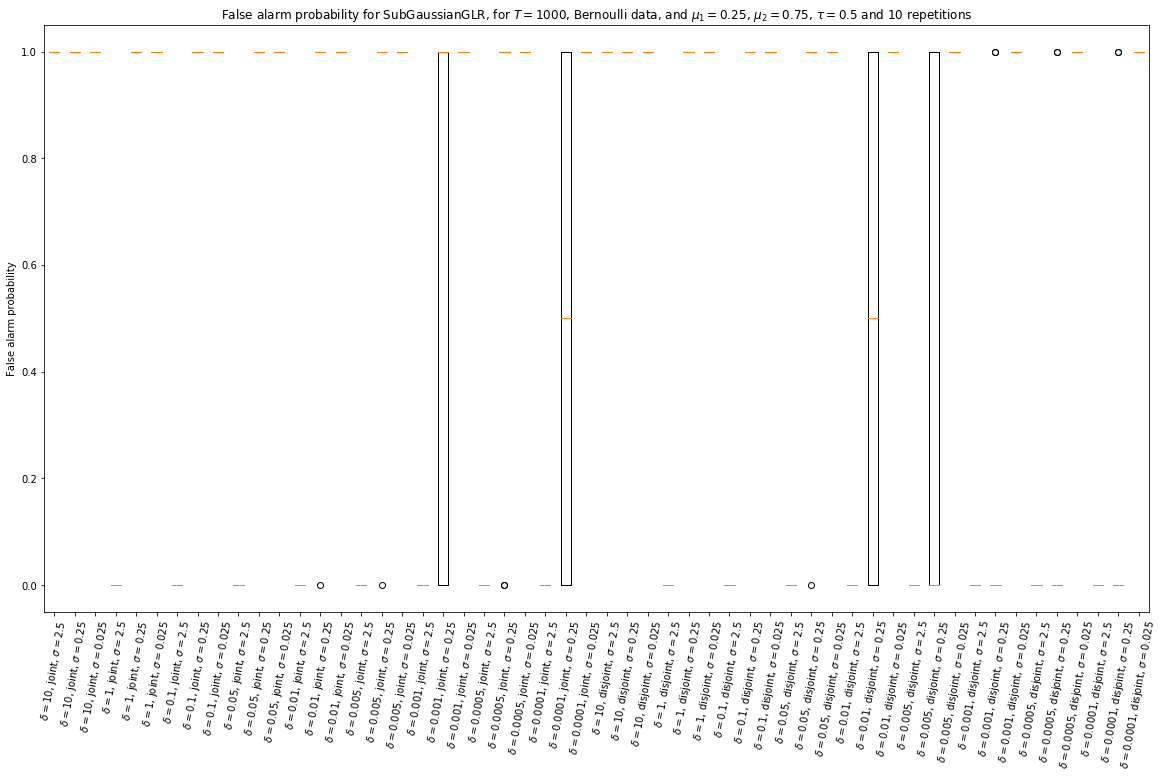

3.4 Checking false alarm probabilities

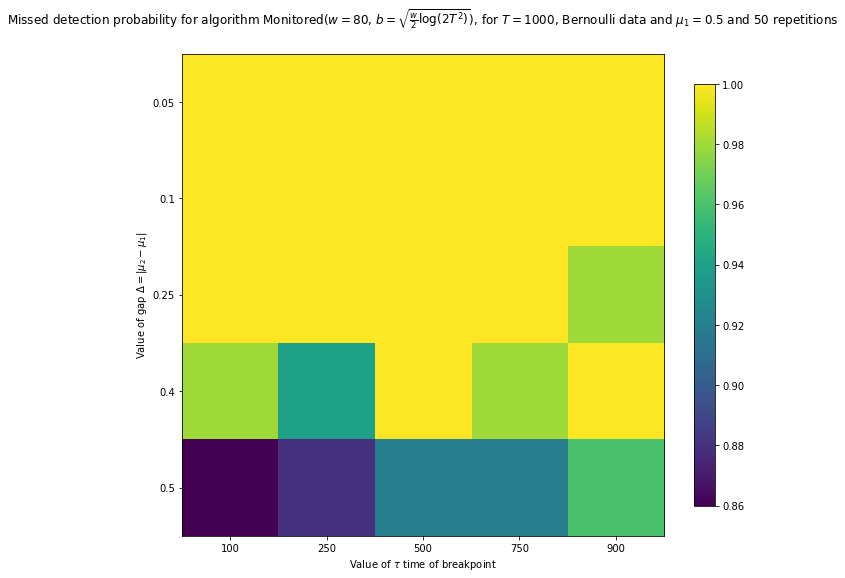

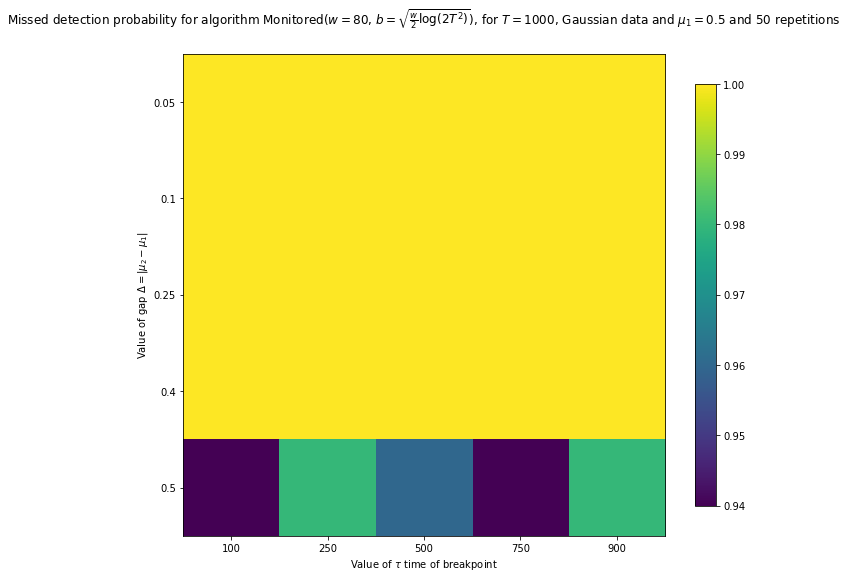

3.5 Checking missed detection probabilities

4 More simulations and some plots

4.1 Run a check for a grid of values

4.2 A version using joblib.Parallel to use multi-core computations

4.3 Checking on a small grid of values

4.4 Plotting the result as a 2D image

4.4.1 First example

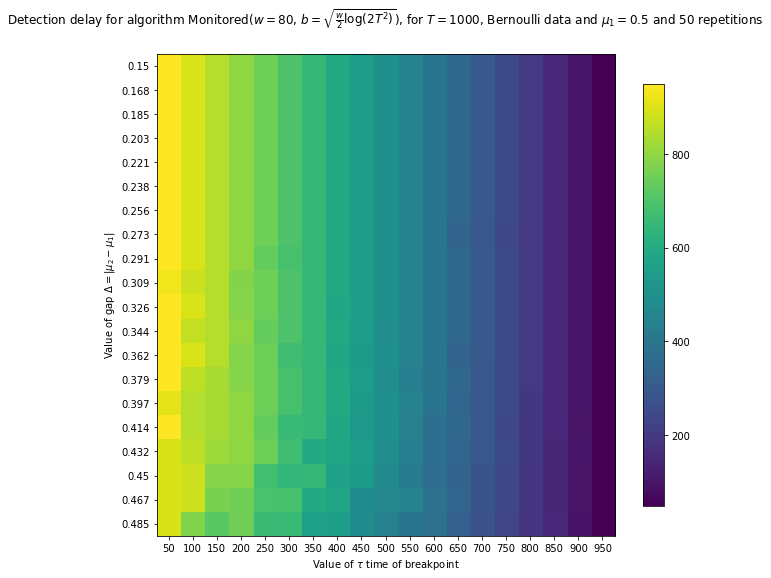

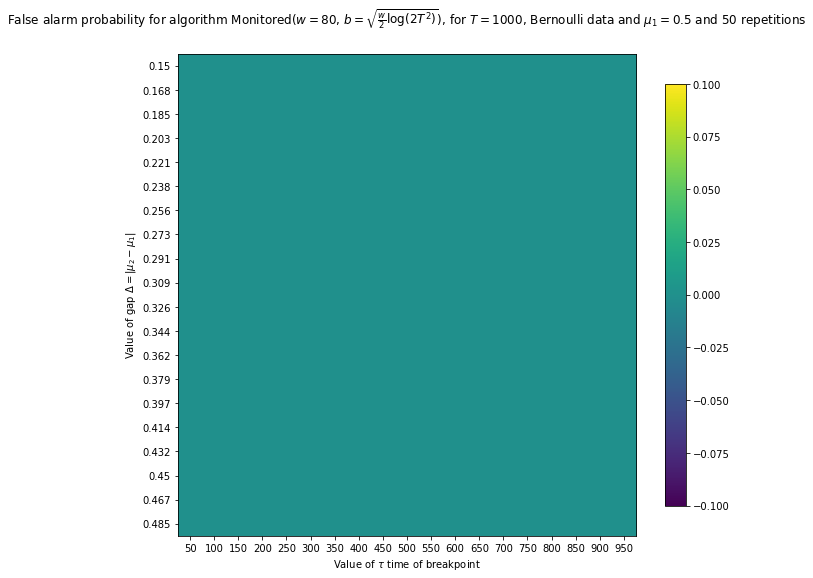

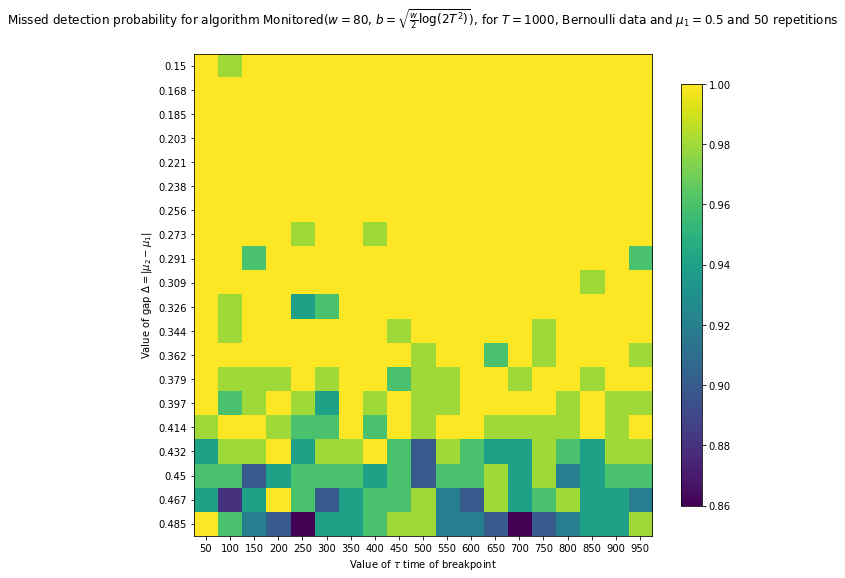

4.4.1.1 For Monitored

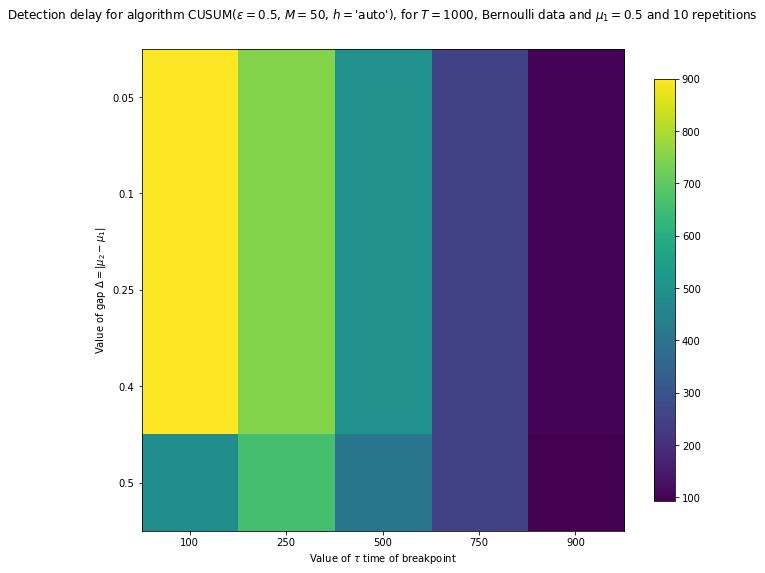

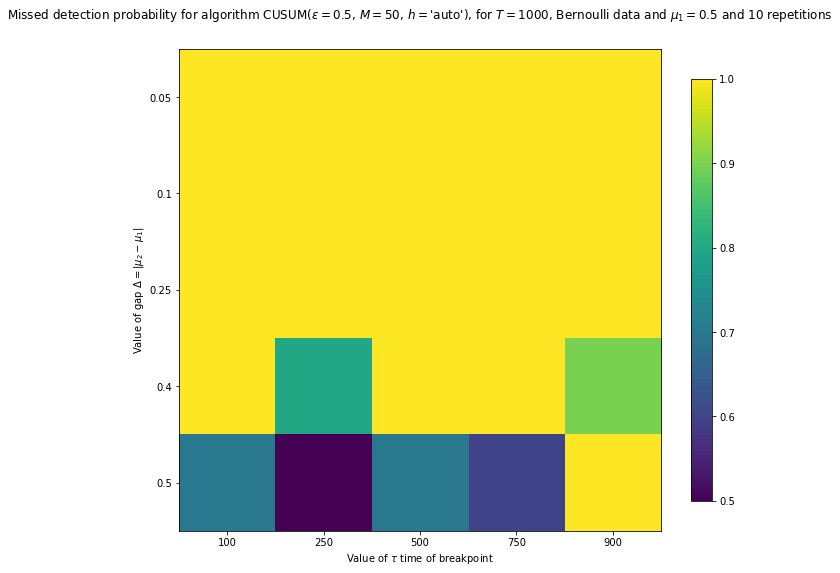

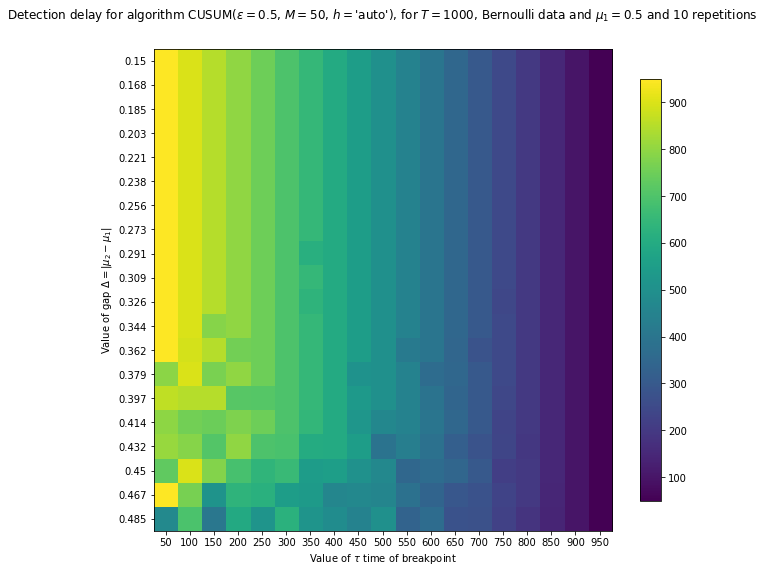

4.4.1.2 For CUSUM

4.4.2 Second example

4.4.2.1 For Monitored

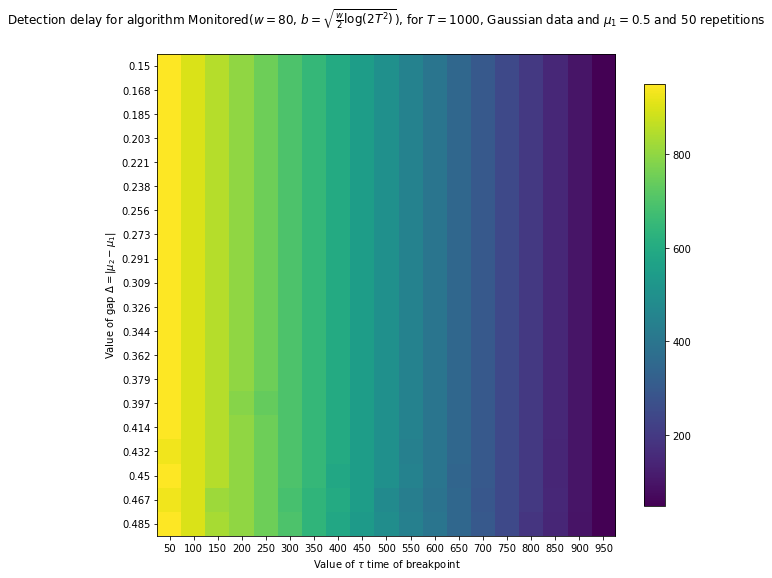

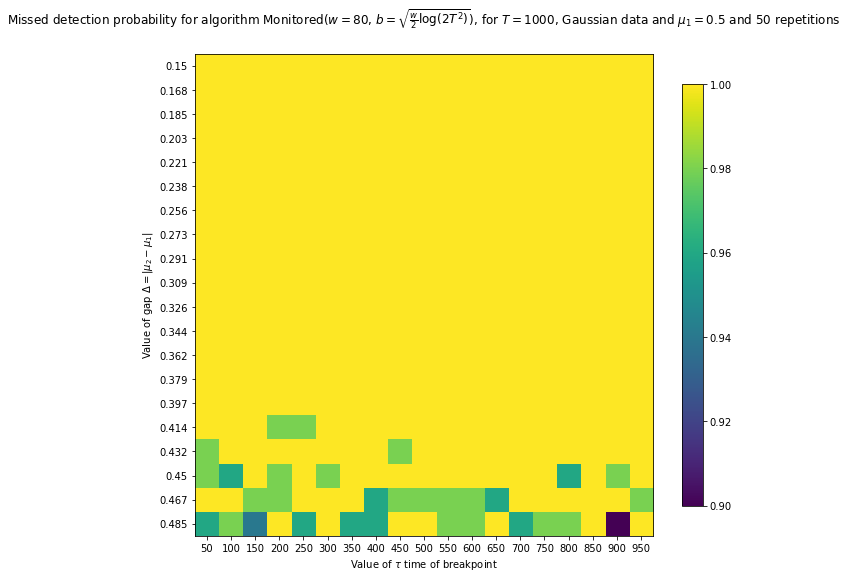

4.4.2.2 For Monitored for Gaussian data

4.4.2.3 For CUSUM

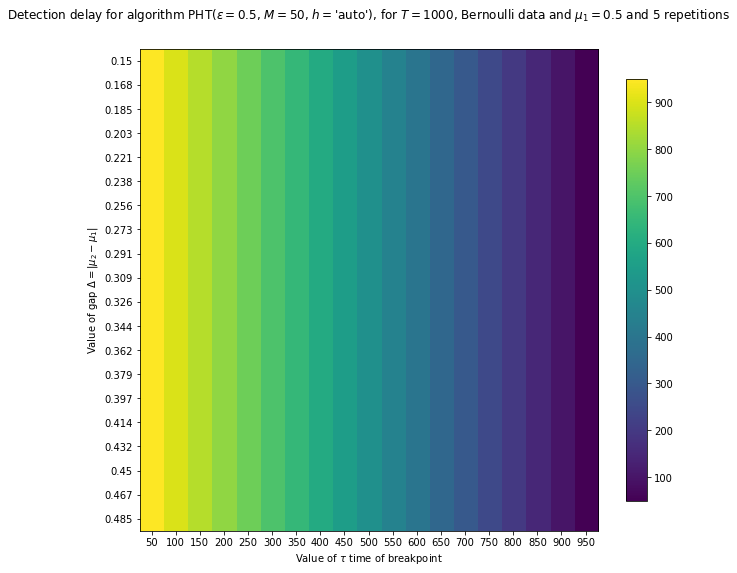

4.4.2.4 For PHT

4.4.2.5 For Bernoulli GLR

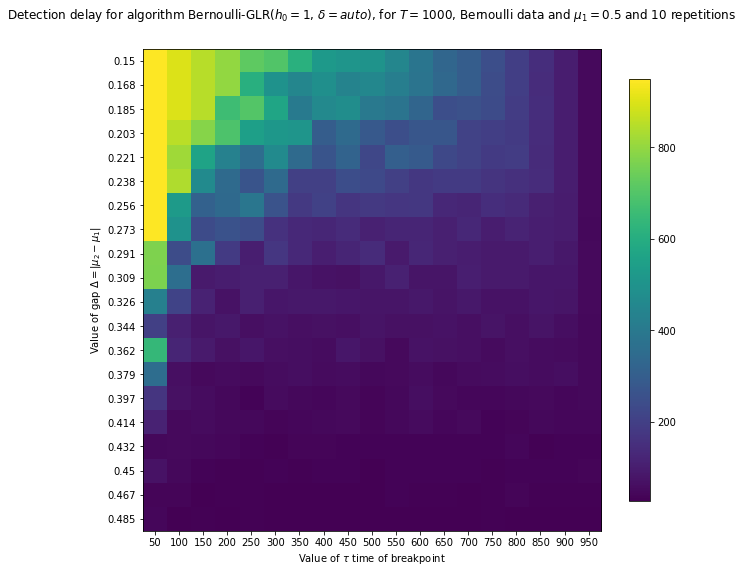

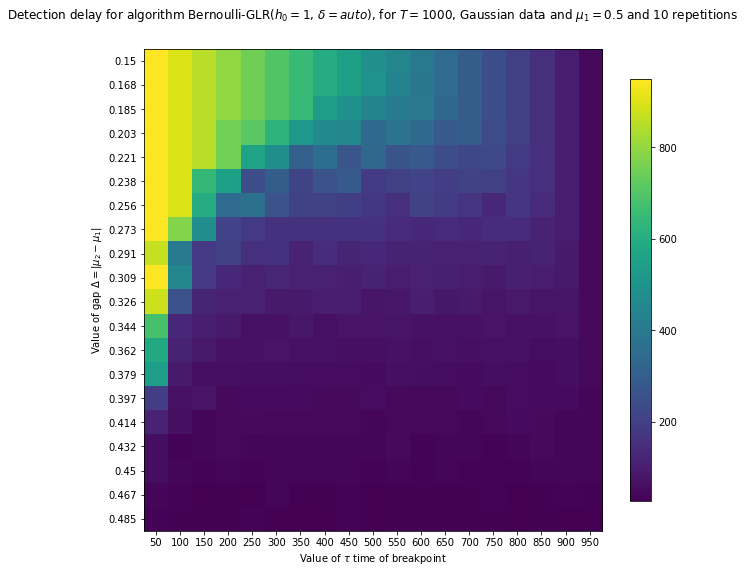

4.4.2.6 For Gaussian GLR

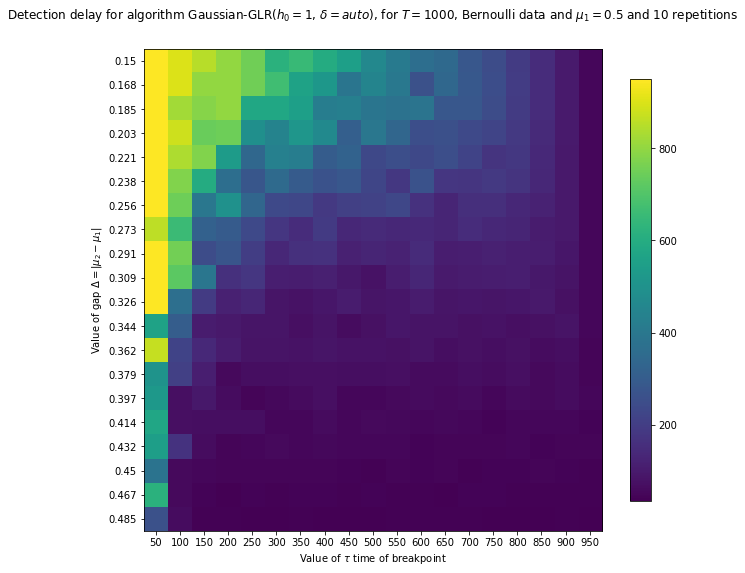

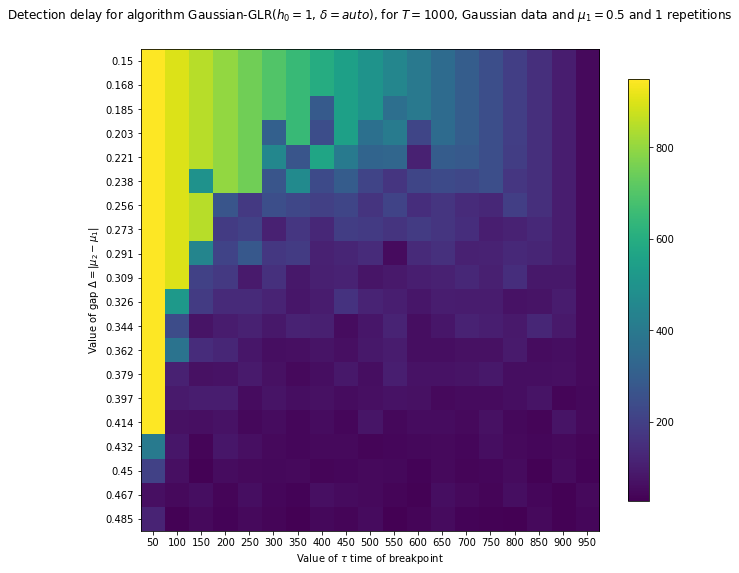

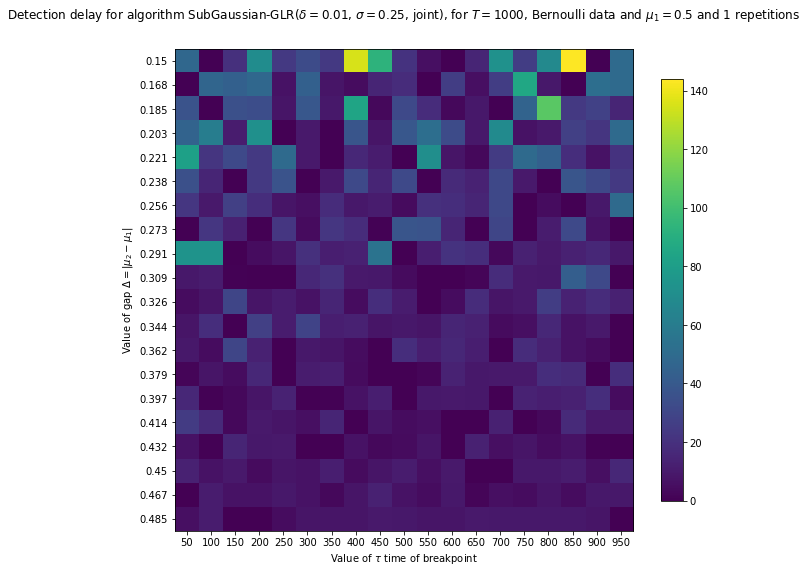

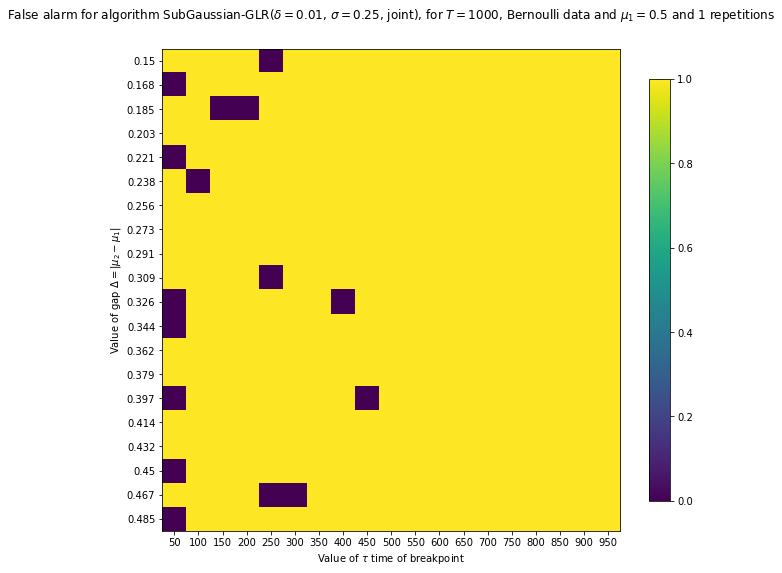

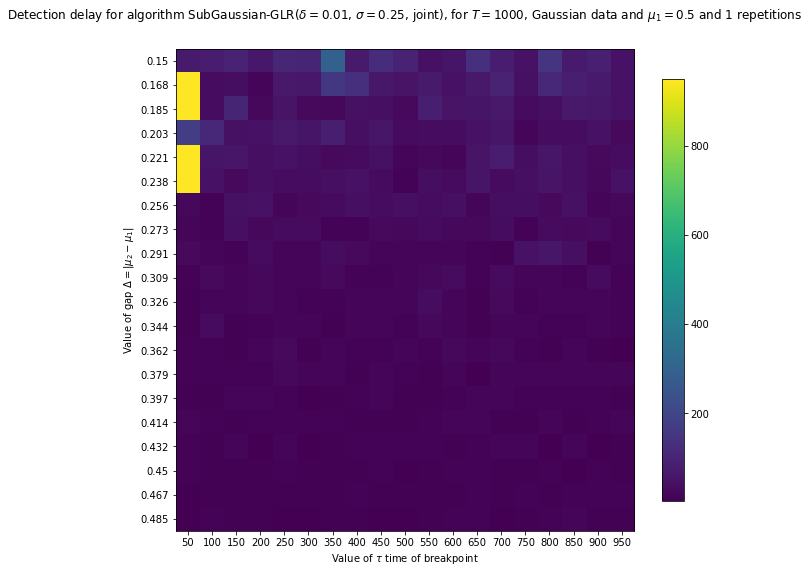

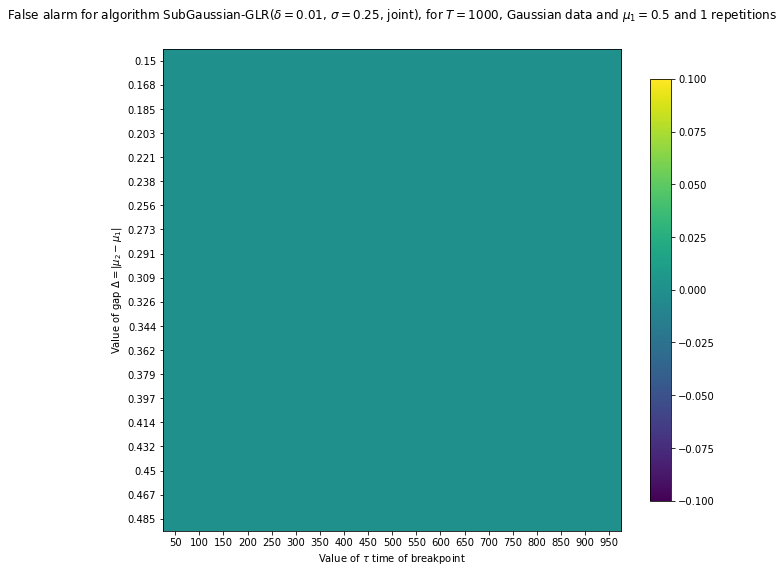

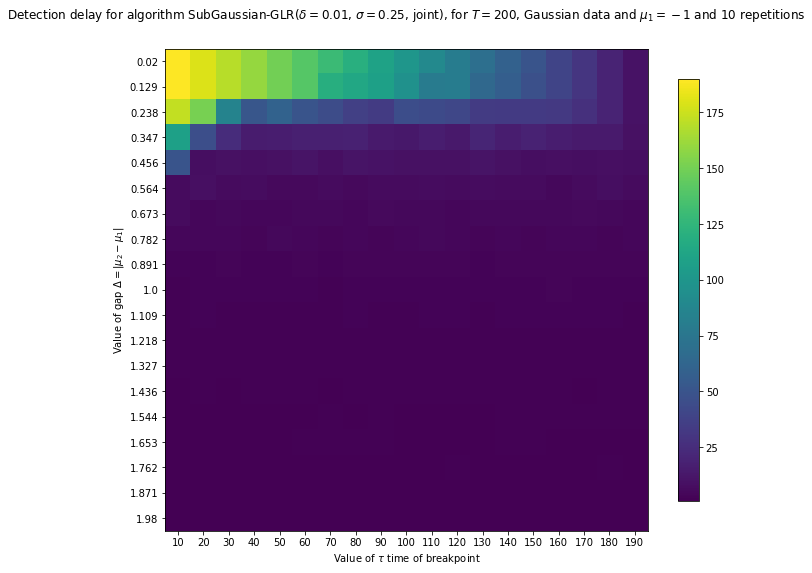

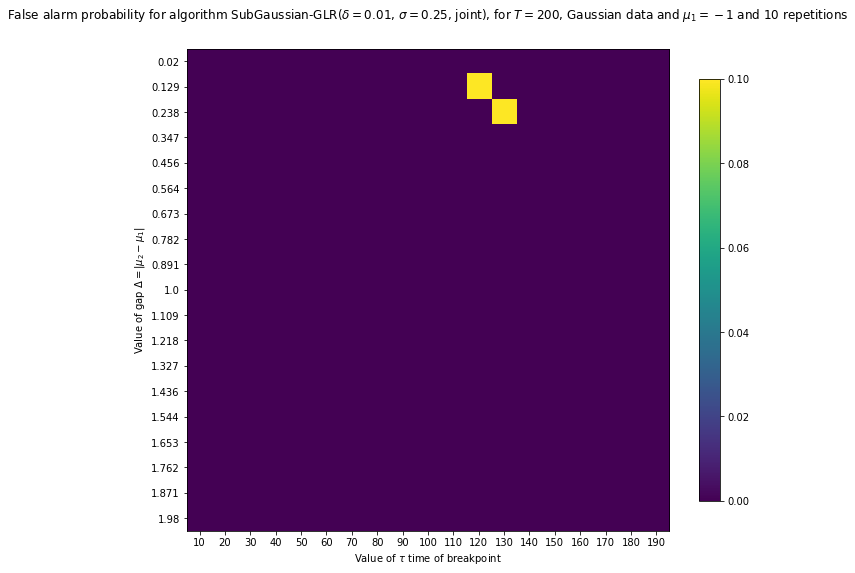

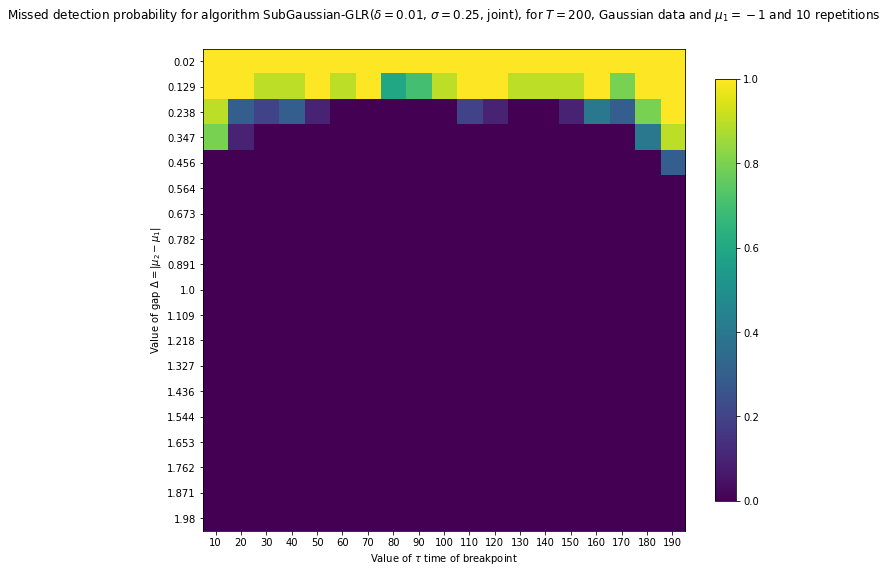

4.4.2.7 For Sub-Gaussian GLR

5 Exploring the parameters of change point detection algorithms: how to tune them?

5.1 A simple problem function

5.2 A generic function

5.3 Plotting the result as a 1D plot

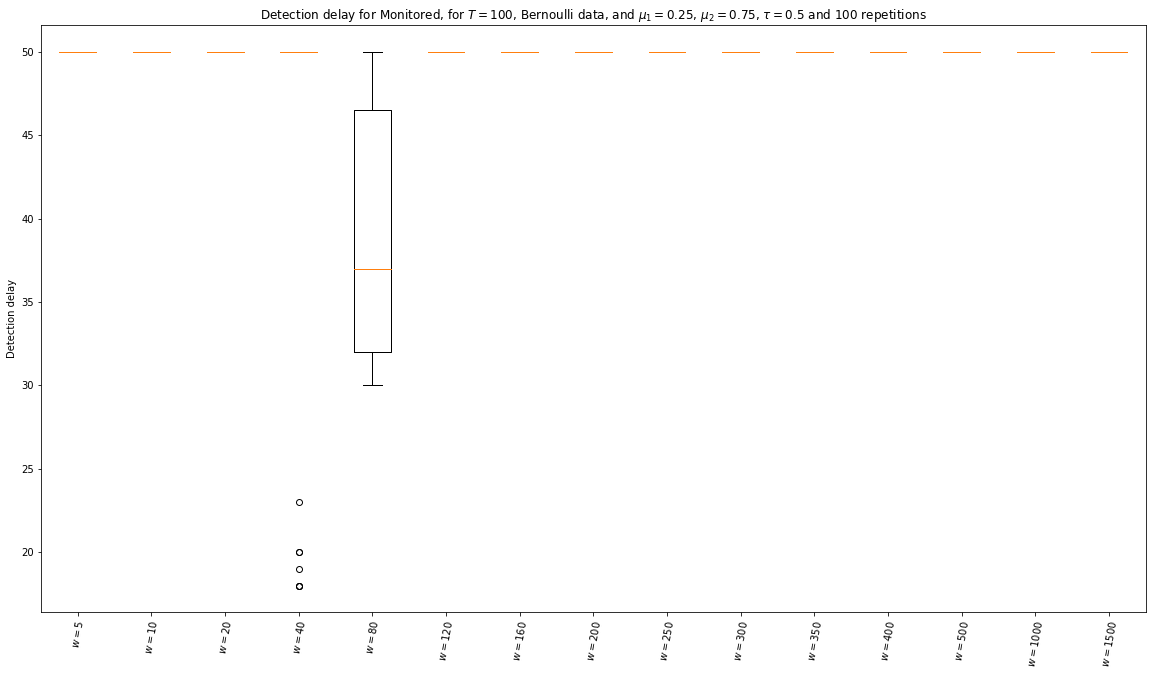

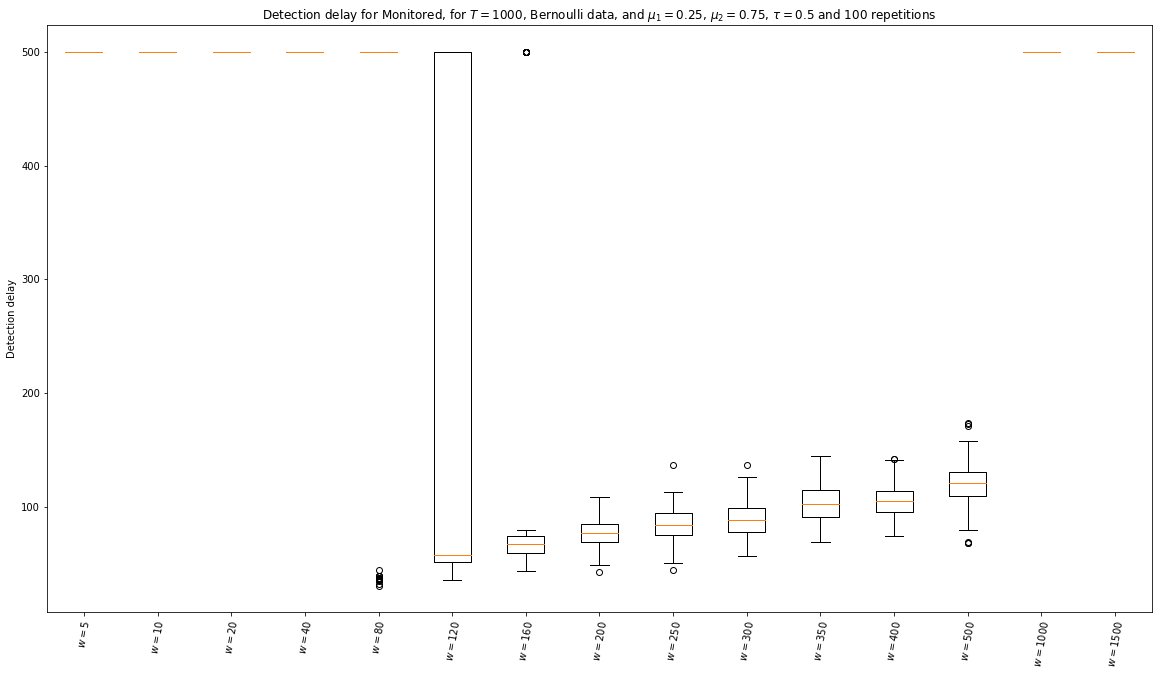

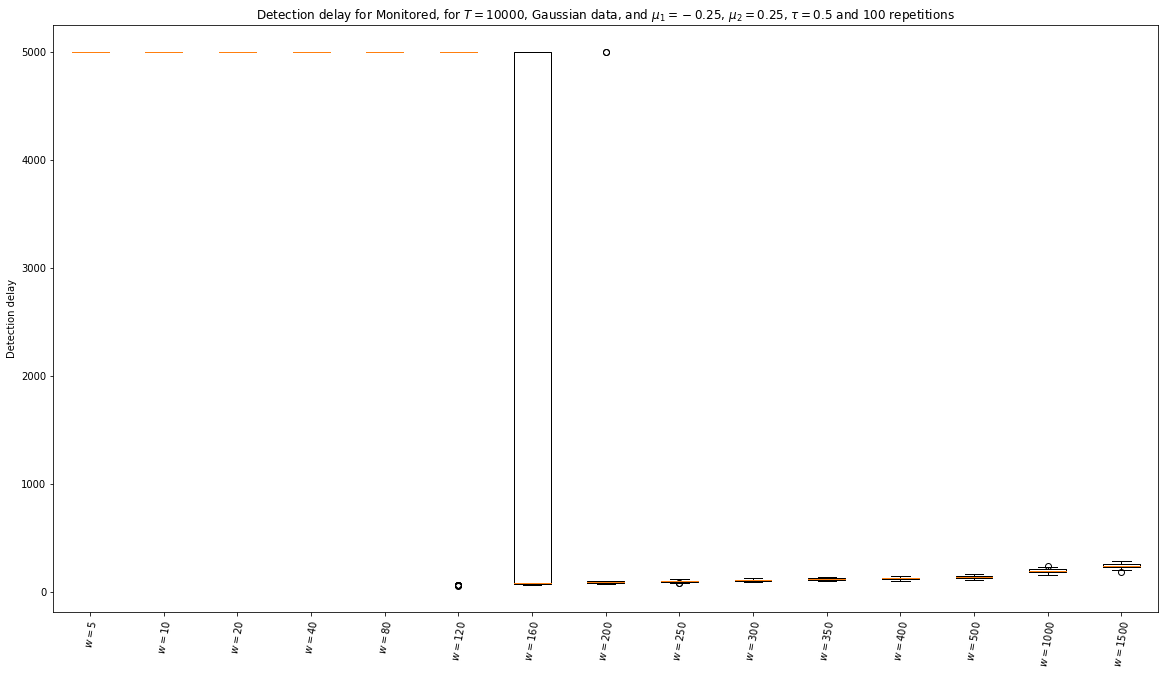

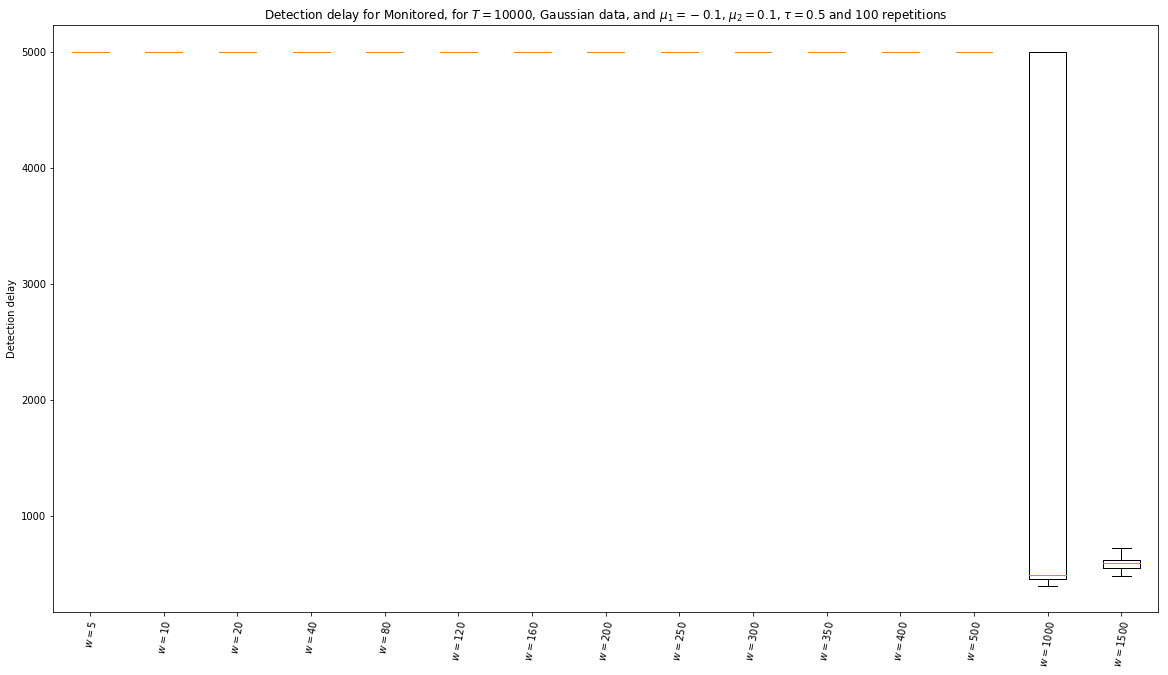

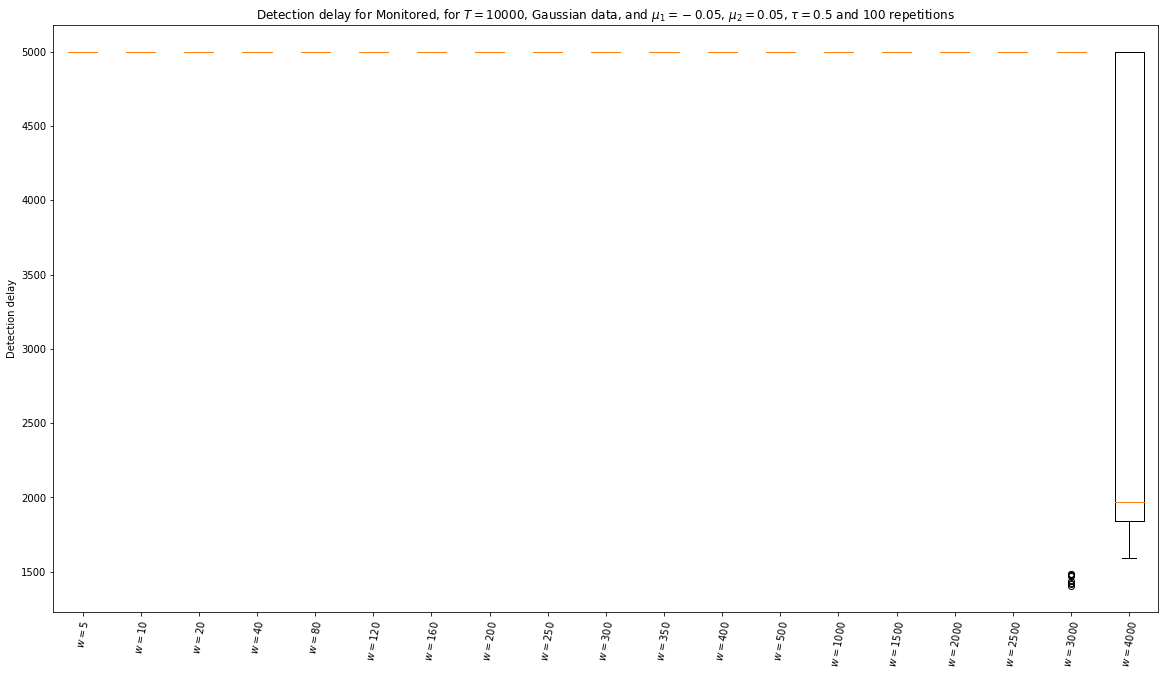

5.4 Experiments for Monitored

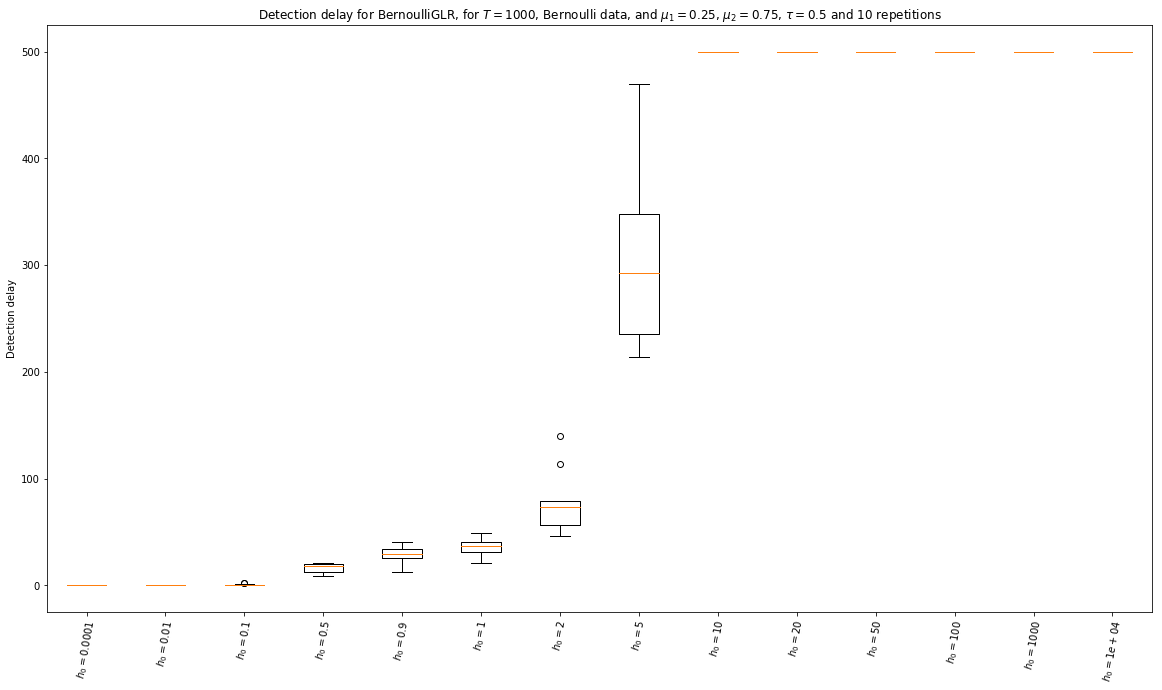

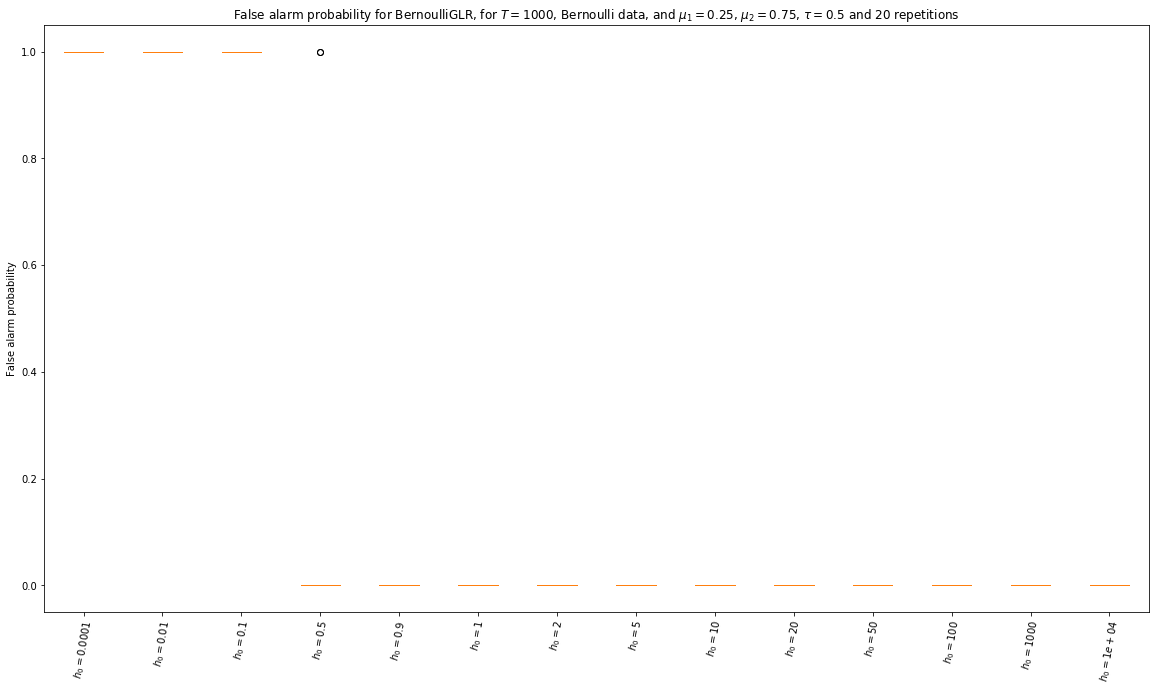

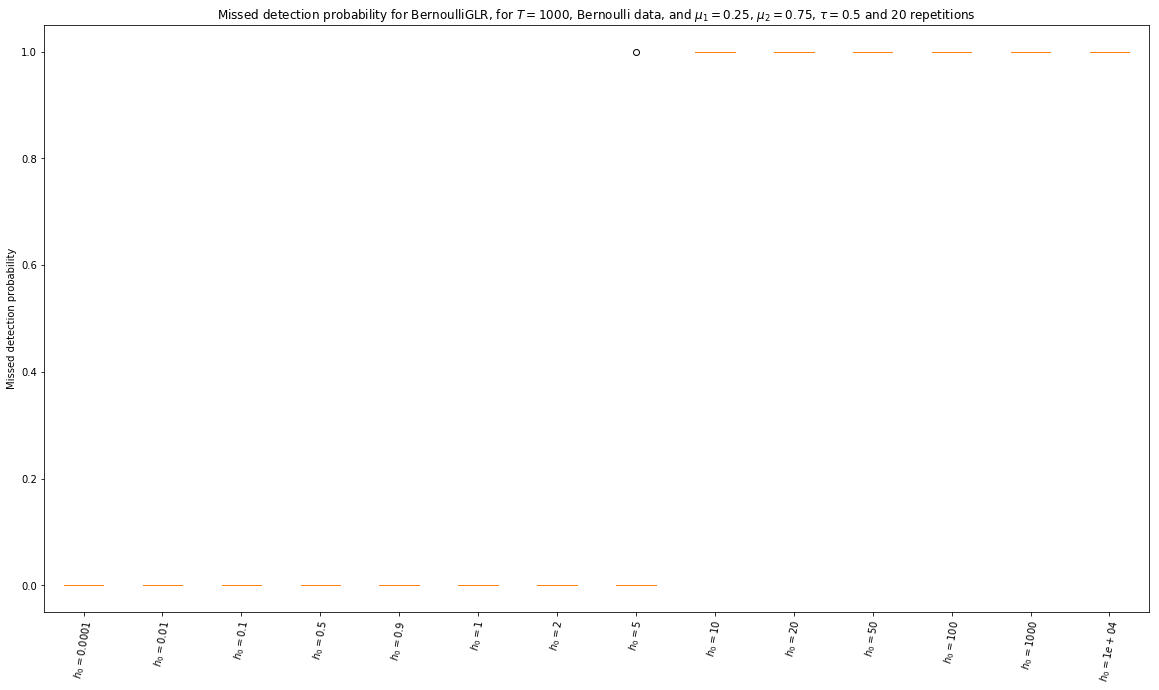

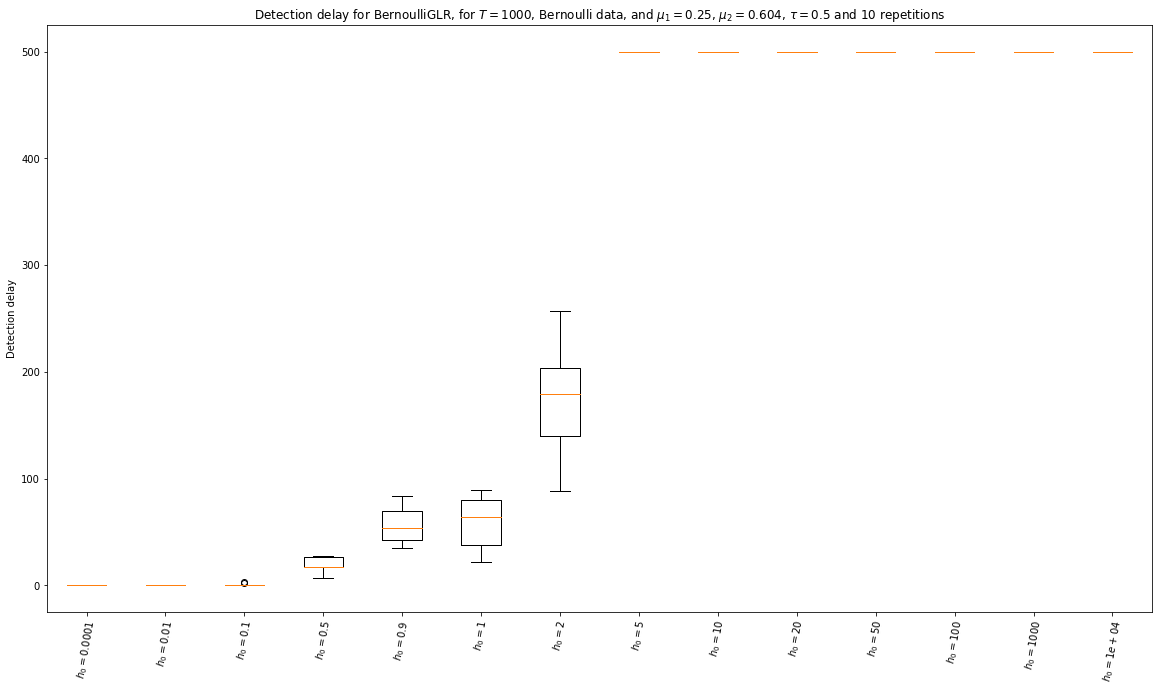

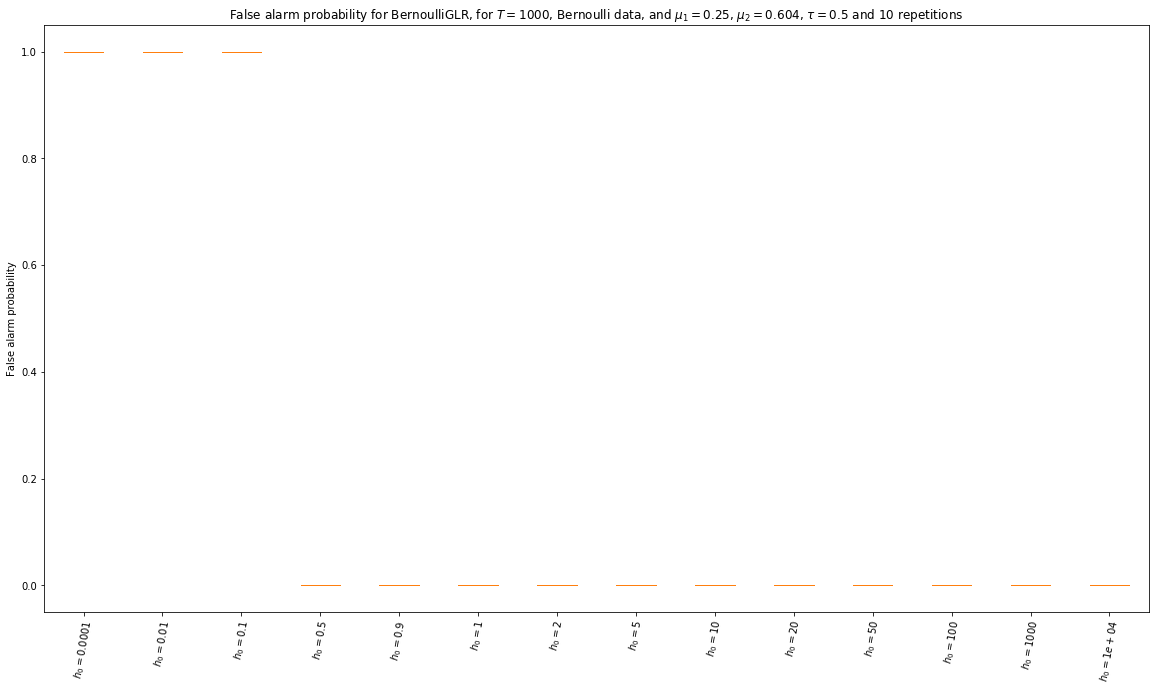

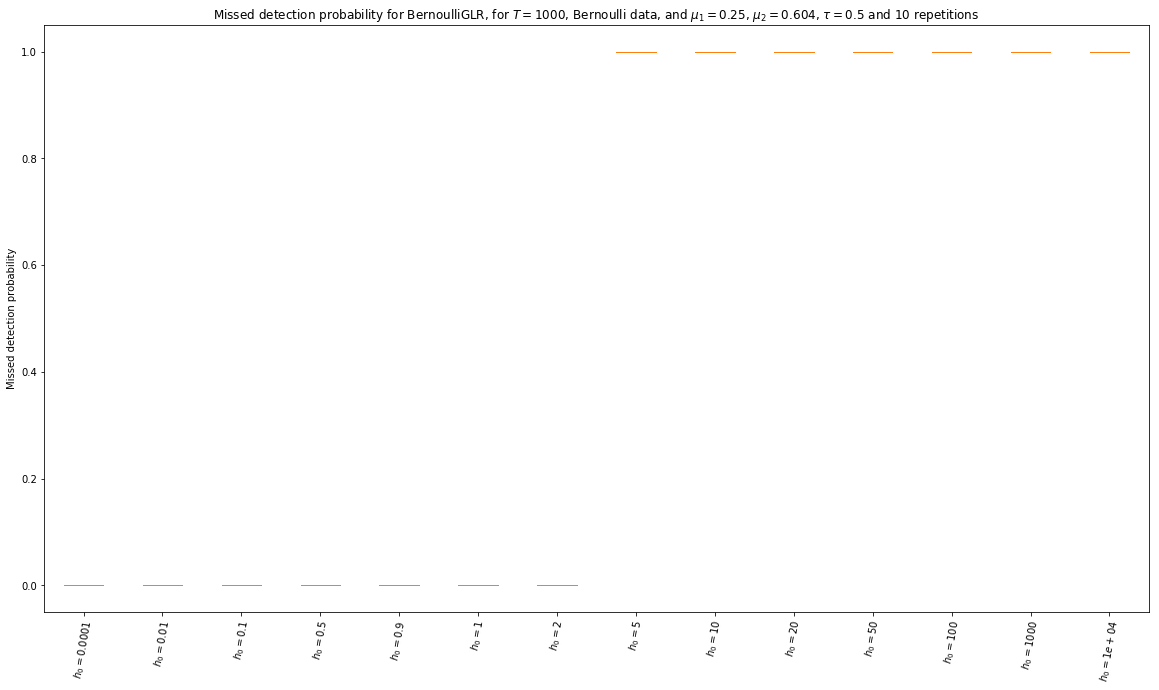

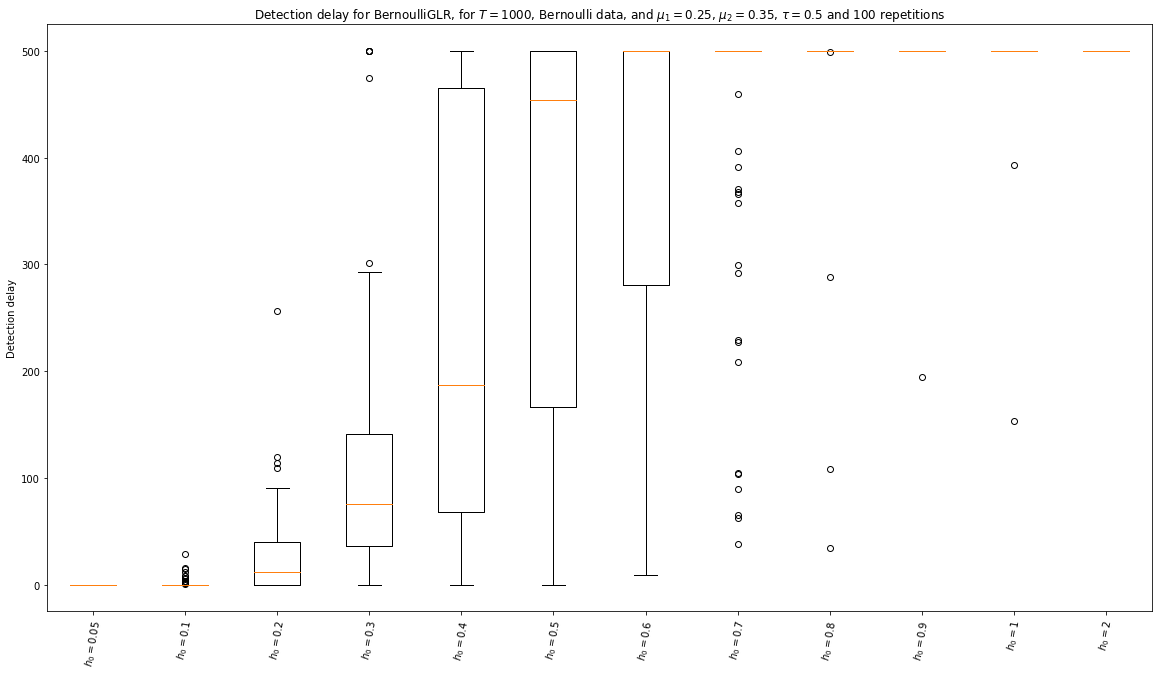

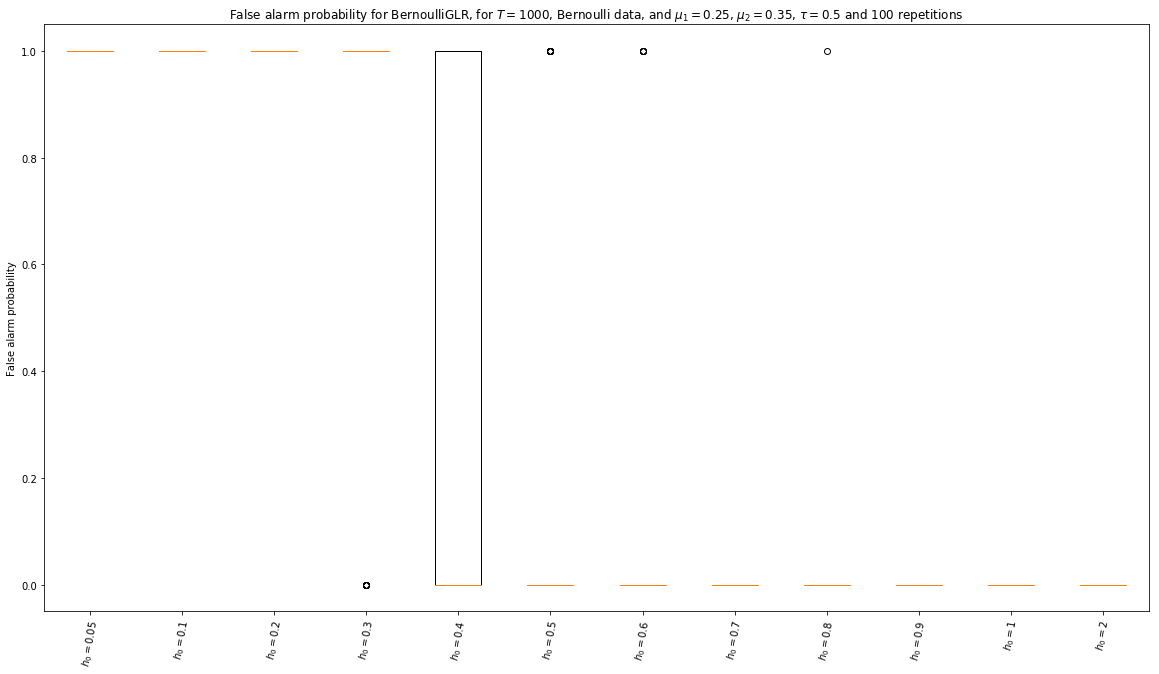

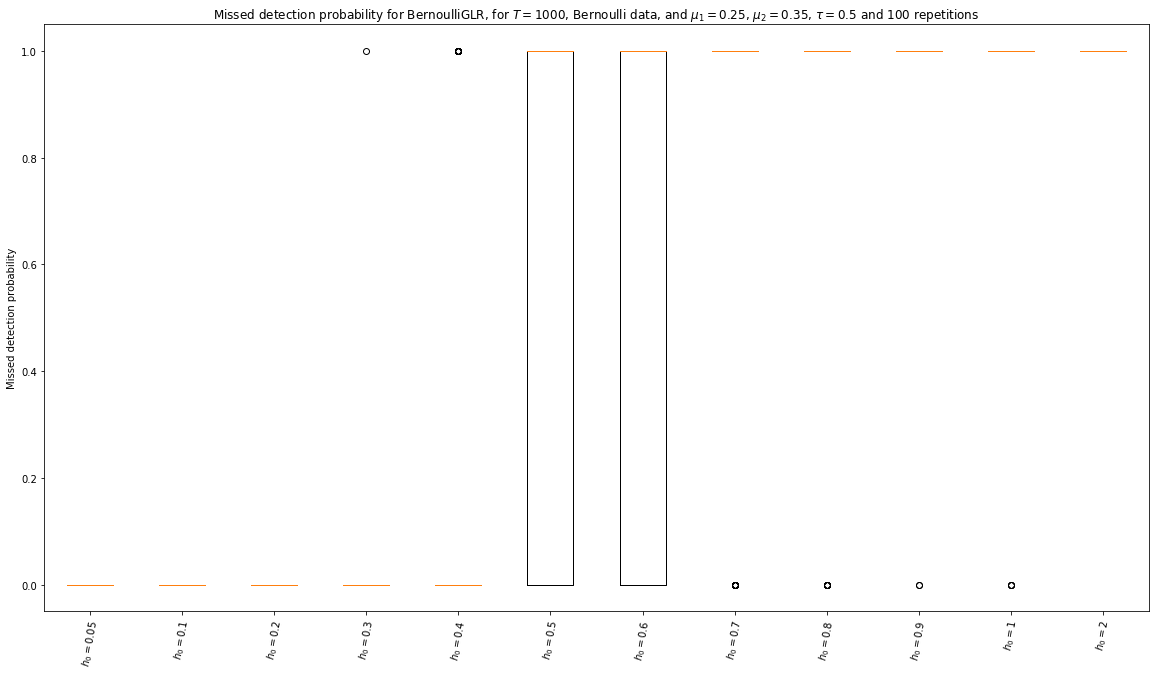

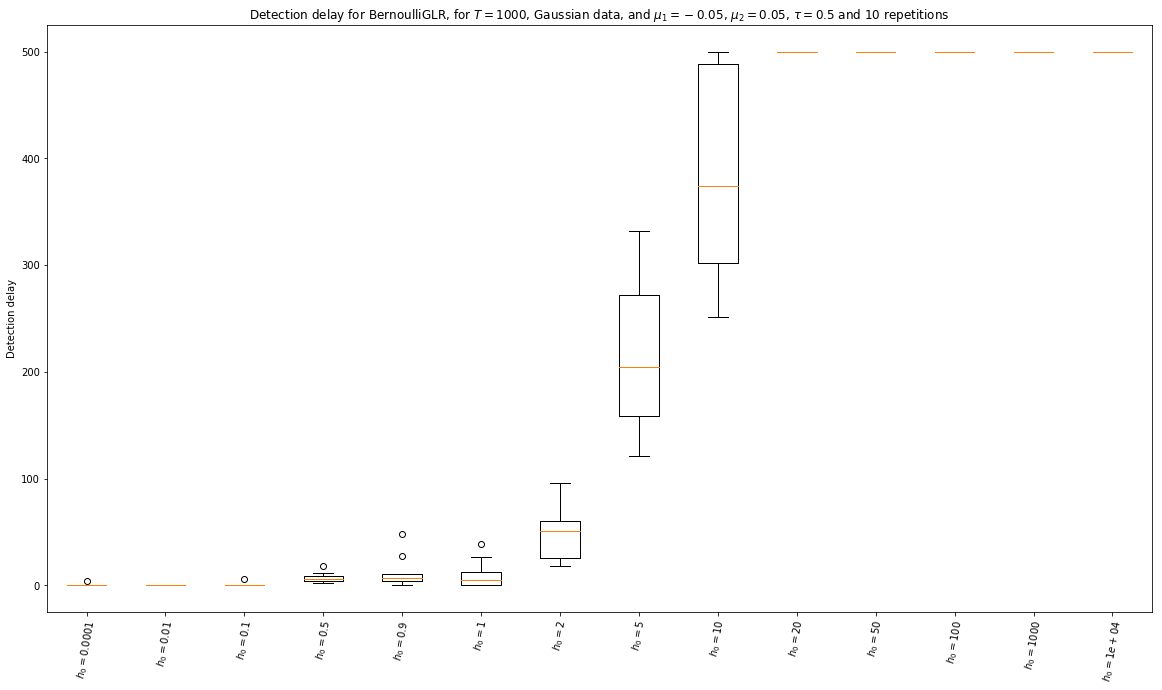

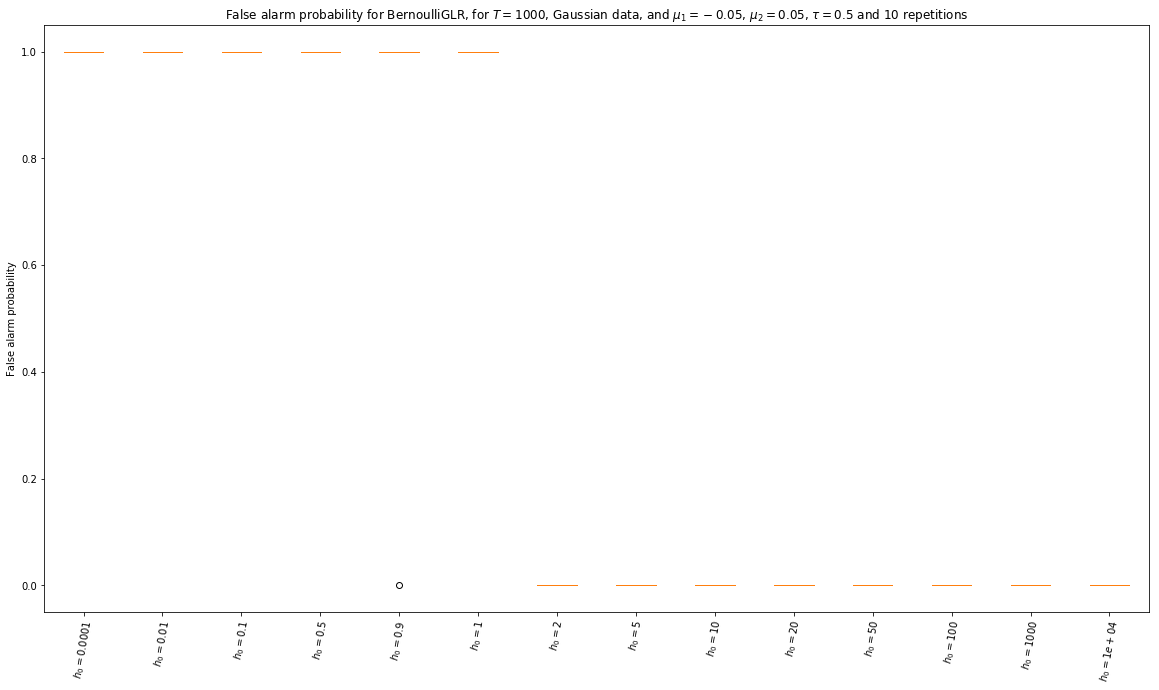

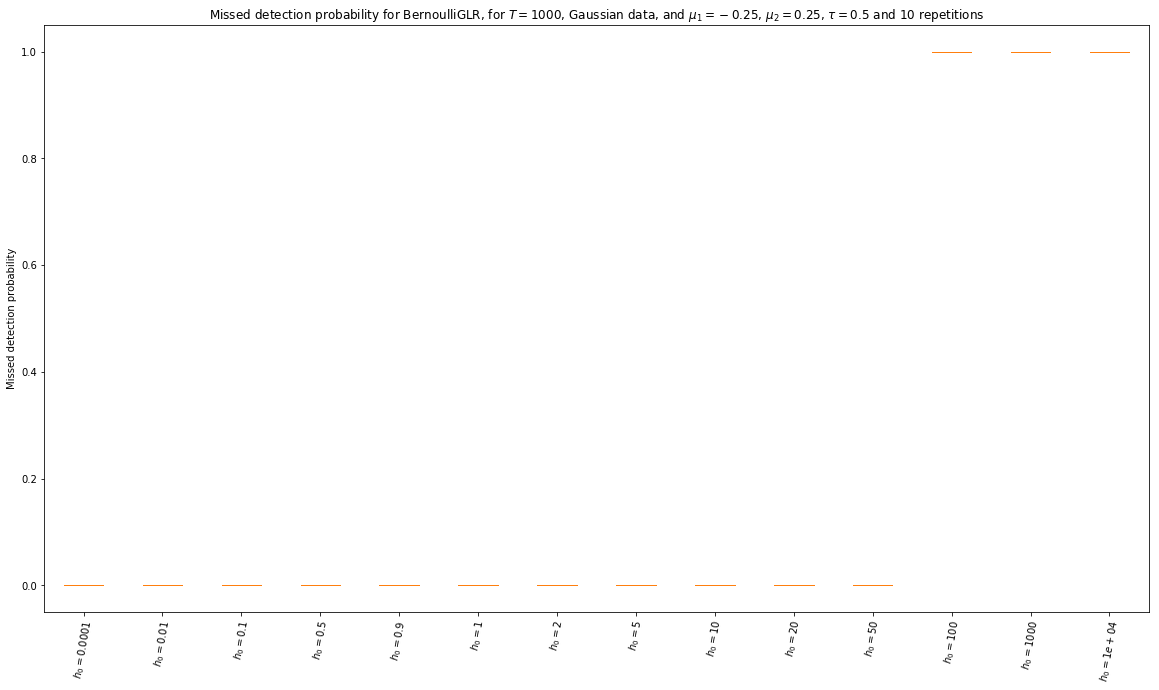

5.5 Experiments for Bernoulli GLR

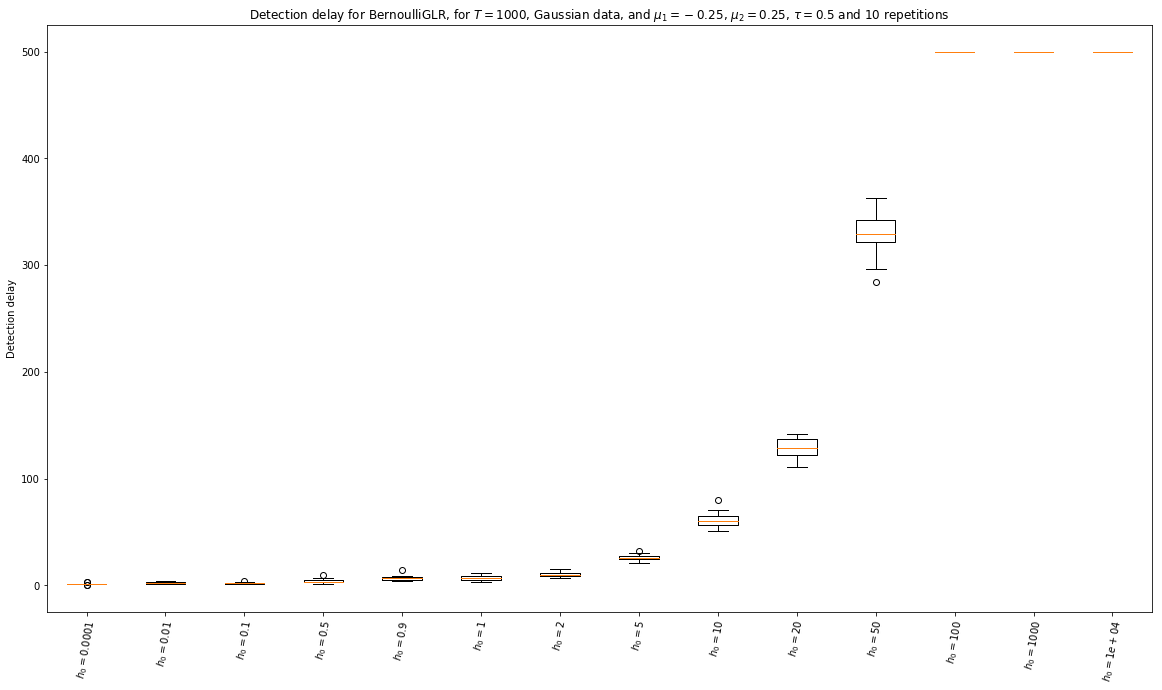

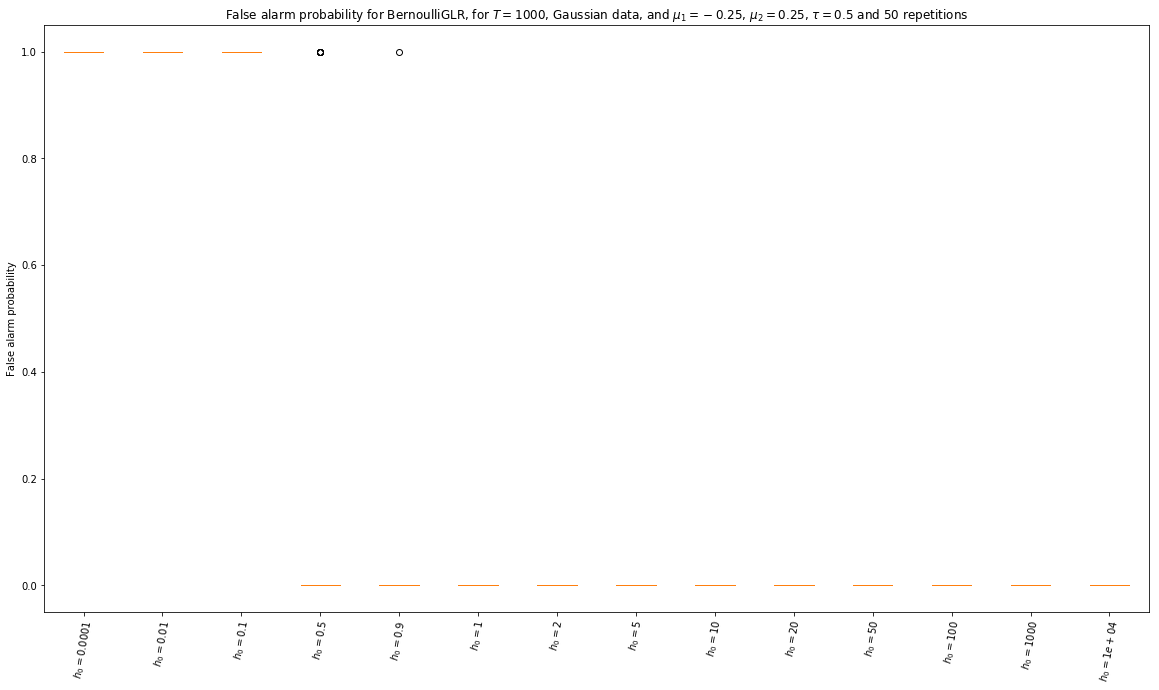

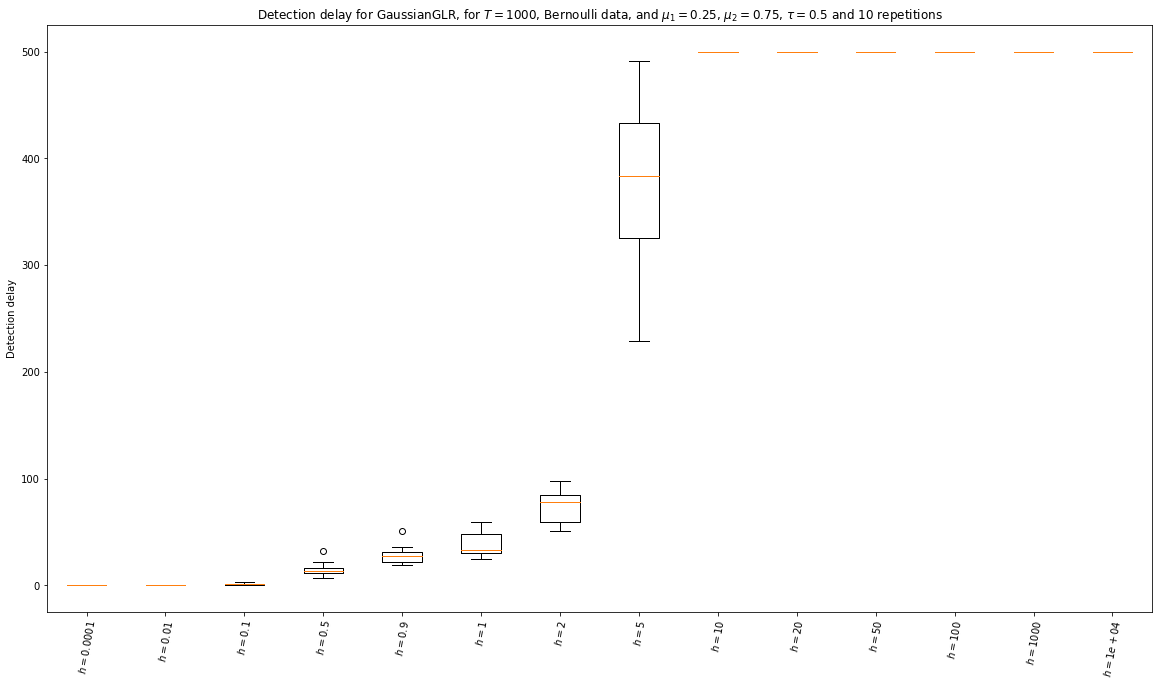

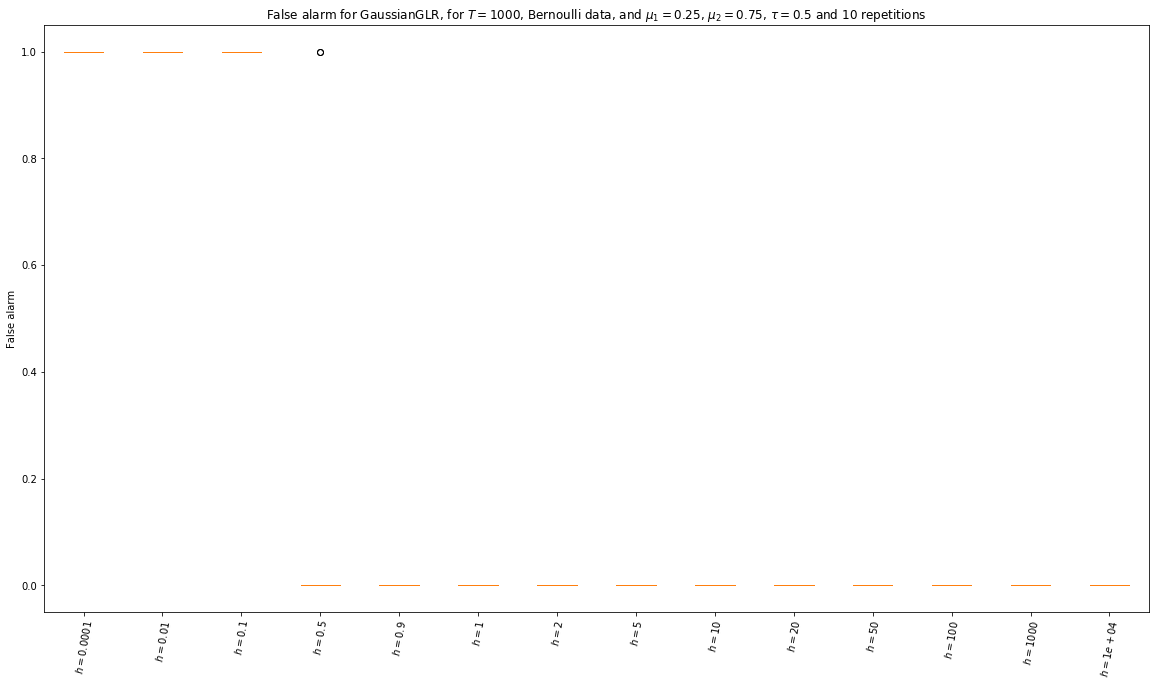

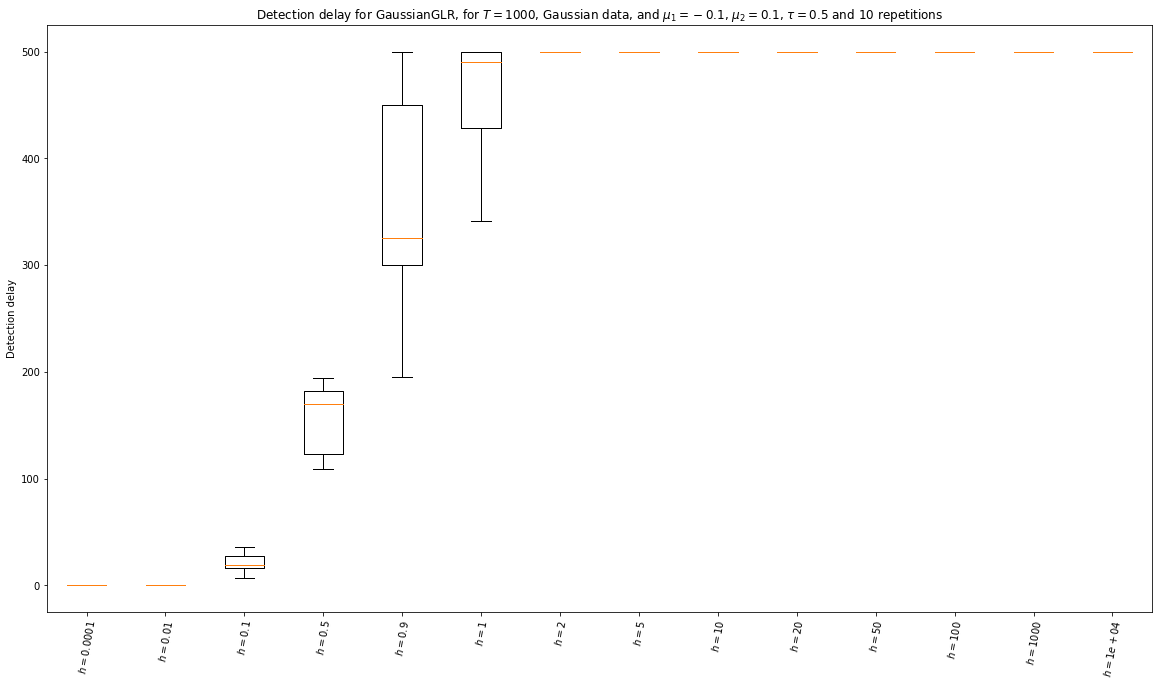

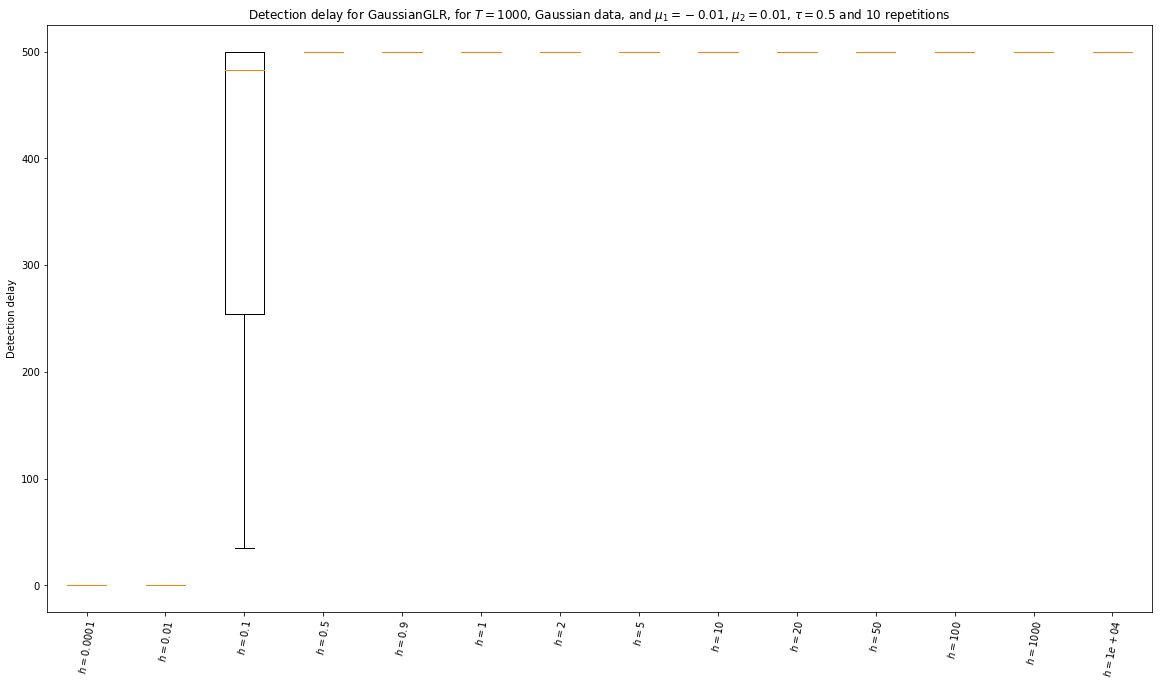

5.6 Experiments for Gaussian GLR

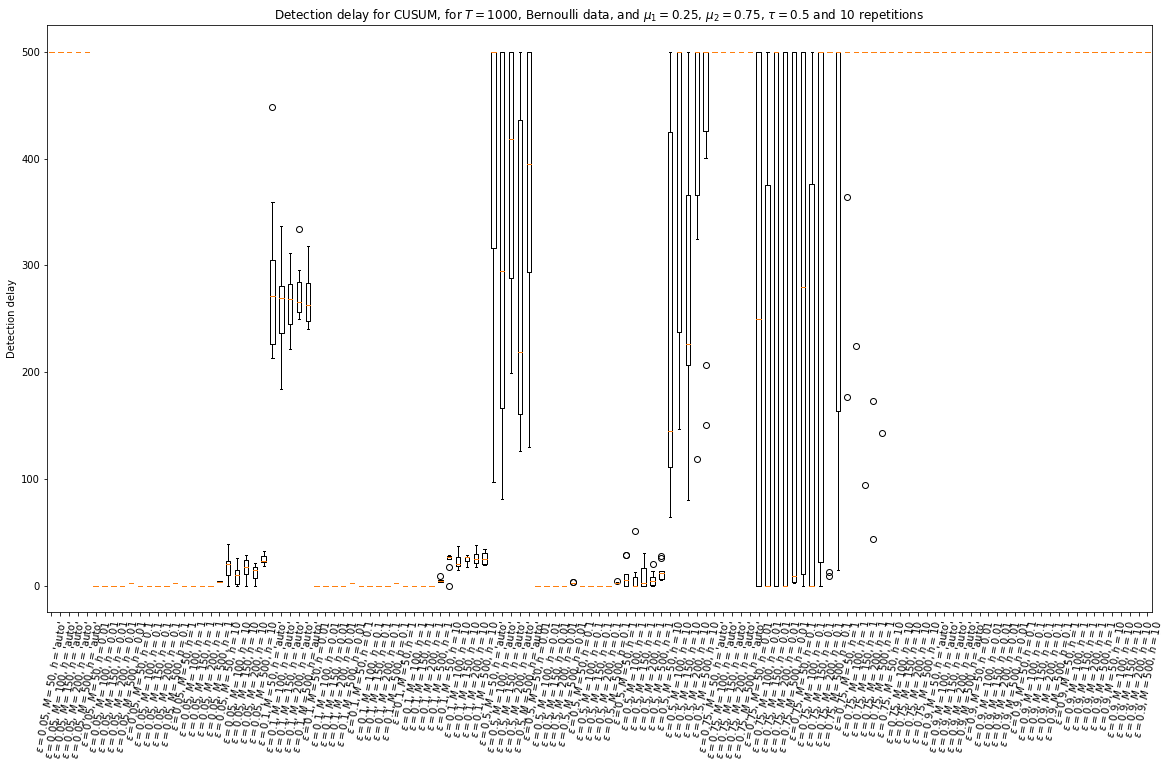

5.7 Experiments for CUSUM

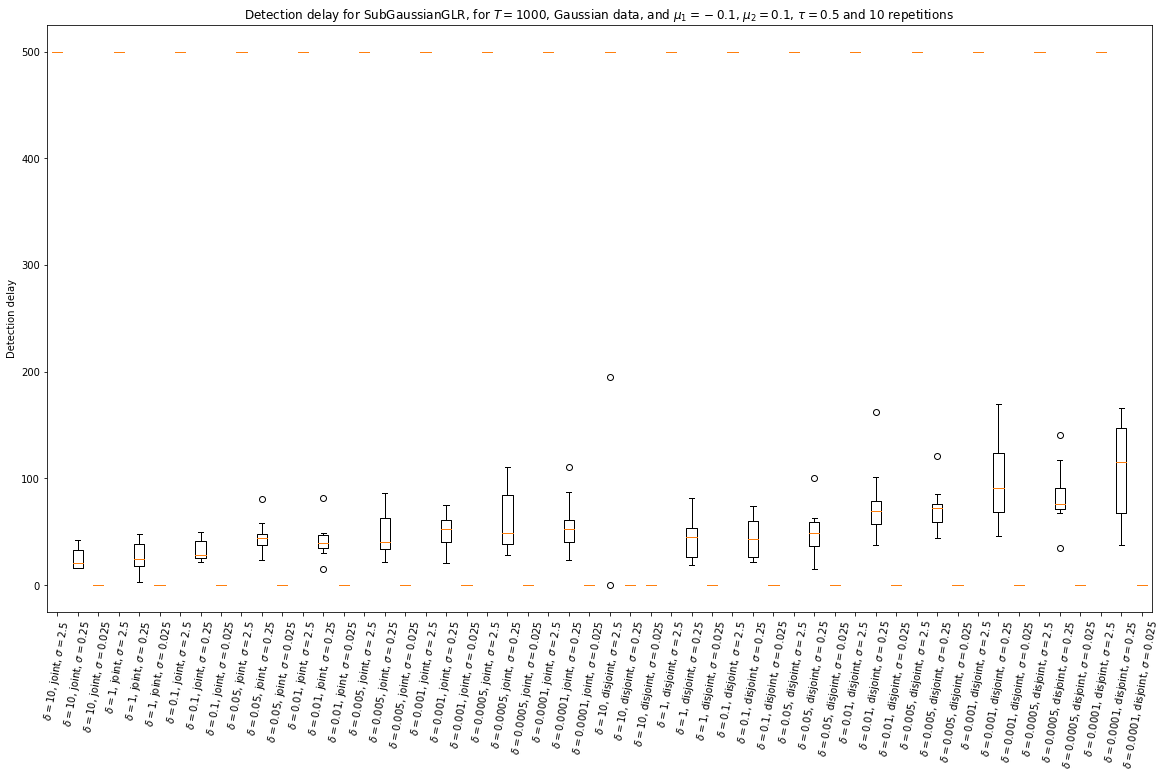

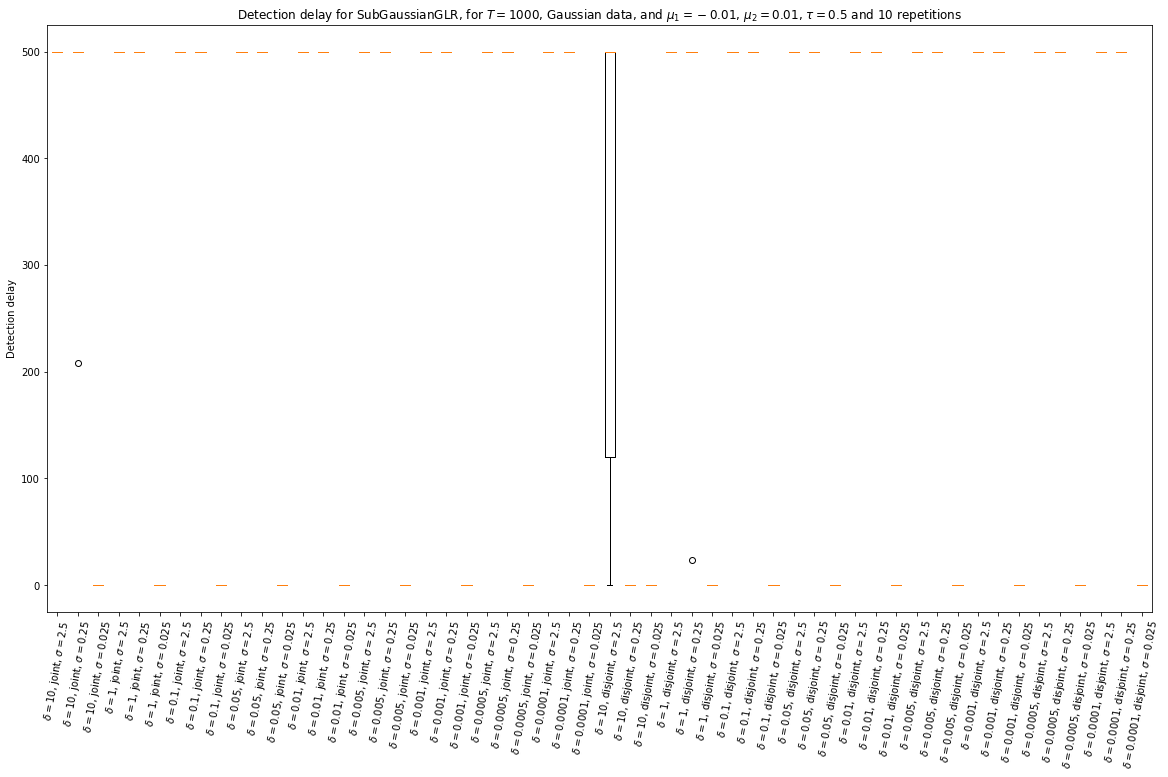

5.8 Experiments for Sub-Gaussian GLR

5.9 Other experiments

6 Conclusions

Requirements and helper functions

Requirements

This notebook requires `numpy <https://www.numpy.org/>`__ and `matplotlib <https://matplotlib.org/>`__ to be installed. One function needs a function from `scipy.special <https://docs.scipy.org/doc/scipy/reference/generated/scipy.special.comb.html#scipy.special.comb>`__. `joblib <https://joblib.readthedocs.io/en/latest/>`__ is used for parallel computations (at the end).

The performance bottlenecks of the main functions are very simple functions, for which we can write efficient versions using either `numba.jit <https://numba.pydata.org/numba-doc/latest/reference/jit-compilation.html#numba.jit>`__ or `cython <https://cython.readthedocs.io/en/latest/src/quickstart/build.html#jupyter-notebook>`__.

[3]:

!pip3 install watermark numpy scipy matplotlib joblib numba cython

%load_ext watermark

%watermark -v -m -p numpy,scipy,matplotlib,joblib,numba,cython -a "Lilian Besson and Emilie Kaufmann"

Requirement already satisfied: watermark in /usr/local/lib/python3.6/dist-packages (1.5.0)

Requirement already satisfied: numpy in /usr/local/lib/python3.6/dist-packages (1.15.4)

Requirement already satisfied: scipy in /usr/local/lib/python3.6/dist-packages (1.1.0)

Requirement already satisfied: matplotlib in /usr/local/lib/python3.6/dist-packages (3.0.2)

Requirement already satisfied: joblib in /usr/local/lib/python3.6/dist-packages (0.12.1)

Requirement already satisfied: numba in /usr/local/lib/python3.6/dist-packages (0.41.0)

Requirement already satisfied: cython in /usr/local/lib/python3.6/dist-packages (0.29.1)

Requirement already satisfied: ipython in /usr/local/lib/python3.6/dist-packages (from watermark) (7.2.0)

Requirement already satisfied: cycler>=0.10 in /usr/local/lib/python3.6/dist-packages (from matplotlib) (0.10.0)

Requirement already satisfied: kiwisolver>=1.0.1 in /usr/local/lib/python3.6/dist-packages (from matplotlib) (1.0.1)

Requirement already satisfied: pyparsing!=2.0.4,!=2.1.2,!=2.1.6,>=2.0.1 in /usr/local/lib/python3.6/dist-packages (from matplotlib) (2.3.0)

Requirement already satisfied: python-dateutil>=2.1 in /usr/local/lib/python3.6/dist-packages (from matplotlib) (2.7.3)

Requirement already satisfied: llvmlite>=0.26.0dev0 in /usr/local/lib/python3.6/dist-packages (from numba) (0.26.0)

Requirement already satisfied: prompt-toolkit<2.1.0,>=2.0.0 in /usr/local/lib/python3.6/dist-packages (from ipython->watermark) (2.0.7)

Requirement already satisfied: jedi>=0.10 in /usr/local/lib/python3.6/dist-packages (from ipython->watermark) (0.12.1)

Requirement already satisfied: pygments in /usr/local/lib/python3.6/dist-packages (from ipython->watermark) (2.2.0)

Requirement already satisfied: decorator in /usr/local/lib/python3.6/dist-packages (from ipython->watermark) (4.3.0)

Requirement already satisfied: setuptools>=18.5 in /usr/local/lib/python3.6/dist-packages (from ipython->watermark) (40.5.0)

Requirement already satisfied: traitlets>=4.2 in /usr/local/lib/python3.6/dist-packages (from ipython->watermark) (4.3.2)

Requirement already satisfied: pickleshare in /usr/local/lib/python3.6/dist-packages (from ipython->watermark) (0.7.5)

Requirement already satisfied: pexpect; sys_platform != "win32" in /usr/local/lib/python3.6/dist-packages (from ipython->watermark) (4.6.0)

Requirement already satisfied: backcall in /usr/local/lib/python3.6/dist-packages (from ipython->watermark) (0.1.0)

Requirement already satisfied: six in /home/lilian/.local/lib/python3.6/site-packages (from cycler>=0.10->matplotlib) (1.11.0)

Requirement already satisfied: wcwidth in /usr/local/lib/python3.6/dist-packages (from prompt-toolkit<2.1.0,>=2.0.0->ipython->watermark) (0.1.7)

Requirement already satisfied: parso>=0.3.0 in /usr/local/lib/python3.6/dist-packages (from jedi>=0.10->ipython->watermark) (0.3.1)

Requirement already satisfied: ipython-genutils in /usr/local/lib/python3.6/dist-packages (from traitlets>=4.2->ipython->watermark) (0.2.0)

Requirement already satisfied: ptyprocess>=0.5 in /usr/local/lib/python3.6/dist-packages (from pexpect; sys_platform != "win32"->ipython->watermark) (0.6.0)

The watermark extension is already loaded. To reload it, use:

%reload_ext watermark

Lilian Besson and Emilie Kaufmann

CPython 3.6.7

IPython 7.2.0

numpy 1.15.4

scipy 1.1.0

matplotlib 3.0.2

joblib 0.12.1

numba 0.41.0

cython 0.29.1

compiler : GCC 8.2.0

system : Linux

release : 4.15.0-42-generic

machine : x86_64

processor : x86_64

CPU cores : 4

interpreter: 64bit

[4]:

import numpy as np

[5]:

try:
    from tqdm import tqdm_notebook as tqdm
except ImportError:
    # Fallback when tqdm is not installed: iterate without a progress bar.
    def tqdm(iterator, *args, **kwargs):
        return iterator

Mathematical notations for stationary problems

We consider \(K \geq 1\) arms, which are distributions \(\nu_k\). We focus on Bernoulli distributions, which are characterized by their means, \(\nu_k = \mathcal{B}(\mu_k)\) for \(\mu_k\in[0,1]\). A stationary bandit problem is defined here by the vector \([\mu_1,\dots,\mu_K]\).

For a fixed problem and a horizon \(T\in\mathbb{N}\), \(T\geq1\), we draw samples from the \(K\) distributions to get data: \(\forall t, r_k(t) \sim \nu_k\), i.e., \(\mathbb{P}(r_k(t) = 1) = \mu_k\) and \(r_k(t) \in \{0,1\}\).

Generating stationary data

Here we give some examples of stationary problems and examples of data we can draw from them.

[13]:

def bernoulli_samples(means, horizon=1000):
    if np.size(means) == 1:
        return np.random.binomial(1, means, size=horizon)
    else:
        results = np.zeros((np.size(means), horizon))
        for i, mean in enumerate(means):
            results[i] = np.random.binomial(1, mean, size=horizon)
        return results

[14]:

problem1 = [0.5]

bernoulli_samples(problem1, horizon=20)

[14]:

array([0, 1, 1, 0, 1, 0, 1, 0, 1, 0, 0, 0, 1, 0, 1, 1, 0, 0, 0, 0])

[27]:

%timeit bernoulli_samples(problem1, horizon=1000)

61.5 µs ± 2.59 µs per loop (mean ± std. dev. of 7 runs, 10000 loops each)
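The loop over the arms can also be avoided with broadcasting. The following is only a sketch, not the function used in the rest of this notebook: the name `bernoulli_samples_vectorized` and the `seed` argument are hypothetical, and it assumes a NumPy version recent enough to provide `np.random.default_rng` (1.17 or later, newer than the version printed by the watermark above).

```python
import numpy as np

def bernoulli_samples_vectorized(means, horizon=1000, seed=None):
    """Draw a (K, horizon) array of Bernoulli samples, one row per arm."""
    rng = np.random.default_rng(seed)  # assumption: NumPy >= 1.17
    means = np.atleast_1d(np.asarray(means, dtype=float))
    # The column vector of means is broadcast against the output shape.
    return rng.binomial(1, means[:, np.newaxis], size=(means.size, horizon))

samples = bernoulli_samples_vectorized([0.1, 0.5, 0.9], horizon=2000, seed=0)
print(samples.shape)         # (3, 2000)
print(samples.mean(axis=1))  # empirical means, close to [0.1, 0.5, 0.9]
```

Unlike `bernoulli_samples`, this sketch always returns a 2D array, even for a single arm.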

Now for Gaussian data:

[49]:

sigma = 0.25 # Bernoulli are 1/4-sub Gaussian too!

[50]:

def gaussian_samples(means, horizon=1000, sigma=sigma):
    if np.size(means) == 1:
        return np.random.normal(loc=means, scale=sigma, size=horizon)
    else:
        results = np.zeros((np.size(means), horizon))
        for i, mean in enumerate(means):
            results[i] = np.random.normal(loc=mean, scale=sigma, size=horizon)
        return results

[51]:

gaussian_samples(problem1, horizon=20)

[51]:

array([ 0.62387502, 0.25629644, 0.67019174, 0.17045626, 0.64599757,

0.37851622, 0.7365035 , 0.59688851, 0.63485378, 0.59576263,

0.27670489, 0.69860233, 0.49817269, 0.26672791, -0.05480486,

0.43916024, 0.4352945 , 0.630749 , -0.11451167, 0.11158323])

[52]:

%timeit gaussian_samples(problem1, horizon=1000)

75.8 µs ± 4.3 µs per loop (mean ± std. dev. of 7 runs, 10000 loops each)

For a bandit problem with \(K \geq 2\) arms, the goal is to design an online learning algorithm that roughly does the following:

- For time \(t=1\) to \(t=T\) (unknown horizon):

  - Algorithm \(A\) decides to draw arm \(A(t) \in\{1,\dots,K\}\),

  - Get the reward \(r(t) = r_{A(t)}(t) \sim \nu_{A(t)}\) from the (Bernoulli) distribution of that arm,

  - Give this observation of reward \(r(t)\) coming from arm \(A(t)\) to the algorithm,

  - Update the internal state of the algorithm.

An algorithm is efficient if it obtains a high (expected) sum of rewards, i.e., \(\sum_{t=1}^T r(t)\).

Note that I don’t focus on bandit algorithms here.
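To make the interaction protocol concrete, here is a minimal sketch of the loop for a deliberately naive policy that draws arms uniformly at random. The function name `run_uniform_policy` and its internal state (`pulls`, `sums`) are hypothetical, chosen only for this illustration, and `np.random.default_rng` assumes NumPy 1.17 or later.

```python
import numpy as np

def run_uniform_policy(means, horizon=1000, seed=0):
    """Play a Bernoulli bandit with a policy that picks arms uniformly at random."""
    rng = np.random.default_rng(seed)  # assumption: NumPy >= 1.17
    nb_arms = len(means)
    pulls = np.zeros(nb_arms, dtype=int)  # internal state: number of draws of each arm
    sums = np.zeros(nb_arms)              # internal state: sum of rewards of each arm
    total_reward = 0.0
    for t in range(horizon):
        arm = rng.integers(nb_arms)           # decision A(t)
        reward = rng.binomial(1, means[arm])  # reward r(t) ~ B(mu_{A(t)})
        pulls[arm] += 1                       # give the observation to the algorithm
        sums[arm] += reward                   # and update its internal state
        total_reward += reward
    return total_reward, pulls, sums

total, pulls, sums = run_uniform_policy([0.1, 0.5, 0.9], horizon=3000)
print(total / 3000)  # close to the average of the means (0.5), well below 0.9
```

A real bandit algorithm would use `pulls` and `sums` to favor the arms that look best so far, instead of ignoring them as this uniform policy does.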

[9]:

problem2 = [0.1, 0.5, 0.9]

bernoulli_samples(problem2, horizon=20)

[9]:

array([[0., 0., 0., 0., 0., 0., 0., 0., 0., 1., 0., 0., 0., 0., 0., 0.,

0., 0., 0., 0.],

[1., 1., 0., 1., 0., 0., 1., 0., 1., 0., 1., 1., 1., 0., 0., 1.,

0., 0., 1., 0.],

[1., 0., 1., 1., 1., 0., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1.,

0., 1., 1., 1.]])

[10]:

problem2 = [0.1, 0.5, 0.9]

gaussian_samples(problem2, horizon=20)

[10]:

array([[ 0.46905853, 0.08312363, -0.23166553, 0.59750825, 0.23356612,

-0.02132874, -0.26851831, 0.18202564, 0.37409142, -0.14397223,

-0.22241626, -0.18888125, -0.13127815, 0.2314255 , 0.49433066,

0.39645143, -0.11529154, -0.13321403, 0.34457873, -0.16463127],

[ 0.09801182, 0.8898005 , 0.76216447, 0.72837837, 0.25624864,

0.71225959, 0.56964125, 0.6309913 , 1.06315774, 0.28064027,

0.2251329 , 0.61036472, 0.43995415, 0.57338936, 0.74570767,

0.89390964, 0.50845914, 0.62240528, 0.27255829, 0.03454648],

[ 1.04280525, 0.38307288, 0.81820526, 0.95947436, 1.21587846,

0.82568302, 0.60475185, 1.09188758, 1.40998237, 0.7291101 ,

0.94030203, 0.95715236, 0.6893489 , 1.26446965, 0.80080259,

0.96966515, 0.79117913, 0.48041062, 0.92280092, 1.27883349]])

For instance on these data, the best arm is clearly the third one, with expected reward of \(\mu^* = \max_k \mu_k = 0.9\).

Mathematical notations for piecewise stationary problems

Now we fix the horizon \(T\in\mathbb{N}\), \(T\geq1\), and we also consider a set of \(\Upsilon_T\) breakpoints, \(\tau_1,\dots,\tau_{\Upsilon_T} \in\{1,\dots,T\}\). We denote \(\tau_0 = 0\) and \(\tau_{\Upsilon_T+1} = T\) for convenience of notation. We can assume that breakpoints are far “enough” from each other, for instance that there exists an integer \(N\in\mathbb{N},N\geq1\) such that \(\min_{0 \leq i \leq \Upsilon_T} (\tau_{i+1} - \tau_i) \geq N K\). That is, on each stationary interval, a uniform sampling of the \(K\) arms gives at least \(N\) samples per arm.

Now, in any stationary interval \([\tau_i + 1, \tau_{i+1}]\), the \(K \geq 1\) arms are distributions \(\nu_k^{(i)}\). We focus on Bernoulli distributions, which are characterized by their means, \(\nu_k^{(i)} := \mathcal{B}(\mu_k^{(i)})\) for \(\mu_k^{(i)}\in[0,1]\). A piecewise stationary bandit problem is defined here by the vector \([\mu_k^{(i)}]_{1\leq k \leq K, 1 \leq i \leq \Upsilon_T}\).

For a fixed problem and a horizon \(T\in\mathbb{N}\), \(T\geq1\), we draw samples from the \(K\) distributions to get data: \(\forall t, r_k(t) \sim \nu_k^{(i)}\) for \(i\) the unique index of stationary interval such that \(t\in[\tau_i + 1, \tau_{i+1}]\).
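As a small illustration of these notations, the sketch below finds the stationary interval containing a time \(t\) and the smallest gap \(\tau_{i+1} - \tau_i\). Both helper names are hypothetical, and the breakpoints are assumed to be given as a sorted list of 1-based times \([\tau_1, \dots, \tau_{\Upsilon_T}]\), with intervals indexed from \(i = 0\).

```python
import numpy as np

def stationary_interval_index(t, breakpoints):
    """Index i such that tau_i + 1 <= t <= tau_{i+1}, with tau_0 = 0."""
    # side="left" counts the breakpoints strictly smaller than t.
    return int(np.searchsorted(breakpoints, t, side="left"))

def min_gap(breakpoints, horizon):
    """Smallest length tau_{i+1} - tau_i over all stationary intervals."""
    taus = np.concatenate(([0], breakpoints, [horizon]))
    return int(np.min(np.diff(taus)))

breakpoints = [500, 1000]  # tau_1 and tau_2, for a horizon T = 1500
print(stationary_interval_index(500, breakpoints))   # 0: t = 500 is still in the first interval
print(stationary_interval_index(501, breakpoints))   # 1
print(stationary_interval_index(1001, breakpoints))  # 2
print(min_gap(breakpoints, horizon=1500))            # 500
```

With \(K = 1\) arm, the spacing assumption then holds for any \(N \leq 500\).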

Generating fake piecewise stationary data

The format to define piecewise stationary problem will be the following. It is compact but generic!

The first example considers a single arm, with 2 uniformly spaced breakpoints:

- On the first interval, for instance from \(t=1\) to \(t=500\), that is \(\tau_1 = 500\), \(\mu_1^{(1)} = 0.1\),

- On the second interval, for instance from \(t=501\) to \(t=1000\), that is \(\tau_2 = 1000\), \(\mu_1^{(2)} = 0.5\),

- On the third interval, for instance from \(t=1001\) to \(t=1500\), \(\mu_1^{(3)} = 0.8\).

[53]:

# With 1 arm only!
problem_piecewise_0 = lambda horizon: {
    "listOfMeans": [
        [0.1],  # 0 to 499
        [0.5],  # 500 to 999
        [0.8],  # 1000 to 1499
    ],
    "changePoints": [
        int(0 * horizon / 1500.0),
        int(500 * horizon / 1500.0),
        int(1000 * horizon / 1500.0),
    ],
}

[54]:

# With 2 arms
problem_piecewise_1 = lambda horizon: {
    "listOfMeans": [
        [0.1, 0.2],  # 0 to 399
        [0.1, 0.3],  # 400 to 799
        [0.5, 0.3],  # 800 to 1199
        [0.4, 0.3],  # 1200 to 1599
        [0.3, 0.9],  # 1600 to end
    ],
    "changePoints": [
        int(0 * horizon / 2000.0),
        int(400 * horizon / 2000.0),
        int(800 * horizon / 2000.0),
        int(1200 * horizon / 2000.0),
        int(1600 * horizon / 2000.0),
    ],
}

[55]:

# With 3 arms
problem_piecewise_2 = lambda horizon: {
    "listOfMeans": [
        [0.2, 0.5, 0.9],  # 0 to 399
        [0.2, 0.2, 0.9],  # 400 to 799
        [0.2, 0.2, 0.1],  # 800 to 1199
        [0.7, 0.2, 0.1],  # 1200 to 1599
        [0.7, 0.5, 0.1],  # 1600 to end
    ],
    "changePoints": [
        int(0 * horizon / 2000.0),
        int(400 * horizon / 2000.0),
        int(800 * horizon / 2000.0),
        int(1200 * horizon / 2000.0),
        int(1600 * horizon / 2000.0),
    ],
}

[56]:

# With 3 arms
problem_piecewise_3 = lambda horizon: {
    "listOfMeans": [
        [0.4, 0.5, 0.9],  # 0 to 399
        [0.5, 0.4, 0.7],  # 400 to 799
        [0.6, 0.3, 0.5],  # 800 to 1199
        [0.7, 0.2, 0.3],  # 1200 to 1599
        [0.8, 0.1, 0.1],  # 1600 to end
    ],
    "changePoints": [
        int(0 * horizon / 2000.0),
        int(400 * horizon / 2000.0),
        int(800 * horizon / 2000.0),
        int(1200 * horizon / 2000.0),
        int(1600 * horizon / 2000.0),
    ],
}

Now we can write a utility function that transforms this compact representation into a full list of means.

[57]:

def getFullHistoryOfMeans(problem, horizon=2000):
    """Return the vector of mean of the arms, for a piece-wise stationary MAB.

    - It is a numpy array of shape (nbArms, horizon).
    """
    pb = problem(horizon)
    listOfMeans, changePoints = pb['listOfMeans'], pb['changePoints']
    nbArms = len(listOfMeans[0])
    if horizon is None:
        horizon = np.max(changePoints)
    meansOfArms = np.ones((nbArms, horizon))
    for armId in range(nbArms):
        nbChangePoint = 0
        for t in range(horizon):
            if nbChangePoint < len(changePoints) - 1 and t >= changePoints[nbChangePoint + 1]:
                nbChangePoint += 1
            meansOfArms[armId][t] = listOfMeans[nbChangePoint][armId]
    return meansOfArms

For example:

[16]:

getFullHistoryOfMeans(problem_piecewise_0, horizon=50)

[16]:

array([[0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1,

0.1, 0.1, 0.1, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5,

0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.8, 0.8, 0.8, 0.8, 0.8, 0.8,

0.8, 0.8, 0.8, 0.8, 0.8, 0.8, 0.8, 0.8, 0.8, 0.8, 0.8]])

[17]:

getFullHistoryOfMeans(problem_piecewise_1, horizon=50)

[17]:

array([[0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1,

0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5,

0.5, 0.5, 0.5, 0.5, 0.4, 0.4, 0.4, 0.4, 0.4, 0.4, 0.4, 0.4, 0.4,

0.4, 0.3, 0.3, 0.3, 0.3, 0.3, 0.3, 0.3, 0.3, 0.3, 0.3],

[0.2, 0.2, 0.2, 0.2, 0.2, 0.2, 0.2, 0.2, 0.2, 0.2, 0.3, 0.3, 0.3,

0.3, 0.3, 0.3, 0.3, 0.3, 0.3, 0.3, 0.3, 0.3, 0.3, 0.3, 0.3, 0.3,

0.3, 0.3, 0.3, 0.3, 0.3, 0.3, 0.3, 0.3, 0.3, 0.3, 0.3, 0.3, 0.3,

0.3, 0.9, 0.9, 0.9, 0.9, 0.9, 0.9, 0.9, 0.9, 0.9, 0.9]])

[18]:

getFullHistoryOfMeans(problem_piecewise_2, horizon=50)

[18]:

array([[0.2, 0.2, 0.2, 0.2, 0.2, 0.2, 0.2, 0.2, 0.2, 0.2, 0.2, 0.2, 0.2,

0.2, 0.2, 0.2, 0.2, 0.2, 0.2, 0.2, 0.2, 0.2, 0.2, 0.2, 0.2, 0.2,

0.2, 0.2, 0.2, 0.2, 0.7, 0.7, 0.7, 0.7, 0.7, 0.7, 0.7, 0.7, 0.7,

0.7, 0.7, 0.7, 0.7, 0.7, 0.7, 0.7, 0.7, 0.7, 0.7, 0.7],

[0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.2, 0.2, 0.2,

0.2, 0.2, 0.2, 0.2, 0.2, 0.2, 0.2, 0.2, 0.2, 0.2, 0.2, 0.2, 0.2,

0.2, 0.2, 0.2, 0.2, 0.2, 0.2, 0.2, 0.2, 0.2, 0.2, 0.2, 0.2, 0.2,

0.2, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5],

[0.9, 0.9, 0.9, 0.9, 0.9, 0.9, 0.9, 0.9, 0.9, 0.9, 0.9, 0.9, 0.9,

0.9, 0.9, 0.9, 0.9, 0.9, 0.9, 0.9, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1,

0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1,

0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1]])

[19]:

getFullHistoryOfMeans(problem_piecewise_3, horizon=50)

[19]:

array([[0.4, 0.4, 0.4, 0.4, 0.4, 0.4, 0.4, 0.4, 0.4, 0.4, 0.5, 0.5, 0.5,

0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.6, 0.6, 0.6, 0.6, 0.6, 0.6,

0.6, 0.6, 0.6, 0.6, 0.7, 0.7, 0.7, 0.7, 0.7, 0.7, 0.7, 0.7, 0.7,

0.7, 0.8, 0.8, 0.8, 0.8, 0.8, 0.8, 0.8, 0.8, 0.8, 0.8],

[0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.4, 0.4, 0.4,

0.4, 0.4, 0.4, 0.4, 0.4, 0.4, 0.4, 0.3, 0.3, 0.3, 0.3, 0.3, 0.3,

0.3, 0.3, 0.3, 0.3, 0.2, 0.2, 0.2, 0.2, 0.2, 0.2, 0.2, 0.2, 0.2,

0.2, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1],

[0.9, 0.9, 0.9, 0.9, 0.9, 0.9, 0.9, 0.9, 0.9, 0.9, 0.7, 0.7, 0.7,

0.7, 0.7, 0.7, 0.7, 0.7, 0.7, 0.7, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5,

0.5, 0.5, 0.5, 0.5, 0.3, 0.3, 0.3, 0.3, 0.3, 0.3, 0.3, 0.3, 0.3,

0.3, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1]])
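The double loop in `getFullHistoryOfMeans` is easy to read but does `nbArms * horizon` Python-level assignments. As a possible alternative (a sketch, not what the rest of this notebook uses), the same array can be built with `np.repeat`. The function name `full_history_of_means` is hypothetical, and it takes the two entries of the problem dictionary directly, assuming the change points are sorted and start at 0.

```python
import numpy as np

def full_history_of_means(list_of_means, change_points, horizon):
    """Array of shape (nbArms, horizon) holding the mean of each arm at each time.

    `change_points` lists the 0-based first time step of each stationary interval.
    """
    starts = np.minimum(np.asarray(change_points, dtype=int), horizon)
    ends = np.append(starts[1:], horizon)
    lengths = ends - starts                         # length of each stationary interval
    means = np.asarray(list_of_means, dtype=float)  # shape (nbIntervals, nbArms)
    # Repeat each row of means for the length of its interval, then put arms first.
    return np.repeat(means, lengths, axis=0).T

# Same setting as problem_piecewise_0 with horizon=50: change points at 0, 16 and 33.
history = full_history_of_means([[0.1], [0.5], [0.8]], [0, 16, 33], horizon=50)
print(history.shape)      # (1, 50)
print(history[0, 14:18])  # [0.1 0.1 0.5 0.5]
```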

And now we need to be able to generate samples from such distributions.

[58]:

def piecewise_bernoulli_samples(problem, horizon=1000):
    fullMeans = getFullHistoryOfMeans(problem, horizon=horizon)
    nbArms, horizon = np.shape(fullMeans)
    results = np.zeros((nbArms, horizon))
    for i in range(nbArms):
        mean_i = fullMeans[i, :]
        for t in range(horizon):
            mean_i_t = max(0, min(1, mean_i[t]))  # crop to [0, 1] !
            results[i, t] = np.random.binomial(1, mean_i_t)
    return results

[59]:

def piecewise_gaussian_samples(problem, horizon=1000, sigma=sigma):
    fullMeans = getFullHistoryOfMeans(problem, horizon=horizon)
    nbArms, horizon = np.shape(fullMeans)
    results = np.zeros((nbArms, horizon))
    for i in range(nbArms):
        mean_i = fullMeans[i, :]
        for t in range(horizon):
            mean_i_t = mean_i[t]
            # draw a scalar (no size argument) so it can be stored in a single cell
            results[i, t] = np.random.normal(loc=mean_i_t, scale=sigma)
    return results

Examples:

[22]:

getFullHistoryOfMeans(problem_piecewise_0, horizon=100)

piecewise_bernoulli_samples(problem_piecewise_0, horizon=100)

[22]:

array([[0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1,

0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1,

0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5,

0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5,

0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5,

0.5, 0.8, 0.8, 0.8, 0.8, 0.8, 0.8, 0.8, 0.8, 0.8, 0.8, 0.8, 0.8,

0.8, 0.8, 0.8, 0.8, 0.8, 0.8, 0.8, 0.8, 0.8, 0.8, 0.8, 0.8, 0.8,

0.8, 0.8, 0.8, 0.8, 0.8, 0.8, 0.8, 0.8, 0.8]])

[22]:

array([[0., 1., 0., 1., 0., 1., 1., 0., 0., 0., 1., 0., 0., 0., 0., 0.,

1., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0.,

1., 1., 1., 1., 0., 0., 1., 0., 0., 0., 1., 0., 0., 1., 0., 1.,

0., 1., 1., 1., 1., 1., 1., 0., 1., 1., 1., 0., 0., 0., 1., 0.,

0., 1., 1., 1., 1., 1., 0., 1., 1., 1., 1., 1., 1., 1., 1., 1.,

1., 1., 1., 1., 1., 1., 1., 0., 1., 1., 1., 1., 1., 1., 1., 0.,

0., 1., 0., 1.]])

[23]:

piecewise_gaussian_samples(problem_piecewise_0, horizon=100)

[23]:

array([[ 0.00315559, 0.2159303 , 0.01763935, -0.09514176, 0.07699997,

0.12997326, 0.36806044, 0.26968036, -0.29470607, -0.12074206,

0.38758995, 0.09229431, -0.1390067 , 0.01674725, 0.29248761,

-0.00743648, -0.32053746, -0.05338394, -0.22795571, -0.07397954,

0.12089186, -0.05089131, 0.07538026, 0.37811067, 0.28527238,

-0.44775343, 0.05399375, 0.56363279, 0.12052245, 0.04308359,

0.05259644, -0.09940602, 0.59624864, 0.47309089, 0.73371138,

0.71548802, 0.58391054, 0.57838614, 0.84475862, 0.17150277,

0.08094113, 0.12821665, 0.9622493 , 0.46279281, 0.69436121,

0.67516175, 0.63060937, 0.3242004 , 0.46987869, 0.87444974,

0.72351635, 0.13288009, 0.60558045, 0.42477301, 0.08474411,

0.28108802, 0.38570242, 0.16206467, 0.45767589, 0.62731769,

0.50540122, 0.09523314, 0.84005644, 0.29788404, 0.22493549,

0.43068172, 1.01212527, 0.66926627, 1.12914167, 0.56228928,

0.29419603, 0.83918851, 0.55743231, 1.2773794 , 0.47145187,

0.5581184 , 0.69071478, 0.78587582, 0.66516129, 0.13434453,

0.70156 , 1.00476689, 0.70888586, 0.61272042, 0.47476143,

0.52100125, 0.54323956, 0.84789493, 0.54069643, 1.35941397,

0.93167352, 0.85845656, 1.00137879, 0.97582926, 0.83642737,

0.63083642, 0.78315512, 0.92622805, 0.63902527, 0.48292036]])

We easily spot the (approximate) location of the breakpoints!

Another example:

[24]:

piecewise_bernoulli_samples(problem_piecewise_1, horizon=100)

[24]:

array([[0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 1.,

0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 1., 0., 0., 0., 0., 0.,

0., 0., 0., 0., 0., 0., 1., 1., 0., 0., 0., 0., 0., 1., 0., 1.,

0., 0., 0., 0., 1., 1., 1., 1., 1., 0., 0., 1., 1., 0., 0., 1.,

0., 1., 0., 1., 1., 0., 1., 0., 0., 0., 0., 1., 0., 0., 0., 0.,

0., 1., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0.,

0., 1., 1., 1.],

[0., 0., 0., 0., 1., 0., 0., 0., 0., 0., 0., 1., 1., 0., 0., 0.,

0., 0., 0., 0., 0., 0., 1., 1., 1., 0., 0., 0., 1., 0., 0., 1.,

1., 0., 1., 0., 1., 0., 0., 1., 0., 0., 1., 0., 1., 0., 0., 0.,

0., 0., 0., 0., 1., 0., 0., 1., 0., 0., 1., 0., 1., 0., 0., 0.,

1., 0., 1., 0., 0., 1., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0.,

1., 1., 1., 1., 1., 1., 1., 0., 1., 1., 1., 1., 1., 1., 1., 1.,

1., 1., 1., 1.]])

[25]:

piecewise_gaussian_samples(problem_piecewise_1, horizon=20)

[25]:

array([[-0.03440385, 0.35339867, 0.39487031, 0.03900667, -0.04061467,

0.42847871, 0.12854273, 0.11698151, 0.53333388, 0.55173192,

0.24042019, 0.6838909 , 0.64939741, 0.9333824 , 0.9194079 ,

0.49387316, 0.32736459, 0.2525435 , 0.37381781, 0.55302675],

[ 0.28821344, 0.18003026, 0.25372301, 0.40054737, 0.55935287,

-0.38328144, 0.06330063, 0.12570344, 0.46626937, 0.12882954,

0.35432014, 0.31273292, 0.90022341, 0.23341473, 0.0748465 ,

0.4811396 , 1.02582958, 0.99077802, 1.07355786, 0.48020466]])
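Since NumPy samplers accept arrays of parameters, all the samples of a piecewise stationary problem can also be drawn in one call once the full history of means is known. This is only a sketch: the name `sample_from_mean_history` and the `seed` argument are hypothetical, and `np.random.default_rng` assumes NumPy 1.17 or later.

```python
import numpy as np

def sample_from_mean_history(full_means, sigma=None, seed=None):
    """Draw one sample per (arm, time) cell of `full_means` in a single NumPy call.

    Bernoulli samples if `sigma` is None, Gaussian samples of standard
    deviation `sigma` otherwise.
    """
    rng = np.random.default_rng(seed)  # assumption: NumPy >= 1.17
    full_means = np.asarray(full_means, dtype=float)
    if sigma is None:
        # crop the means to [0, 1], as in the Bernoulli sampler of this notebook
        return rng.binomial(1, np.clip(full_means, 0, 1)).astype(float)
    return rng.normal(loc=full_means, scale=sigma)

# One arm, three stationary intervals of 300 steps with means 0.1, 0.5 and 0.8.
means = np.repeat([[0.1, 0.5, 0.8]], [300, 300, 300], axis=1)
bernoulli_data = sample_from_mean_history(means, seed=1)
gaussian_data = sample_from_mean_history(means, sigma=0.25, seed=1)
print(bernoulli_data[0, :300].mean(), bernoulli_data[0, 600:].mean())
print(gaussian_data[0, :300].mean(), gaussian_data[0, 600:].mean())
```

The empirical means on the first and last intervals should be close to 0.1 and 0.8 respectively, for both kinds of data.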

Python implementations of some statistical tests

I will implement here the following statistical tests. I give a link to the implementation of the corresponding bandit policy in my framework `SMPyBandits <https://smpybandits.github.io/>`__:

- Monitored (based on a McDiarmid inequality), for Monitored-UCB or `M-UCB<>`__,

- CUSUM, for `CUSUM-UCB<https://smpybandits.github.io/docs/Policies.CD_UCB.html?highlight=cusum#Policies.CD_UCB.CUSUM_IndexPolicy>`__,

- PHT, for `PHT-UCB<https://smpybandits.github.io/docs/Policies.CD_UCB.html?highlight=cusum#Policies.CD_UCB.PHT_IndexPolicy>`__,

- Gaussian GLR, for `GaussianGLR-UCB<https://smpybandits.github.io/docs/Policies.CD_UCB.html?highlight=glr#Policies.CD_UCB.GaussianGLR_IndexPolicy>`__,

- Bernoulli GLR, for `BernoulliGLR-UCB<https://smpybandits.github.io/docs/Policies.CD_UCB.html?highlight=glr#Policies.CD_UCB.BernoulliGLR_IndexPolicy>`__.

[60]:

class ChangePointDetector(object):
    def __init__(self, **kwargs):
        self._kwargs = kwargs
        for key, value in kwargs.items():
            setattr(self, key, value)

    def __str__(self):
        return f"{self.__class__.__name__}{f'({repr(self._kwargs)})' if self._kwargs else ''}"

    def detect(self, all_data, t):
        raise NotImplementedError

Using classes simply lets us pretty-print the algorithms when they have parameters:

[27]:

print(ChangePointDetector())

ChangePointDetector

[28]:

print(ChangePointDetector(w=10, b=1))

ChangePointDetector({'w': 10, 'b': 1})

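Monitored

It uses a McDiarmid inequality, for an even window size \(w\in\mathbb{N}\) and a threshold \(b\in\mathbb{R}^+\). At time \(t\), if there are at least \(w\) samples in the data vector \((X_i)_i\), then let \(Y\) denote the last \(w\) samples. A change is detected if

\[\left| \sum_{i=w/2+1}^{w} Y_i - \sum_{i=1}^{w/2} Y_i \right| > b.\]

When no threshold is given, the implementation below uses \(b = \sqrt{\frac{w}{2} \log(2 K T^2)}\), with \(K\) the number of arms and \(T\) the horizon.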

[64]:

NB_ARMS = 1

WINDOW_SIZE = 80

[65]:

import numba

[88]:

class Monitored(ChangePointDetector):
    def __init__(self, window_size=WINDOW_SIZE, threshold_b=None):
        super().__init__(window_size=window_size, threshold_b=threshold_b)

    def __str__(self):
        if self.threshold_b:
            return f"Monitored($w={self.window_size:.3g}$, $b={self.threshold_b:.3g}$)"
        else:
            latexname = r"\sqrt{\frac{w}{2} \log(2 T^2)}"
            return f"Monitored($w={self.window_size:.3g}$, $b={latexname}$)"

    def detect(self, all_data, t):
        r""" A change is detected for the current arm if the following test is true:

        .. math:: |\sum_{i=w/2+1}^{w} Y_i - \sum_{i=1}^{w/2} Y_i | > b ?

        - where :math:`Y_i` is the i-th data in the latest w data from this arm (ie, :math:`X_k(t)` for :math:`t = n_k - w + 1` to :math:`t = n_k` current number of samples from arm k).
        - where :attr:`threshold_b` is the threshold b of the test, and :attr:`window_size` is the window-size w.
        """
        data = all_data[:t]
        # don't try to detect change if there is not enough data!
        if len(data) < self.window_size:
            return False

        # compute parameters
        horizon = len(all_data)
        threshold_b = self.threshold_b
        if threshold_b is None:
            threshold_b = np.sqrt(self.window_size/2 * np.log(2 * NB_ARMS * horizon**2))

        last_w_data = data[-self.window_size:]
        sum_first_half = np.sum(last_w_data[:self.window_size//2])
        sum_second_half = np.sum(last_w_data[self.window_size//2:])
        return abs(sum_first_half - sum_second_half) > threshold_b
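To see the Monitored test at work without any randomness, here is a self-contained sketch on a noise-free stream whose mean jumps from 0 to 1. The two helper functions are hypothetical stand-ins written only for this example (they do not use the class above), and the threshold is the default one for \(K = 1\) arm.

```python
import numpy as np

def monitored_statistic(last_w_data):
    """|sum of the second half - sum of the first half| of a window of even length."""
    half = len(last_w_data) // 2
    return abs(np.sum(last_w_data[half:]) - np.sum(last_w_data[:half]))

def first_detection_time(data, window_size, threshold_b):
    """Smallest t such that the test fires on data[:t], or None if it never fires."""
    for t in range(window_size, len(data) + 1):
        if monitored_statistic(data[t - window_size:t]) > threshold_b:
            return t
    return None

# Noise-free toy stream: 100 samples equal to 0, then 100 samples equal to 1.
data = np.concatenate((np.zeros(100), np.ones(100)))
w = 80
b = np.sqrt(w / 2 * np.log(2 * len(data) ** 2))  # default threshold, about 21.25
print(first_detection_time(data, w, b))  # 122
```

The statistic grows by one for each post-change sample entering the second half of the window, so the change at \(t = 100\) is detected once 22 new samples have been seen, the first time the statistic exceeds \(b \approx 21.25\).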

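CUSUM

The two-sided CUSUM algorithm, from [Page, 1954], works like this:

- For each data k, compute:

\[\begin{aligned}
s_k^- &= (y_k - \hat{u}_0 - \varepsilon) 1(k > M),\\
s_k^+ &= (\hat{u}_0 - y_k - \varepsilon) 1(k > M),\\
g_k^+ &= \max(0, g_{k-1}^+ + s_k^+),\\
g_k^- &= \max(0, g_{k-1}^- + s_k^-).
\end{aligned}\]

- The change is detected if \(\max(g_k^+, g_k^-) > h\), where \(h=\) threshold_h is the threshold of the test,

- And \(\hat{u}_0 = \frac{1}{M} \sum_{k=1}^{M} y_k\) is the mean of the first M samples, where M is the minimum number of observations between change points.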

[67]:

#: Precision of the test.

EPSILON = 0.5

#: Default value of :math:`\lambda`.

LAMBDA = 1

#: Hypothesis on the speed of changes: between two change points, there is at least :math:`M * K` time steps, where K is the number of arms, and M is this constant.

MIN_NUMBER_OF_OBSERVATION_BETWEEN_CHANGE_POINT = 50

MAX_NB_RANDOM_EVENTS = 1

[68]:

from scipy.special import comb

[89]:

def compute_h__CUSUM(horizon,
                     verbose=False,
                     M=MIN_NUMBER_OF_OBSERVATION_BETWEEN_CHANGE_POINT,
                     max_nb_random_events=MAX_NB_RANDOM_EVENTS,
                     nbArms=1,
                     epsilon=EPSILON,
                     lmbda=LAMBDA,
                     ):
    r""" Compute the values :math:`C_1^+, C_1^-, C_1, C_2, h` from the formulas in Theorem 2 and Corollary 2 in the paper."""
    T = int(max(1, horizon))
    UpsilonT = int(max(1, max_nb_random_events))
    K = int(max(1, nbArms))
    C1_minus = np.log(((4 * epsilon) / (1-epsilon)**2) * comb(M, int(np.floor(2 * epsilon * M))) * (2 * epsilon)**M + 1)
    C1_plus = np.log(((4 * epsilon) / (1+epsilon)**2) * comb(M, int(np.ceil(2 * epsilon * M))) * (2 * epsilon)**M + 1)
    C1 = min(C1_minus, C1_plus)
    if C1 == 0: C1 = 1  # FIXME
    h = 1/C1 * np.log(T / UpsilonT)
    return h
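As a sanity check of these formulas, the sketch below redoes the computation with the standard library only, for the default values \(\varepsilon = 0.5\), \(M = 50\) and a horizon \(T = 1000\) with one change. The function name `cusum_threshold` is hypothetical, and `math.comb` assumes Python 3.8 or later (the notebook itself uses `scipy.special.comb`).

```python
from math import ceil, comb, floor, log

def cusum_threshold(horizon, M=50, epsilon=0.5, max_nb_changes=1):
    """Return (C1_minus, C1_plus, h) following the same formulas as compute_h__CUSUM."""
    c1_minus = log(4 * epsilon / (1 - epsilon) ** 2
                   * comb(M, floor(2 * epsilon * M)) * (2 * epsilon) ** M + 1)
    c1_plus = log(4 * epsilon / (1 + epsilon) ** 2
                  * comb(M, ceil(2 * epsilon * M)) * (2 * epsilon) ** M + 1)
    c1 = min(c1_minus, c1_plus)
    if c1 == 0:
        c1 = 1  # same fallback as in the notebook
    return c1_minus, c1_plus, log(horizon / max_nb_changes) / c1

c1_minus, c1_plus, h = cusum_threshold(1000)
print(c1_minus)  # log(9), about 2.197
print(c1_plus)   # log(17/9), about 0.636
print(h)         # log(1000) / log(17/9), about 10.86
```

With \(\varepsilon = 0.5\), both binomial coefficients equal \(\binom{50}{50} = 1\) and \((2\varepsilon)^M = 1\), so the constants reduce to \(\log(8 + 1)\) and \(\log(8/9 + 1)\).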

[151]:

class CUSUM(ChangePointDetector):
    def __init__(self,
                 epsilon=EPSILON,
                 M=MIN_NUMBER_OF_OBSERVATION_BETWEEN_CHANGE_POINT,
                 threshold_h=None,
                 ):
        assert 0 < epsilon < 1, f"Error: epsilon for CUSUM must be in (0, 1) but is {epsilon}."
        super().__init__(epsilon=epsilon, M=M, threshold_h=threshold_h)

    def __str__(self):
        if self.threshold_h:
            return fr"CUSUM($\varepsilon={self.epsilon:.3g}$, $M={self.M}$, $h={self.threshold_h:.3g}$)"
        else:
            return fr"CUSUM($\varepsilon={self.epsilon:.3g}$, $M={self.M}$, $h=$'auto')"

    def detect(self, all_data, t):
        r""" Detect a change in the current arm, using the two-sided CUSUM algorithm [Page, 1954].

        - For each *data* k, compute:

        .. math::

            s_k^- &= (y_k - \hat{u}_0 - \varepsilon) 1(k > M),\\
            s_k^+ &= (\hat{u}_0 - y_k - \varepsilon) 1(k > M),\\
            g_k^+ &= \max(0, g_{k-1}^+ + s_k^+),\\
            g_k^- &= \max(0, g_{k-1}^- + s_k^-).

        - The change is detected if :math:`\max(g_k^+, g_k^-) > h`, where :attr:`threshold_h` is the threshold of the test,
        - And :math:`\hat{u}_0 = \frac{1}{M} \sum_{k=1}^{M} y_k` is the mean of the first M samples, where M is :attr:`M` the min number of observation between change points.
        """
        data = all_data[:t]
        # compute parameters
        horizon = len(all_data)
        threshold_h = self.threshold_h
        if self.threshold_h is None:
            # keyword arguments are required: the second positional parameter of compute_h__CUSUM is `verbose`
            threshold_h = compute_h__CUSUM(horizon, M=self.M, max_nb_random_events=1, epsilon=self.epsilon)

        gp, gm = 0, 0
        # First we use the first M samples to calculate the average :math:`\hat{u_0}`.
        u0hat = np.mean(data[:self.M])
        for k in range(self.M + 1, len(data)):
            y_k = data[k]
            sp = u0hat - y_k - self.epsilon  # no need to multiply by (k > self.M)
            sm = y_k - u0hat - self.epsilon  # no need to multiply by (k > self.M)
            gp = max(0, gp + sp)
            gm = max(0, gm + sm)
            if max(gp, gm) >= threshold_h:
                return True
        return False
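The recursion is easier to follow on a noise-free example. The sketch below is a standalone version of the two-sided CUSUM test, with the hypothetical name `cusum_first_alarm`; it returns the index of the first alarm instead of a boolean. It follows the formulas as stated (accumulation starts right after the first M samples, strict inequality), whereas the class above starts its loop at index `M + 1` and tests with `>=`, so the two may differ by one sample at the boundaries. The values of `epsilon` and `threshold_h` are arbitrary choices for the illustration.

```python
import numpy as np

def cusum_first_alarm(data, M, epsilon, threshold_h):
    """0-based index of the first sample at which two-sided CUSUM fires, or None."""
    u0hat = np.mean(data[:M])  # reference mean, estimated on the first M samples
    gp, gm = 0.0, 0.0
    for k in range(M, len(data)):  # samples after the first M (0-based indexing)
        gp = max(0.0, gp + (u0hat - data[k] - epsilon))  # accumulates downward shifts
        gm = max(0.0, gm + (data[k] - u0hat - epsilon))  # accumulates upward shifts
        if max(gp, gm) > threshold_h:
            return k
    return None

# Noise-free stream: 100 samples at 0.25, then 100 samples at 0.75.
data = np.concatenate((np.full(100, 0.25), np.full(100, 0.75)))
print(cusum_first_alarm(data, M=50, epsilon=0.125, threshold_h=2.0))  # 105
```

After the change, \(g^-\) grows by \(0.75 - 0.25 - 0.125 = 0.375\) per sample, so it first exceeds \(h = 2\) at the sixth post-change sample (index 105), while \(g^+\) stays at 0.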

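PHT

The two-sided Page-Hinkley Test (PHT), from [Hinkley, 1971], works like this:

- For each data k, compute:

\[\begin{aligned}
s_k^- &= y_k - \hat{y}_k - \varepsilon,\\
s_k^+ &= \hat{y}_k - y_k - \varepsilon,\\
g_k^+ &= \max(0, g_{k-1}^+ + s_k^+),\\
g_k^- &= \max(0, g_{k-1}^- + s_k^-).
\end{aligned}\]

- The change is detected if \(\max(g_k^+, g_k^-) > h\), where \(h=\) threshold_h is the threshold of the test,

- And \(\hat{y}_k = \frac{1}{k} \sum_{s=1}^{k} y_s\) is the mean of the first k samples.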

[152]:

class PHT(ChangePointDetector):
    def __init__(self,
                 epsilon=EPSILON,
                 M=MIN_NUMBER_OF_OBSERVATION_BETWEEN_CHANGE_POINT,
                 threshold_h=None,
                 ):
        assert 0 < epsilon < 1, f"Error: epsilon for PHT must be in (0, 1) but is {epsilon}."
        super().__init__(epsilon=epsilon, M=M, threshold_h=threshold_h)

    def __str__(self):
        if self.threshold_h:
            return fr"PHT($\varepsilon={self.epsilon:.3g}$, $M={self.M}$, $h={self.threshold_h:.3g}$)"
        else:
            return fr"PHT($\varepsilon={self.epsilon:.3g}$, $M={self.M}$, $h=$'auto')"

    def detect(self, all_data, t):
        r""" Detect a change in the current arm, using the two-sided PHT algorithm [Hinkley, 1971].

        - For each *data* k, compute:

        .. math::

            s_k^- &= y_k - \hat{y}_k - \varepsilon,\\
            s_k^+ &= \hat{y}_k - y_k - \varepsilon,\\
            g_k^+ &= \max(0, g_{k-1}^+ + s_k^+),\\
            g_k^- &= \max(0, g_{k-1}^- + s_k^-).

        - The change is detected if :math:`\max(g_k^+, g_k^-) > h`, where :attr:`threshold_h` is the threshold of the test,
        - And :math:`\hat{y}_k = \frac{1}{k} \sum_{s=1}^{k} y_s` is the mean of the first k samples.
        """
        data = all_data[:t]
        # compute parameters
        horizon = len(all_data)
        threshold_h = self.threshold_h
        if threshold_h is None:
            # keyword arguments are required: the second positional parameter of compute_h__CUSUM is `verbose`
            threshold_h = compute_h__CUSUM(horizon, M=self.M, max_nb_random_events=1, epsilon=self.epsilon)

        gp, gm = 0, 0
        y_k_hat = 0
        # The running mean :math:`\hat{y}_k` is updated as each sample arrives.
        for k, y_k in enumerate(data):
            y_k_hat = (k * y_k_hat + y_k) / (k + 1)  # XXX smart formula to update the mean!
            sp = y_k_hat - y_k - self.epsilon
            sm = y_k - y_k_hat - self.epsilon
            gp = max(0, gp + sp)
            gm = max(0, gm + sm)
            if max(gp, gm) >= threshold_h:
                return True
        return False
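The only difference with CUSUM is the reference mean: PHT uses the running mean \(\hat{y}_k\), updated in constant time at each step. The sketch below isolates that update and checks it against a direct computation; the generator name `running_means` is hypothetical, and `np.random.default_rng` assumes NumPy 1.17 or later.

```python
import numpy as np

def running_means(data):
    """Yield (k, mean of data[:k + 1]) using the O(1) incremental update."""
    y_k_hat = 0.0
    for k, y_k in enumerate(data):
        # (k * previous mean) is the sum of the first k samples
        y_k_hat = (k * y_k_hat + y_k) / (k + 1)
        yield k, y_k_hat

rng = np.random.default_rng(0)  # assumption: NumPy >= 1.17
data = rng.binomial(1, 0.3, size=200)
incremental = np.array([mean for _, mean in running_means(data)])
direct = np.cumsum(data) / np.arange(1, len(data) + 1)
print(np.allclose(incremental, direct))  # True
```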

Gaussian GLR¶

The Generalized Likelihood Ratio test (GLR) works with a one-dimensional exponential family, for which we have a function kl such that if \(\mu_1,\mu_2\) are the means of two distributions \(\nu_1,\nu_2\), then \(\mathrm{KL}(\mathcal{D}(\nu_1), \mathcal{D}(\nu_1))=\) kl \((\mu_1,\mu_2)\).

For each time step \(s\) between \(t_0=0\) and \(t\), compute:

\[G^{\mathcal{N}_1}_{t_0:s:t} = (s-t_0+1) \mathrm{kl}(\mu_{t_0,s}, \mu_{t_0,t}) + (t-s) \mathrm{kl}(\mu_{s+1,t}, \mu_{t_0,t}).\]

The change is detected if there is a time \(s\) such that \(G^{\mathcal{N}_1}_{t_0:s:t} > b(t_0, s, t, \delta)\), where \(b(t_0, s, t, \delta)\) (`threshold_h` in the code) is the threshold of the test, and \(\mu_{a,b} = \frac{1}{b-a+1} \sum_{s=a}^{b} y_s\) is the mean of the samples between \(a\) and \(b\).

The threshold is computed as:

\[b(t_0, s, t, \delta) := \left(1 + \frac{1}{t - t_0 + 1}\right) 2 \log\left(\frac{2 (t - t_0) \sqrt{(t - t_0) + 2}}{\delta}\right).\]

Another threshold we want to check is the following:

\[b(t_0, s, t, \delta) := \log\left(\frac{(s - t_0 + 1) (t - s)}{\delta}\right).\]

[124]:

from math import log, isinf

def compute_c__GLR_0(t0, s, t, horizon=None, delta=None):
    r""" Compute the values :math:`c` from the corollary of Theorem 2 from ["Sequential change-point detection: Laplace concentration of scan statistics and non-asymptotic delay bounds", O.-A. Maillard, 2018].

    - The threshold is computed as:

    .. math:: h := \left(1 + \frac{1}{t - t_0 + 1}\right) 2 \log\left(\frac{2 (t - t_0) \sqrt{(t - t_0) + 2}}{\delta}\right).
    """
    if delta is None:
        T = int(max(1, horizon))
        delta = 1.0 / T
    t_m_t0 = abs(t - t0)
    if t_m_t0 == 0:
        # math.log(0) raises ValueError: with no sample yet, no detection is possible
        return float('+inf')
    c = (1 + (1 / (t_m_t0 + 1.0))) * 2 * log((2 * t_m_t0 * np.sqrt(t_m_t0 + 2)) / delta)
    if c < 0 and isinf(c): c = float('+inf')
    return c

[125]:

from math import log, isinf

def compute_c__GLR(t0, s, t, horizon=None, delta=None):
    r""" Compute the values :math:`c` from the corollary of Theorem 2 from ["Sequential change-point detection: Laplace concentration of scan statistics and non-asymptotic delay bounds", O.-A. Maillard, 2018].

    - The threshold is computed as:

    .. math:: h := \log\left(\frac{(s - t_0 + 1) (t - s)}{\delta}\right).
    """
    if delta is None:
        T = int(max(1, horizon))
        delta = 1.0 / T
    arg = (s - t0 + 1) * (t - s) / delta
    if arg <= 0: c = float('+inf')
    else: c = log(arg)
    return c
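
To get a feel for the second threshold, the sketch below evaluates \(\log((s - t_0 + 1)(t - s)/\delta)\) for a few split points. The helper name `glr_threshold` and the values \(t=99\), \(\delta=0.01\) are assumptions chosen for illustration.

```python
from math import log

def glr_threshold(t0, s, t, delta):
    """Hypothetical restatement of log((s - t0 + 1) (t - s) / delta)."""
    return log((s - t0 + 1) * (t - s) / delta)

t0, t, delta = 0, 99, 0.01
for s in (0, 24, 49, 74, 98):
    print(f"s = {s:2d}: threshold = {glr_threshold(t0, s, t, delta):.3f}")
```

The threshold depends on \(s\) only through the product of the two segment lengths, so it is largest for a split in the middle and smallest for a split near either end.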

For Gaussian distributions of known variance, the Kullback-Leibler divergence is easy to compute. For two Gaussian distributions \(\nu_1 = \mathcal{N}(x, \sigma_x^2)\) and \(\nu_2 = \mathcal{N}(y, \sigma_x^2)\) of means \(x\) and \(y\) and common variance \(\sigma^2_x = \sigma^2_y\), it is:

\[\mathrm{KL}(\nu_1, \nu_2) = \frac{(x - y)^2}{2 \sigma_x^2}.\]

[126]:

def klGauss(x, y, sig2x=0.25):
    r""" Kullback-Leibler divergence for Gaussian distributions of means ``x`` and ``y`` and variances ``sig2x`` and ``sig2y``, :math:`\nu_1 = \mathcal{N}(x, \sigma_x^2)` and :math:`\nu_2 = \mathcal{N}(y, \sigma_y^2)`:

    .. math:: \mathrm{KL}(\nu_1, \nu_2) = \frac{(x - y)^2}{2 \sigma_y^2} + \frac{1}{2}\left( \frac{\sigma_x^2}{\sigma_y^2} - 1 - \log\left(\frac{\sigma_x^2}{\sigma_y^2}\right) \right).

    See https://en.wikipedia.org/wiki/Normal_distribution#Other_properties

    - Here sig2y = sig2x (same variance), so only the first term remains.
    """
    return (x - y) ** 2 / (2. * sig2x)
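
With this Gaussian kl, the two-term statistic \(G\) defined above (each segment mean compared to the overall mean) coincides with a single kl between the two segment means, weighted by \(n_1 n_2 / (n_1 + n_2)\). The sketch below checks this numerically; the sample sizes, means and the name `kl_gauss` are made up for the example.

```python
import numpy as np

def kl_gauss(x, y, sig2=0.25):
    """Same formula as klGauss, restated so the snippet is self-contained."""
    return (x - y) ** 2 / (2.0 * sig2)

rng = np.random.default_rng(0)
before = rng.normal(0.1, 0.5, size=30)   # n1 samples before the candidate split
after = rng.normal(0.9, 0.5, size=70)    # n2 samples after the candidate split
n1, n2 = len(before), len(after)
mu1, mu2 = before.mean(), after.mean()
mu_all = np.concatenate([before, after]).mean()

# Two-term form: each segment mean against the overall mean
two_terms = n1 * kl_gauss(mu1, mu_all) + n2 * kl_gauss(mu2, mu_all)
# One-term form: the two segment means against each other
one_term = n1 * n2 / (n1 + n2) * kl_gauss(mu1, mu2)
print(two_terms, one_term)
```

This identity is specific to the quadratic Gaussian kl; it does not hold in general for the Bernoulli kl.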

[127]:

class GaussianGLR(ChangePointDetector):
    def __init__(self, mult_threshold_h=1, delta=None):
        super().__init__(mult_threshold_h=mult_threshold_h, delta=delta)

    def __str__(self):
        return r"Gaussian-GLR($h_0={}$, $\delta={}$)".format(
            f"{self.mult_threshold_h:.3g}" if self.mult_threshold_h is not None else 'auto',
            f"{self.delta:.3g}" if self.delta is not None else 'auto',
        )

    def detect(self, all_data, t):
        r""" Detect a change in the current arm, using the Generalized Likelihood Ratio test (GLR) and the :attr:`kl` function.

        - For each *time step* :math:`s` between :math:`t_0=0` and :math:`t`, compute:

        .. math::

            G^{\mathcal{N}_1}_{t_0:s:t} = (s-t_0+1) \mathrm{kl}(\mu_{t_0,s}, \mu_{t_0,t}) + (t-s) \mathrm{kl}(\mu_{s+1,t}, \mu_{t_0,t}).

          For the Gaussian kl, this is equal to :math:`(s-t_0+1)(t-s) \mathrm{kl}(\mu_{s+1,t}, \mu_{t_0,s}) / (t-t_0+1)`.

        - The change is detected if there is a time :math:`s` such that :math:`G^{\mathcal{N}_1}_{t_0:s:t} > h`, where :attr:`threshold_h` is the threshold of the test,
        - And :math:`\mu_{a,b} = \frac{1}{b-a+1} \sum_{s=a}^{b} y_s` is the mean of the samples between :math:`a` and :math:`b`.
        """
        data = all_data[:t]
        t0 = 0
        horizon = len(all_data)

        # compute parameters
        mean_all = np.mean(data[t0 : t+1])
        mean_before = 0
        mean_after = mean_all
        for s in range(t0, t):
            # The two means are updated incrementally, so no mean is recomputed from scratch
            y = data[s]
            mean_before = (s * mean_before + y) / (s + 1)
            mean_after = ((t + 1 - s + t0) * mean_after - y) / (t - s + t0)
            kl_before = klGauss(mean_before, mean_all)
            kl_after = klGauss(mean_after, mean_all)
            threshold_h = self.mult_threshold_h * compute_c__GLR(t0, s, t, horizon=horizon, delta=self.delta)
            glr = (s - t0 + 1) * kl_before + (t - s) * kl_after
            if glr >= threshold_h:
                return True
        return False
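
The scan over all split points \(s\) can also be written with cumulative sums instead of an explicit loop. The following is only a sketch under stated assumptions: the array `y` holds the samples \(y_0, \dots, y_{n-1}\) (so \(t_0 = 0\) and \(t = n - 1\)), the variance `sig2` is known, and the threshold is \(\log((s+1)(t-s)/\delta)\). The function name `gaussian_glr_scan` and the toy data are hypothetical, and the indexing is not meant to reproduce `GaussianGLR.detect` exactly.

```python
import numpy as np

def gaussian_glr_scan(y, delta=0.01, sig2=0.25):
    """Return (detected, best_s) for a Gaussian GLR scan over all splits s.

    Sketch: y_0..y_s is the "before" segment, y_{s+1}..y_t the "after" segment.
    """
    y = np.asarray(y, dtype=float)
    n = len(y)
    if n < 2:
        return False, None
    s = np.arange(n - 1)                  # candidate splits s = 0..t-1
    n_before = s + 1                      # number of samples in y_0..y_s
    n_after = n - n_before                # number of samples in y_{s+1}..y_t
    csum = np.cumsum(y)
    mean_before = csum[:-1] / n_before
    mean_after = (csum[-1] - csum[:-1]) / n_after
    mean_all = csum[-1] / n
    # Two-term GLR statistic with the Gaussian kl (x - y)^2 / (2 sig2)
    glr = (n_before * (mean_before - mean_all) ** 2
           + n_after * (mean_after - mean_all) ** 2) / (2.0 * sig2)
    threshold = np.log(n_before * n_after / delta)
    excess = glr - threshold
    best = int(np.argmax(excess))
    return bool(excess[best] >= 0), best

rng = np.random.default_rng(1)
y = np.concatenate([rng.normal(0.1, 0.5, 100), rng.normal(0.9, 0.5, 100)])
print(gaussian_glr_scan(y))
```

The second returned value is the split with the largest excess over the threshold, which gives a rough estimate of the change point location when a change is detected.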

Bernoulli GLR¶

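The same GLR algorithm but using the Bernoulli KL, given by:

\[\mathrm{KL}(\mathcal{B}(x), \mathcal{B}(y)) = x \log\left(\frac{x}{y}\right) + (1-x) \log\left(\frac{1-x}{1-y}\right).\]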

[257]:

import cython

%load_ext cython

The cython extension is already loaded. To reload it, use:

%reload_ext cython

[265]:

def klBern(x: float, y: float) -> float:
    r""" Kullback-Leibler divergence for Bernoulli distributions. https://en.wikipedia.org/wiki/Bernoulli_distribution#Kullback.E2.80.93Leibler_divergence

    .. math:: \mathrm{KL}(\mathcal{B}(x), \mathcal{B}(y)) = x \log(\frac{x}{y}) + (1-x) \log(\frac{1-x}{1-y})."""
    x = min(max(x, 1e-6), 1 - 1e-6)
    y = min(max(y, 1e-6), 1 - 1e-6)
    return x * log(x / y) + (1 - x) * log((1 - x) / (1 - y))
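
The clipping of both arguments to \([10^{-6}, 1 - 10^{-6}]\) is what keeps the divergence finite when an empirical mean is exactly 0 or 1, which happens often on short runs of Bernoulli samples. A small sketch of this behaviour, where `kl_bern` restates the function with the clipping level exposed as a hypothetical `eps` parameter:

```python
from math import log

def kl_bern(x, y, eps=1e-6):
    """Same formula as klBern, with the clipping level exposed as `eps` (an assumption)."""
    x = min(max(x, eps), 1 - eps)
    y = min(max(y, eps), 1 - eps)
    return x * log(x / y) + (1 - x) * log((1 - x) / (1 - y))

print(kl_bern(0.5, 0.5))   # identical means: the divergence is 0
print(kl_bern(0.0, 0.5))   # x is clipped to eps, the result is close to log(2)
print(kl_bern(0.5, 0.0))   # y is clipped to eps: large, but finite
```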

[259]:

%timeit klBern(np.random.random(), np.random.random())

1.91 µs ± 107 ns per loop (mean ± std. dev. of 7 runs, 1000000 loops each)

[260]:

%%cython --annotate
from libc.math cimport log

eps = 1e-6 #: Threshold value: everything in [0, 1] is truncated to [eps, 1 - eps]

def klBern_cython(float x, float y) -> float:
    r""" Kullback-Leibler divergence for Bernoulli distributions. https://en.wikipedia.org/wiki/Bernoulli_distribution#Kullback.E2.80.93Leibler_divergence

    .. math:: \mathrm{KL}(\mathcal{B}(x), \mathcal{B}(y)) = x \log(\frac{x}{y}) + (1-x) \log(\frac{1-x}{1-y})."""
    x = min(max(x, 1e-6), 1 - 1e-6)
    y = min(max(y, 1e-6), 1 - 1e-6)
    return x * log(x / y) + (1 - x) * log((1 - x) / (1 - y))


[261]:

%timeit klBern_cython(np.random.random(), np.random.random())

900 ns ± 80.7 ns per loop (mean ± std. dev. of 7 runs, 1000000 loops each)

Now the class. As written, it calls the pure-Python `klBern`; substitute `klBern_cython` in `detect` to use the optimized version.

[262]:

class BernoulliGLR(ChangePointDetector):
    def __init__(self, mult_threshold_h=1, delta=None):
        super().__init__(mult_threshold_h=mult_threshold_h, delta=delta)

    def __str__(self):
        return r"Bernoulli-GLR($h_0={}$, $\delta={}$)".format(
            f"{self.mult_threshold_h:.3g}" if self.mult_threshold_h is not None else 'auto',
            f"{self.delta:.3g}" if self.delta is not None else 'auto',
        )

    def detect(self, all_data, t):
        r""" Detect a change in the current arm, using the Generalized Likelihood Ratio test (GLR) and the :attr:`kl` function.

        - For each *time step* :math:`s` between :math:`t_0=0` and :math:`t`, compute:

        .. math::

            G^{\mathcal{B}}_{t_0:s:t} = (s-t_0+1) \mathrm{kl}(\mu_{t_0,s}, \mu_{t_0,t}) + (t-s) \mathrm{kl}(\mu_{s+1,t}, \mu_{t_0,t}).

        - The change is detected if there is a time :math:`s` such that :math:`G^{\mathcal{B}}_{t_0:s:t} > h`, where :attr:`threshold_h` is the threshold of the test,
        - And :math:`\mu_{a,b} = \frac{1}{b-a+1} \sum_{s=a}^{b} y_s` is the mean of the samples between :math:`a` and :math:`b`.
        """
        data = all_data[:t]
        t0 = 0
        horizon = len(all_data)

        # compute parameters
        mean_all = np.mean(data[t0 : t+1])
        mean_before = 0
        mean_after = mean_all
        for s in range(t0, t):
            # The two means are updated incrementally, so no mean is recomputed from scratch
            y = data[s]
            mean_before = (s * mean_before + y) / (s + 1)
            mean_after = ((t + 1 - s + t0) * mean_after - y) / (t - s + t0)
            kl_before = klBern(mean_before, mean_all)
            kl_after = klBern(mean_after, mean_all)
            threshold_h = self.mult_threshold_h * compute_c__GLR(t0, s, t, horizon=horizon, delta=self.delta)
            glr = (s - t0 + 1) * kl_before + (t - s) * kl_after
            if glr >= threshold_h:
                return True
        return False

Sub-Gaussian GLR¶

A slightly different GLR algorithm for non-parametric sub-Gaussian distributions. We assume the distributions \(\nu^1\) and \(\nu^2\) to be \(\sigma^2\)-sub-Gaussian, for a known value of \(\sigma\in\mathbb{R}^+\), and we fix a confidence level \(\delta\in(0,1)\) (typically, it is set to \(\frac{1}{T}\) if the horizon \(T\) is known, or \(\delta=\delta_t=\frac{1}{t^2}\) to have \(\sum_{t=1}^{T} \delta_t < +\infty\)).

The non-parametric sub-Gaussian Generalized Likelihood Ratio test (GLR) then works as follows.

For each time step \(s\) between \(t_0=0\) and \(t\), compute:

\[G^{\text{sub-}\sigma}_{t_0:s:t} = |\mu_{t_0,s} - \mu_{s+1,t}|.\]

The change is detected if there is a time \(s\) such that \(G^{\text{sub-}\sigma}_{t_0:s:t} > b_{t_0}(s,t,\delta)\), where \(b_{t_0}(s,t,\delta)\) is the threshold of the test, and \(\mu_{a,b} = \frac{1}{b-a+1} \sum_{s=a}^{b} y_s\) is the mean of the samples between \(a\) and \(b\).

The threshold is computed as either the “joint” variant:

\[b^{\text{joint}}_{t_0}(s,t,\delta) := \sigma \sqrt{ \left(\frac{1}{s-t_0+1} + \frac{1}{t-s}\right) \left(1 + \frac{1}{t-t_0+1}\right) 2 \log\left( \frac{2(t-t_0)\sqrt{t-t_0+2}}{\delta} \right)},\]

or the “disjoint” variant:

\[b^{\text{disjoint}}_{t_0}(s,t,\delta) := \sqrt{2} \sigma \left( \sqrt{ \frac{1 + \frac{1}{s-t_0+1}}{s-t_0+1} \log\left( \frac{4 \sqrt{s-t_0+2}}{\delta} \right)} + \sqrt{ \frac{1 + \frac{1}{t-s+1}}{t-s+1} \log\left( \frac{4 (t-t_0) \sqrt{t-s+1}}{\delta} \right)} \right).\]

In the code below, both logarithms are clipped at \(0\).

[129]:

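# Default confidence level?
DELTA = 0.01

# By default, assume distributions are sub-Gaussian with variance proxy 0.25,
# like Bernoulli or any distribution with support on [0, 1].
# Caution: the threshold functions below multiply by sigma (not sigma^2),
# so a variance proxy of 0.25 corresponds to sigma = 0.5; the results
# shown in this notebook were obtained with SIGMA = 0.25.
SIGMA = 0.25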

[130]:

# Whether to use the joint or disjoint threshold function

JOINT = True

[159]:

from math import log, sqrt

def threshold_SubGaussianGLR_joint(t0, s, t, delta=DELTA, sigma=SIGMA):
    return sigma * sqrt(
        (1.0 / (s - t0 + 1) + 1.0/(t - s)) * (1.0 + 1.0/(t - t0+1))
        * 2 * max(0, log(( 2 * (t - t0) * sqrt(t - t0 + 2)) / delta ))
    )

[160]:

from math import log, sqrt

def threshold_SubGaussianGLR_disjoint(t0, s, t, delta=DELTA, sigma=SIGMA):
    return np.sqrt(2) * sigma * (sqrt(
        ((1.0 + (1.0 / (s - t0 + 1))) / (s - t0 + 1)) * max(0, log( (4 * sqrt(s - t0 + 2)) / delta ))
    ) + sqrt(
        ((1.0 + (1.0 / (t - s + 1))) / (t - s + 1)) * max(0, log( (4 * (t - t0) * sqrt(t - s + 1)) / delta ))
    ))

[161]:

def threshold_SubGaussianGLR(t0, s, t, delta=DELTA, sigma=SIGMA, joint=JOINT):
    if joint:
        return threshold_SubGaussianGLR_joint(t0, s, t, delta, sigma=sigma)
    else:
        return threshold_SubGaussianGLR_disjoint(t0, s, t, delta, sigma=sigma)
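
To see how the two variants behave as the split point moves, the sketch below restates both formulas with the defaults inlined (\(\delta=0.01\), \(\sigma=0.25\)) and prints them for a few values of \(s\). The short names `joint` and `disjoint` and the choice \(t=100\) are assumptions for this illustration.

```python
from math import log, sqrt

def joint(t0, s, t, delta=0.01, sigma=0.25):
    """Restatement of the joint threshold, for a self-contained example."""
    return sigma * sqrt(
        (1.0 / (s - t0 + 1) + 1.0 / (t - s)) * (1.0 + 1.0 / (t - t0 + 1))
        * 2 * max(0, log((2 * (t - t0) * sqrt(t - t0 + 2)) / delta))
    )

def disjoint(t0, s, t, delta=0.01, sigma=0.25):
    """Restatement of the disjoint threshold, for a self-contained example."""
    return sqrt(2) * sigma * (
        sqrt(((1.0 + 1.0 / (s - t0 + 1)) / (s - t0 + 1))
             * max(0, log((4 * sqrt(s - t0 + 2)) / delta)))
        + sqrt(((1.0 + 1.0 / (t - s + 1)) / (t - s + 1))
               * max(0, log((4 * (t - t0) * sqrt(t - s + 1)) / delta)))
    )

t0, t = 0, 100
for s in (1, 25, 50, 75, 98):
    print(f"s = {s:2d}: joint = {joint(t0, s, t):.3f}, disjoint = {disjoint(t0, s, t):.3f}")
```

Both thresholds are compared to a difference of means, so a split that leaves very few samples on one side needs a much larger gap to trigger a detection.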

And now we can write the CD algorithm:

[162]:

class SubGaussianGLR(ChangePointDetector):
    def __init__(self, delta=DELTA, sigma=SIGMA, joint=JOINT):
        super().__init__(delta=delta, sigma=sigma, joint=joint)

    def __str__(self):
        return fr"SubGaussian-GLR($\delta=${self.delta:.3g}, $\sigma=${self.sigma:.3g}, {'joint' if self.joint else 'disjoint'})"

    def detect(self, all_data, t):
        r""" Detect a change in the current arm, using the non-parametric sub-Gaussian Generalized Likelihood Ratio test (GLR), which works like this:

        - For each *time step* :math:`s` between :math:`t_0=0` and :math:`t`, compute:

        .. math:: G^{\text{sub-}\sigma}_{t_0:s:t} = |\mu_{t_0,s} - \mu_{s+1,t}|.

        - The change is detected if there is a time :math:`s` such that :math:`G^{\text{sub-}\sigma}_{t_0:s:t} > b_{t_0}(s,t,\delta)`, where :math:`b_{t_0}(s,t,\delta)` is the threshold of the test,

        The threshold is computed as (joint variant):

        .. math:: b_{t_0}(s,t,\delta) := \sigma \sqrt{ \left(\frac{1}{s-t_0+1} + \frac{1}{t-s}\right) \left(1 + \frac{1}{t-t_0+1}\right) 2 \log\left( \frac{2(t-t_0)\sqrt{t-t_0+2}}{\delta} \right)}.

        - And :math:`\mu_{a,b} = \frac{1}{b-a+1} \sum_{s=a}^{b} y_s` is the mean of the samples between :math:`a` and :math:`b`.
        """
        data = all_data[:t]
        t0 = 0
        horizon = len(all_data)
        delta = self.delta
        if delta is None:
            delta = 1.0 / max(1, horizon)

        mean_before = 0
        mean_after = np.mean(data[t0 : t+1])
        for s in range(t0, t):
            # The two means are updated incrementally, so no mean is recomputed from scratch
            y = data[s]
            mean_before = (s * mean_before + y) / (s + 1)
            mean_after = ((t + 1 - s + t0) * mean_after - y) / (t - s + t0)

            # compute threshold
            threshold = threshold_SubGaussianGLR(t0, s, t, delta=delta, sigma=self.sigma, joint=self.joint)
            glr = abs(mean_before - mean_after)
            if glr >= threshold:
                # print(f"DEBUG: t0 = {t0}, t = {t}, s = {s}, horizon = {horizon}, delta = {delta}, threshold = {threshold} and mu(s+1, t) = {mu(s+1, t)}, and mu(t0, s) = {mu(t0, s)}, and and glr = {glr}.")
                return True
        return False

List of all Python algorithms¶

[135]:

all_CD_algorithms = [

Monitored, CUSUM, PHT,

GaussianGLR, BernoulliGLR, SubGaussianGLR

]

Comparing the different implementations¶

I now want to compare, on a simple non-stationary problem, the efficiency of the different change detection algorithms, in terms of:

- speed of computations (we should see that naive Python is much slower than Numba, which is itself slower than the Cython version),
- memory usage of the algorithms (I guess we will draw the same observations),

but most importantly, in terms of:

- detection delay, as a function of the amplitude of the breakpoint, of the number of prior data (from \(t=1\) to \(t=\tau\)), or of the parameter(s) of the algorithm,
- probability of false detection, or of missed detection.

[136]:

def str_of_CDAlgorithm(CDAlgorithm, *args, **kwargs):
    detector = CDAlgorithm(*args, **kwargs)
    return str(detector)

Generating some toy data¶

[137]:

# With 1 arm only! With 1 change only!
toy_problem_piecewise = lambda firstMean, secondMean, tau: lambda horizon: {
    "listOfMeans": [
        [firstMean],   # from t = 0 to tau - 1
        [secondMean],  # from t = tau to horizon - 1
    ],
    "changePoints": [
        0,
        tau
    ],
}

[138]:

def get_toy_data(firstMean=0.5, secondMean=0.9, tau=None, horizon=100, gaussian=False):
    if tau is None:
        tau = horizon // 2
    elif isinstance(tau, float):
        tau = int(tau * horizon)
    problem = toy_problem_piecewise(firstMean, secondMean, tau)
    if gaussian:
        data = piecewise_gaussian_samples(problem, horizon=horizon)
    else:
        data = piecewise_bernoulli_samples(problem, horizon=horizon)
    data = data.reshape(horizon)
    return data
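
For readers who want to reproduce this kind of data without the helper functions from the first section, here is a minimal NumPy-only sketch. The name `toy_bernoulli` and its `seed` parameter are hypothetical and not part of the notebook's API.

```python
import numpy as np

def toy_bernoulli(first_mean=0.1, second_mean=0.9, tau=50, horizon=100, seed=None):
    """Hypothetical stand-in: Bernoulli(first_mean) for t < tau, Bernoulli(second_mean) after."""
    rng = np.random.default_rng(seed)
    # Mean of the distribution at each time step t = 0..horizon-1
    means = np.where(np.arange(horizon) < tau, first_mean, second_mean)
    # One uniform draw per time step, thresholded at the current mean
    return (rng.random(horizon) < means).astype(float)

data = toy_bernoulli(seed=0)
print(data[:50].mean(), data[50:].mean())
```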

It is now very easy to generate data and to “see” the location of the breakpoint directly in the samples:

[74]:

get_toy_data(firstMean=0.1, secondMean=0.9, tau=0.5, horizon=100)

[74]:

array([0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0.,

0., 0., 0., 0., 0., 0., 0., 0., 0., 1., 1., 0., 0., 0., 0., 0., 0.,

1., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 1.,

1., 1., 1., 1., 0., 1., 1., 1., 1., 1., 1., 0., 1., 1., 1., 1., 1.,

0., 1., 0., 1., 1., 0., 1., 1., 1., 1., 0., 1., 1., 0., 1., 1., 1.,

1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1.])

[75]:

get_toy_data(firstMean=0.1, secondMean=0.9, tau=0.2, horizon=100)

[75]:

array([0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0.,

0., 0., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 0., 1., 1., 1., 1.,

1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 0., 1., 1., 1.,

1., 1., 1., 1., 1., 1., 1., 0., 0., 1., 1., 1., 1., 1., 1., 1., 1.,

1., 1., 1., 1., 1., 0., 1., 1., 1., 1., 1., 0., 1., 1., 1., 1., 1.,

1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 0., 1.])

[76]:

get_toy_data(firstMean=0.1, secondMean=0.4, tau=0.5, horizon=100)

[76]:

array([0., 0., 0., 0., 0., 0., 0., 1., 0., 0., 0., 0., 0., 0., 0., 0., 0.,

0., 0., 0., 0., 0., 1., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 1.,

1., 0., 0., 0., 1., 0., 0., 0., 0., 0., 0., 1., 0., 1., 0., 0., 0.,

1., 0., 0., 1., 0., 0., 1., 0., 0., 1., 1., 1., 0., 1., 0., 0., 1.,

0., 0., 1., 0., 0., 0., 1., 1., 1., 1., 0., 0., 0., 0., 0., 0., 0.,

1., 0., 0., 1., 1., 0., 0., 1., 0., 1., 0., 1., 0., 0., 0.])

And similarly for Gaussian data, we clearly see a difference around the middle of the vector:

[77]:

get_toy_data(firstMean=0.1, secondMean=0.9, tau=0.5, horizon=20, gaussian=True)

[77]:

array([ 0.28359652, -0.01616356, -0.48312871, 0.31544065, 0.14213706,

0.30068739, 0.13366152, 0.15525014, 0.15127236, 0.8348038 ,

0.86049004, 1.28897058, 0.80222673, 0.83035987, 1.26805377,

0.9509542 , 1.00407429, 1.65299813, 1.2069301 , 1.06948091])

Of course, we want to check that detecting the change becomes harder when:

- the gap \(\Delta = |\mu^{(2)} - \mu^{(1)}|\) decreases,
- the number of samples before the change decreases (\(\tau\) decreases),
- the number of samples after the change decreases (\(T - \tau\) decreases).
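
One rough way to quantify these three effects, assuming independent samples with the same standard deviation \(\sigma\) on both sides of the change: the difference of the two empirical means has standard deviation \(\sigma \sqrt{1/\tau + 1/(T-\tau)}\), so the ratio of the gap \(\Delta\) to this quantity shrinks in each of the three cases. The helper name `gap_to_noise` and the value \(\sigma = 0.5\) are assumptions for this sketch.

```python
from math import sqrt

def gap_to_noise(delta_gap, tau, horizon, sigma=0.5):
    """Gap divided by the standard deviation of the difference of the two empirical means.

    Assumes independent samples with the same standard deviation sigma on both sides.
    """
    n_before, n_after = tau, horizon - tau
    return delta_gap / (sigma * sqrt(1.0 / n_before + 1.0 / n_after))

print(gap_to_noise(0.8, tau=50, horizon=100))  # large gap, balanced split
print(gap_to_noise(0.3, tau=50, horizon=100))  # smaller gap
print(gap_to_noise(0.8, tau=5, horizon=100))   # few samples before the change
print(gap_to_noise(0.8, tau=95, horizon=100))  # few samples after the change
```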

[139]:

# Cf. https://stackoverflow.com/a/36313217/

from IPython.display import display, Markdown

[140]:

def check_onemeasure(measure, name,
                     firstMean=0.1,
                     secondMean=0.4,
                     tau=0.5,
                     horizon=100,
                     repetitions=50,
                     gaussian=False,
                     unit="",
                     list_of_args_kwargs=None,
                     CDAlgorithms=None,
                     ):
    if CDAlgorithms is None:
        CDAlgorithms = tuple(all_CD_algorithms)
    if isinstance(tau, float):
        tau = int(tau * horizon)
    print(f"\nGenerating toy {'Gaussian' if gaussian else 'Bernoulli'} data for mu^1 = {firstMean}, mu^2 = {secondMean}, tau = {tau} and horizon = {horizon}...")
    results = np.zeros((repetitions, len(CDAlgorithms)))
    list_of_args = [tuple() for _ in CDAlgorithms]
    list_of_kwargs = [dict() for _ in CDAlgorithms]
    for rep in tqdm(range(repetitions), desc="Repetitions"):
        data = get_toy_data(firstMean=firstMean, secondMean=secondMean, tau=tau, horizon=horizon, gaussian=gaussian)
        for i, CDAlgorithm in enumerate(CDAlgorithms):
            if list_of_args_kwargs:
                list_of_args[i], list_of_kwargs[i] = list_of_args_kwargs[i]
            results[rep, i] = measure(data, tau, CDAlgorithm, *list_of_args[i], **list_of_kwargs[i])
    # print and display a table of the results
    markdown_text = """
| Algorithm | {} |
|------|------|
{}
    """.format(name, "\n".join([
        "| {} | ${:.3g}${} |".format(
            str_of_CDAlgorithm(CDAlgorithm, *list_of_args[i], **list_of_kwargs[i]),
            mean_result, unit
        )
        # enumerate, so that each row uses the arguments of its own algorithm
        for i, (CDAlgorithm, mean_result) in enumerate(zip(CDAlgorithms, np.mean(results, axis=0)))
    ]))
    print(markdown_text)
    display(Markdown(markdown_text))
    return results

[141]:

def eval_CDAlgorithm(CDAlgorithm, data, t, *args, **kwargs):
    detector = CDAlgorithm(*args, **kwargs)
    return detector.detect(data, t)

Checking time efficiency¶

I don’t really care about memory efficiency, so I won’t check it.

[142]:

import time

[143]:

def time_efficiency(data, tau, CDAlgorithm, *args, **kwargs):
    startTime = time.time()
    horizon = len(data)
    for t in range(0, horizon + 1):
        _ = eval_CDAlgorithm(CDAlgorithm, data, t, *args, **kwargs)
    endTime = time.time()
    return endTime - startTime
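
Some of the durations measured here are well below a millisecond. For such short intervals, the standard library also offers `time.perf_counter()`, a monotonic high-resolution clock intended for timing. The sketch below shows the pattern with a hypothetical `timed` helper and a dummy workload standing in for a detector call; it is an alternative, not what produced the numbers in this notebook.

```python
import time

def timed(function, *args, **kwargs):
    """Return (result, elapsed seconds) using the high-resolution monotonic clock."""
    start = time.perf_counter()
    result = function(*args, **kwargs)
    return result, time.perf_counter() - start

# Dummy workload standing in for a detector call (hypothetical)
result, elapsed = timed(sum, range(100_000))
print(f"{elapsed:.6f} seconds")
```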

To benchmark each of the CD algorithms, we can use the [line_profiler](https://github.com/rkern/line_profiler#line_profiler) module and its `lprun` magic.

[148]:

!pip3 install line_profiler >/dev/null

[153]:

%lprun -f Monitored.detect check_onemeasure(time_efficiency, "Time", firstMean=0.1, secondMean=0.4, tau=0.5, horizon=200, unit=" seconds", CDAlgorithms=[Monitored])

Generating toy Bernoulli data for mu^1 = 0.1, mu^2 = 0.4, tau = 100 and horizon = 200...

| Algorithm | Time |

|------|------|

| Monitored($w=80$, $b=\sqrt{\frac{w}{2} \log(2 T^2)}$) | $0.00534$ seconds |


\(20\%\) of the time is spent computing the threshold, and \(55\%\) is spent computing the two sums: we cannot optimize these!

[154]:

%lprun -f CUSUM.detect check_onemeasure(time_efficiency, "Time", firstMean=0.1, secondMean=0.4, tau=0.5, horizon=200, unit=" seconds", CDAlgorithms=[CUSUM])

Generating toy Bernoulli data for mu^1 = 0.1, mu^2 = 0.4, tau = 100 and horizon = 200...

| Algorithm | Time |

|------|------|

| CUSUM($\varepsilon=0.5$, $M=50$, $h=$'auto') | $0.137$ seconds |


\(10\%\) of the time is spent computing the threshold, and about \(10\%\) to \(25\%\) is spent on the few arithmetic operations, which can hardly be optimized.

[155]:

%lprun -f PHT.detect check_onemeasure(time_efficiency, "Time", firstMean=0.1, secondMean=0.4, tau=0.5, horizon=200, unit=" seconds", CDAlgorithms=[PHT])

Generating toy Bernoulli data for mu^1 = 0.1, mu^2 = 0.4, tau = 100 and horizon = 200...

| Algorithm | Time |

|------|------|

| PHT($\varepsilon=0.5$, $M=50$, $h=$'auto') | $0.224$ seconds |


\(10\%\) of the time is spent computing the threshold, and about \(10\%\) to \(25\%\) is spent on the few arithmetic operations, which can hardly be optimized.

[156]:

%lprun -f GaussianGLR.detect check_onemeasure(time_efficiency, "Time", firstMean=0.1, secondMean=0.4, tau=0.5, horizon=200, unit=" seconds", CDAlgorithms=[GaussianGLR])

Generating toy Bernoulli data for mu^1 = 0.1, mu^2 = 0.4, tau = 100 and horizon = 200...

| Algorithm | Time |

|------|------|

| Gaussian-GLR($h_0=1$, $\delta=auto$) | $0.399$ seconds |


\(30\%\) of the time is spent computing the threshold (it must be recomputed at every step!), and about \(10\%\) to \(25\%\) is spent on the few arithmetic operations, which can hardly be optimized.

[157]:

%lprun -f BernoulliGLR.detect check_onemeasure(time_efficiency, "Time", firstMean=0.1, secondMean=0.4, tau=0.5, horizon=200, unit=" seconds", CDAlgorithms=[BernoulliGLR])

Generating toy Bernoulli data for mu^1 = 0.1, mu^2 = 0.4, tau = 100 and horizon = 200...

| Algorithm | Time |

|------|------|

| Bernoulli-GLR($h_0=1$, $\delta=auto$) | $0.307$ seconds |


\(30\%\) of the time is spent computing the threshold (it must be recomputed at every step!), and about \(10\%\) to \(25\%\) is spent on the few arithmetic operations, which can hardly be optimized.

[163]:

%lprun -f SubGaussianGLR.detect check_onemeasure(time_efficiency, "Time", firstMean=0.1, secondMean=0.4, tau=0.5, horizon=200, unit=" seconds", CDAlgorithms=[SubGaussianGLR])

Generating toy Bernoulli data for mu^1 = 0.1, mu^2 = 0.4, tau = 100 and horizon = 200...

| Algorithm | Time |

|------|------|

| SubGaussian-GLR($\delta=$0.01, $\sigma=$0.25, joint) | $0.176$ seconds |


\(30\%\) of the time is spent computing the threshold (it must be recomputed at every step!), and about \(10\%\) to \(25\%\) is spent on the few arithmetic operations, which can hardly be optimized.

For example:

[164]:

_ = check_onemeasure(time_efficiency, "Time", firstMean=0.1, secondMean=0.4, tau=0.5, horizon=100, unit=" seconds")

Generating toy Bernoulli data for mu^1 = 0.1, mu^2 = 0.4, tau = 50 and horizon = 100...

| Algorithm | Time |

|------|------|

| Monitored($w=80$, $b=\sqrt{\frac{w}{2} \log(2 T^2)}$) | $0.000568$ seconds |

| CUSUM($\varepsilon=0.5$, $M=50$, $h=$'auto') | $0.00856$ seconds |

| PHT($\varepsilon=0.5$, $M=50$, $h=$'auto') | $0.0198$ seconds |

| Gaussian-GLR($h_0=1$, $\delta=auto$) | $0.0265$ seconds |

| Bernoulli-GLR($h_0=1$, $\delta=auto$) | $0.0163$ seconds |

| SubGaussian-GLR($\delta=$0.01, $\sigma=$0.25, joint) | $0.0141$ seconds |


[165]:

_ = check_onemeasure(time_efficiency, "Time", firstMean=0.1, secondMean=0.4, tau=0.5, horizon=100, unit=" seconds", gaussian=True)

Generating toy Gaussian data for mu^1 = 0.1, mu^2 = 0.4, tau = 50 and horizon = 100...

| Algorithm | Time |

|------|------|

| Monitored($w=80$, $b=\sqrt{\frac{w}{2} \log(2 T^2)}$) | $0.000694$ seconds |

| CUSUM($\varepsilon=0.5$, $M=50$, $h=$'auto') | $0.0109$ seconds |

| PHT($\varepsilon=0.5$, $M=50$, $h=$'auto') | $0.0256$ seconds |

| Gaussian-GLR($h_0=1$, $\delta=auto$) | $0.0359$ seconds |

| Bernoulli-GLR($h_0=1$, $\delta=auto$) | $0.0224$ seconds |

| SubGaussian-GLR($\delta=$0.01, $\sigma=$0.25, joint) | $0.0182$ seconds |


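The three GLR tests are very slow compared to the Monitored approach (roughly 25 to 50 times slower in these runs), and slower than CUSUM. Compared to PHT, Gaussian-GLR is slower, while Bernoulli-GLR and SubGaussian-GLR are slightly faster.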

[166]:

%%time

_ = check_onemeasure(time_efficiency, "Time", firstMean=0.1, secondMean=0.2, tau=0.5, horizon=100, unit=" seconds")

Generating toy Bernoulli data for mu^1 = 0.1, mu^2 = 0.2, tau = 50 and horizon = 100...

| Algorithm | Time |

|------|------|

| Monitored($w=80$, $b=\sqrt{\frac{w}{2} \log(2 T^2)}$) | $0.000693$ seconds |

| CUSUM($\varepsilon=0.5$, $M=50$, $h=$'auto') | $0.0117$ seconds |

| PHT($\varepsilon=0.5$, $M=50$, $h=$'auto') | $0.0276$ seconds |

| Gaussian-GLR($h_0=1$, $\delta=auto$) | $0.0378$ seconds |

| Bernoulli-GLR($h_0=1$, $\delta=auto$) | $0.0237$ seconds |

| SubGaussian-GLR($\delta=$0.01, $\sigma=$0.25, joint) | $0.024$ seconds |


CPU times: user 6.2 s, sys: 28 ms, total: 6.23 s

Wall time: 6.43 s

Let’s compare the results for \(T=100\), \(T=500\) and \(T=1000\), and then for \(T=2000\) and \(T=2500\) (the last two run with `repetitions=10`):

[170]:

%%time

results_T100 = check_onemeasure(time_efficiency, "Time", firstMean=0.1, secondMean=0.9, tau=0.5, horizon=100, unit=" seconds")

Generating toy Bernoulli data for mu^1 = 0.1, mu^2 = 0.9, tau = 50 and horizon = 100...

| Algorithm | Time |
|------|------|
| Monitored($w=80$, $b=\sqrt{\frac{w}{2} \log(2 T^2)}$) | $0.000614$ seconds |
| CUSUM($\varepsilon=0.5$, $M=50$, $h=$'auto') | $0.00824$ seconds |
| PHT($\varepsilon=0.5$, $M=50$, $h=$'auto') | $0.0214$ seconds |
| Gaussian-GLR($h_0=1$, $\delta=auto$) | $0.0188$ seconds |
| Bernoulli-GLR($h_0=1$, $\delta=auto$) | $0.0115$ seconds |
| SubGaussian-GLR($\delta=$0.01, $\sigma=$0.25, joint) | $0.00945$ seconds |

CPU times: user 3.59 s, sys: 12 ms, total: 3.6 s

Wall time: 3.59 s

[171]:

%%time

results_T500 = check_onemeasure(time_efficiency, "Time", firstMean=0.1, secondMean=0.9, tau=0.5, horizon=500, unit=" seconds")

Generating toy Bernoulli data for mu^1 = 0.1, mu^2 = 0.9, tau = 250 and horizon = 500...

| Algorithm | Time |
|------|------|
| Monitored($w=80$, $b=\sqrt{\frac{w}{2} \log(2 T^2)}$) | $0.00808$ seconds |
| CUSUM($\varepsilon=0.5$, $M=50$, $h=$'auto') | $0.251$ seconds |
| PHT($\varepsilon=0.5$, $M=50$, $h=$'auto') | $0.374$ seconds |
| Gaussian-GLR($h_0=1$, $\delta=auto$) | $0.333$ seconds |
| Bernoulli-GLR($h_0=1$, $\delta=auto$) | $0.194$ seconds |
| SubGaussian-GLR($\delta=$0.01, $\sigma=$0.25, joint) | $0.164$ seconds |

CPU times: user 1min 6s, sys: 87.6 ms, total: 1min 6s

Wall time: 1min 6s

[172]:

%%time

results_T1000 = check_onemeasure(time_efficiency, "Time", firstMean=0.1, secondMean=0.9, tau=0.5, horizon=1000, unit=" seconds")

Generating toy Bernoulli data for mu^1 = 0.1, mu^2 = 0.9, tau = 500 and horizon = 1000...

| Algorithm | Time |
|------|------|
| Monitored($w=80$, $b=\sqrt{\frac{w}{2} \log(2 T^2)}$) | $0.0162$ seconds |
| CUSUM($\varepsilon=0.5$, $M=50$, $h=$'auto') | $0.943$ seconds |
| PHT($\varepsilon=0.5$, $M=50$, $h=$'auto') | $1.3$ seconds |
| Gaussian-GLR($h_0=1$, $\delta=auto$) | $1.1$ seconds |
| Bernoulli-GLR($h_0=1$, $\delta=auto$) | $0.635$ seconds |
| SubGaussian-GLR($\delta=$0.01, $\sigma=$0.25, joint) | $0.552$ seconds |

CPU times: user 3min 47s, sys: 319 ms, total: 3min 47s

Wall time: 3min 47s

[193]:

%%time

results_T2000 = check_onemeasure(time_efficiency, "Time", firstMean=0.1, secondMean=0.9, tau=0.5, horizon=2000, repetitions=10, unit=" seconds")

Generating toy Bernoulli data for mu^1 = 0.1, mu^2 = 0.9, tau = 1000 and horizon = 2000...

/usr/local/lib/python3.6/dist-packages/numpy/core/fromnumeric.py:2920: RuntimeWarning: Mean of empty slice.

out=out, **kwargs)

/usr/local/lib/python3.6/dist-packages/numpy/core/_methods.py:85: RuntimeWarning: invalid value encountered in double_scalars

ret = ret.dtype.type(ret / rcount)

| Algorithm | Time |
|------|------|
| Monitored($w=80$, $b=\sqrt{\frac{w}{2} \log(2 T^2)}$) | $0.0321$ seconds |
| CUSUM($\varepsilon=0.5$, $M=50$, $h=$'auto') | $3.71$ seconds |
| PHT($\varepsilon=0.5$, $M=50$, $h=$'auto') | $5.15$ seconds |
| Gaussian-GLR($h_0=1$, $\delta=auto$) | $3.96$ seconds |
| Bernoulli-GLR($h_0=1$, $\delta=auto$) | $2.27$ seconds |
| SubGaussian-GLR($\delta=$0.01, $\sigma=$0.25, joint) | $2.05$ seconds |

CPU times: user 2min 51s, sys: 283 ms, total: 2min 51s

Wall time: 2min 51s

[202]:

%%time

results_T2500 = check_onemeasure(time_efficiency, "Time", firstMean=0.1, secondMean=0.9, tau=0.5, horizon=2500, repetitions=10, unit=" seconds")

Generating toy Bernoulli data for mu^1 = 0.1, mu^2 = 0.9, tau = 1250 and horizon = 2500...

/usr/local/lib/python3.6/dist-packages/numpy/core/fromnumeric.py:2920: RuntimeWarning: Mean of empty slice.

out=out, **kwargs)

/usr/local/lib/python3.6/dist-packages/numpy/core/_methods.py:85: RuntimeWarning: invalid value encountered in double_scalars

ret = ret.dtype.type(ret / rcount)

| Algorithm | Time |
|------|------|
| Monitored($w=80$, $b=\sqrt{\frac{w}{2} \log(2 T^2)}$) | $0.0484$ seconds |
| CUSUM($\varepsilon=0.5$, $M=50$, $h=$'auto') | $5.74$ seconds |
| PHT($\varepsilon=0.5$, $M=50$, $h=$'auto') | $7.52$ seconds |
| Gaussian-GLR($h_0=1$, $\delta=auto$) | $5.5$ seconds |
| Bernoulli-GLR($h_0=1$, $\delta=auto$) | $3.41$ seconds |
| SubGaussian-GLR($\delta=$0.01, $\sigma=$0.25, joint) | $3.18$ seconds |

CPU times: user 4min 13s, sys: 239 ms, total: 4min 14s

Wall time: 4min 14s

CUSUM, PHT and the three GLR tests have running times of the same order of magnitude. Among them, the sub-Gaussian and Bernoulli GLR tests are the fastest in these runs, and PHT is the slowest. Monitored is the only algorithm that is dramatically faster, by one to two orders of magnitude.

When going from a horizon of \(T=100\) up to \(T=2500\), the running time of Monitored grows only mildly (close to linearly in \(T\), i.e. a roughly constant cost per time step), while the running time of CUSUM, PHT and all the GLR tests grows roughly quadratically:

[203]:

data_X = np.array([100, 500, 1000, 2000, 2500])

# data_Y[i] is the list of mean running times of algorithm i, one per horizon
data_Y = [
    [
        np.mean(results_T100, axis=0)[i],
        np.mean(results_T500, axis=0)[i],
        np.mean(results_T1000, axis=0)[i],
        np.mean(results_T2000, axis=0)[i],
        np.mean(results_T2500, axis=0)[i],
    ]
    for i in range(len(all_CD_algorithms))
]

[204]:

import matplotlib.pyplot as plt

[205]:

fig = plt.figure()

# One curve per change-point detection algorithm
for i, alg in enumerate(all_CD_algorithms):
    plt.plot(data_X, data_Y[i], 'o-', label=alg.__name__, lw=3)

plt.legend()
plt.xlabel("Time horizon $T$")
plt.ylabel("Time complexity in seconds")
plt.title("Comparison of time complexity efficiency of different CD algorithms")
plt.show()
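As a quick sanity check of the growth rates, one can look at how the running time changes between consecutive horizons. The sketch below is only illustrative: it hard-codes a few of the mean times printed in the tables above (rather than reusing `results_T100` etc.), and the helper name `local_exponents` is an assumption introduced here. If the time behaves like \(T^b\), then \(\log(t_2/t_1) / \log(T_2/T_1)\) estimates \(b\): a value near 2 indicates quadratic growth, a value near 1 linear growth.

```python
import numpy as np

# Mean running times (in seconds), copied by hand from the tables above.
horizons = np.array([100, 500, 1000, 2000, 2500])
times = {
    "Monitored": np.array([0.000614, 0.00808, 0.0162, 0.0321, 0.0484]),
    "CUSUM": np.array([0.00824, 0.251, 0.943, 3.71, 5.74]),
    "Bernoulli-GLR": np.array([0.0115, 0.194, 0.635, 2.27, 3.41]),
}

def local_exponents(T, t):
    """Hypothetical helper: exponent b with t ~ T^b between consecutive horizons."""
    return np.diff(np.log(t)) / np.diff(np.log(T))

exponents = {name: local_exponents(horizons, t) for name, t in times.items()}

for name, b in exponents.items():
    # One exponent per pair of consecutive horizons: 100->500, 500->1000, ...
    print(f"{name:>14}: " + ", ".join(f"{x:.2f}" for x in b))
```

The entry at index 2 of each array corresponds to doubling the horizon from \(T=1000\) to \(T=2000\), which is the easiest one to read by eye in the tables.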


We can fit the running time \(C^{Algorithm}(T)\) of each algorithm as a function of \(T\), using a model of the form \(C(T) \simeq a T^b + c\). With the function [`scipy.optimize.curve_fit`](https://docs.scipy.org/doc/scipy/reference/generated/scipy.optimize.curve_fit.html), this is very easy:

[206]:

from scipy.optimize import curve_fit

[221]:

def time_complexity_general_shape(T, a, b, c):
    """Model C(T) = a * T^b + c for the running time at horizon T."""
    return a * T**b + c
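When the constant \(c\) is negligible, the model \(C(T) \simeq a T^b\) becomes linear in log-log scale, \(\log C(T) \simeq \log a + b \log T\), so \(b\) can also be estimated with an ordinary linear regression. The sketch below is a cross-check under that assumption, not the method used in this notebook: it hard-codes the mean CUSUM times printed in the tables above and uses `numpy.polyfit`.

```python
import numpy as np

# Mean CUSUM running times (in seconds), copied by hand from the tables above.
horizons = np.array([100, 500, 1000, 2000, 2500])
cusum_times = np.array([0.00824, 0.251, 0.943, 3.71, 5.74])

# Degree-1 fit in log-log scale: log C = b * log T + log a
# (this assumes the additive constant c is negligible).
b, log_a = np.polyfit(np.log(horizons), np.log(cusum_times), deg=1)
a = np.exp(log_a)

print(f"CUSUM (log-log fit): a = {a:.3g}, b = {b:.3g}")
```

Because this two-parameter fit ignores \(c\) and weights the points differently (relative rather than absolute errors), its estimates can differ slightly from those of the three-parameter `curve_fit`.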

[223]:

for i, alg in enumerate(all_CD_algorithms):
    # Least-squares fit of (a, b, c) on the measured mean running times
    popt, _ = curve_fit(time_complexity_general_shape, data_X, data_Y[i])
    a, b, c = popt
    print(f"For algorithm {alg.__name__},\n\ta = {a:.3g}, b = {b:.3g}, c = {c:.3g} is the best fit for C(T) = a T^b + c")

For algorithm Monitored,

a = 1.96e-06, b = 1.28, c = 0.00103 is the best fit for C(T) = a T^b + c

For algorithm CUSUM,

a = 1.21e-06, b = 1.96, c = 0.00036 is the best fit for C(T) = a T^b + c

For algorithm PHT,